AI Therapy And The Surveillance State: A Critical Examination

Data Collection and Privacy Concerns in AI Therapy

AI therapy platforms collect vast amounts of personal data, raising significant privacy concerns. This data, crucial for training algorithms and personalizing treatment, includes highly sensitive information. Understanding the scope of this data collection and the potential for misuse is paramount.

The Scope of Data Collection

AI therapy applications gather a wide array of personal data, far beyond what traditional therapy might involve. This includes:

- Speech patterns: Analysis of tone, pace, and word choice reveals emotional states and underlying concerns.

- Emotional responses: Facial expressions, physiological data (if collected), and textual responses are analyzed to gauge emotional well-being.

- Personal history: Detailed information about life experiences, relationships, and mental health history is often required.

- Treatment progress: The platform tracks user engagement, response to interventions, and overall progress in therapy.

- Geolocation data: Some apps track location, potentially revealing sensitive information about the user's environment and activities.

Because this data is so sensitive, the risks of misuse are considerable. Data breaches and unauthorized access are obvious threats, but so is the use of this information for purposes unrelated to therapeutic benefit, such as targeted advertising, profiling, or even discriminatory practices. These possibilities are a major cause for concern.

Data Security and Encryption

Robust data security and encryption are crucial for protecting user data in AI therapy, yet cyberattacks evolve constantly. Many platforms disclose little about their specific security protocols, leaving users uncertain about the safety of their highly sensitive information. Regulation also tends to lag behind technological advances, leaving significant gaps in data protection for AI therapy platforms. Stricter regulation and greater transparency are clearly needed.

Informed Consent and User Awareness

True informed consent requires that users fully understand how an AI therapy platform collects, uses, and shares their data. In practice, lengthy and complex privacy policies often prevent this, and many users agree to terms they have not read or understood. To address this:

- Clear and concise privacy policies: Platforms must provide readily accessible and easily understandable information regarding data usage and sharing practices.

- Educational initiatives: Public awareness campaigns are needed to educate users about the privacy implications of using AI-powered therapy tools.

Algorithmic Bias and Discrimination in AI Therapy

The algorithms powering AI therapy are trained on data, and if that data reflects existing societal biases, the algorithm will likely perpetuate and amplify those biases. This can lead to discriminatory outcomes and unequal access to effective mental healthcare.

Potential for Bias in AI Algorithms

AI algorithms, trained on potentially biased datasets, may exhibit biases that affect their diagnostic accuracy and treatment recommendations. This can disproportionately impact marginalized communities.

- Impact on marginalized communities: Individuals from racial or ethnic minorities, LGBTQ+ individuals, or those with disabilities may receive inaccurate or inappropriate treatment due to algorithmic bias.

- Addressing algorithmic bias: Creating diverse and representative datasets for algorithm training, along with rigorous testing for bias, is crucial for mitigating these issues. This requires conscious effort to include data from a wide range of backgrounds and experiences.

Lack of Human Oversight and Accountability

Over-reliance on AI algorithms without sufficient human oversight can lead to serious errors and inappropriate treatment recommendations. Establishing clear lines of accountability is vital.

- Importance of human-in-the-loop systems: Maintaining a critical role for human clinicians in the AI therapy process is essential to ensure accuracy and responsible decision-making.

- Establishing clear lines of accountability: Clear guidelines must be established to determine responsibility for any harm or inaccuracies caused by the AI system.

The Surveillance State and the Potential for Misuse

The data collected by AI therapy platforms could be accessed and misused by third parties, raising concerns about surveillance and discrimination.

Monitoring and Data Sharing with Third Parties

The potential for data sharing with insurance companies, employers, or law enforcement raises serious ethical concerns.

- Erosion of patient confidentiality: Sharing sensitive mental health information without explicit and informed consent undermines patient confidentiality and trust.

- Need for stringent data protection regulations: Robust regulations are crucial to prevent unauthorized access and misuse of data collected by AI therapy platforms.

The Chilling Effect on Self-Disclosure

Concerns about surveillance and data sharing could deter individuals from seeking help, potentially harming their mental health.

- Impact on vulnerable populations: Individuals from already marginalized groups may be particularly hesitant to disclose sensitive information due to fear of negative consequences.

- Balancing therapeutic benefit with privacy concerns: Striking a balance between the benefits of AI therapy and the need to protect user privacy is a critical challenge.

Conclusion

AI therapy holds immense promise for improving mental healthcare access. However, the potential for misuse and the erosion of privacy raise serious ethical and societal concerns. Addressing challenges related to data privacy, algorithmic bias, and the potential for surveillance is crucial for responsible AI therapy development and deployment. Prioritizing user privacy, informed consent, and transparency, along with establishing clear accountability, is paramount. Further research and robust regulation are urgently needed to navigate the complex intersection of AI therapy and the surveillance state. We must work together to ensure that this innovative technology benefits society while upholding fundamental human rights. Let’s ensure the responsible development and use of AI therapy, safeguarding both mental health and individual liberties.

Featured Posts

-

The Role Of Jeremy Arndt In Bvgs Negotiation Process

May 16, 2025

The Role Of Jeremy Arndt In Bvgs Negotiation Process

May 16, 2025 -

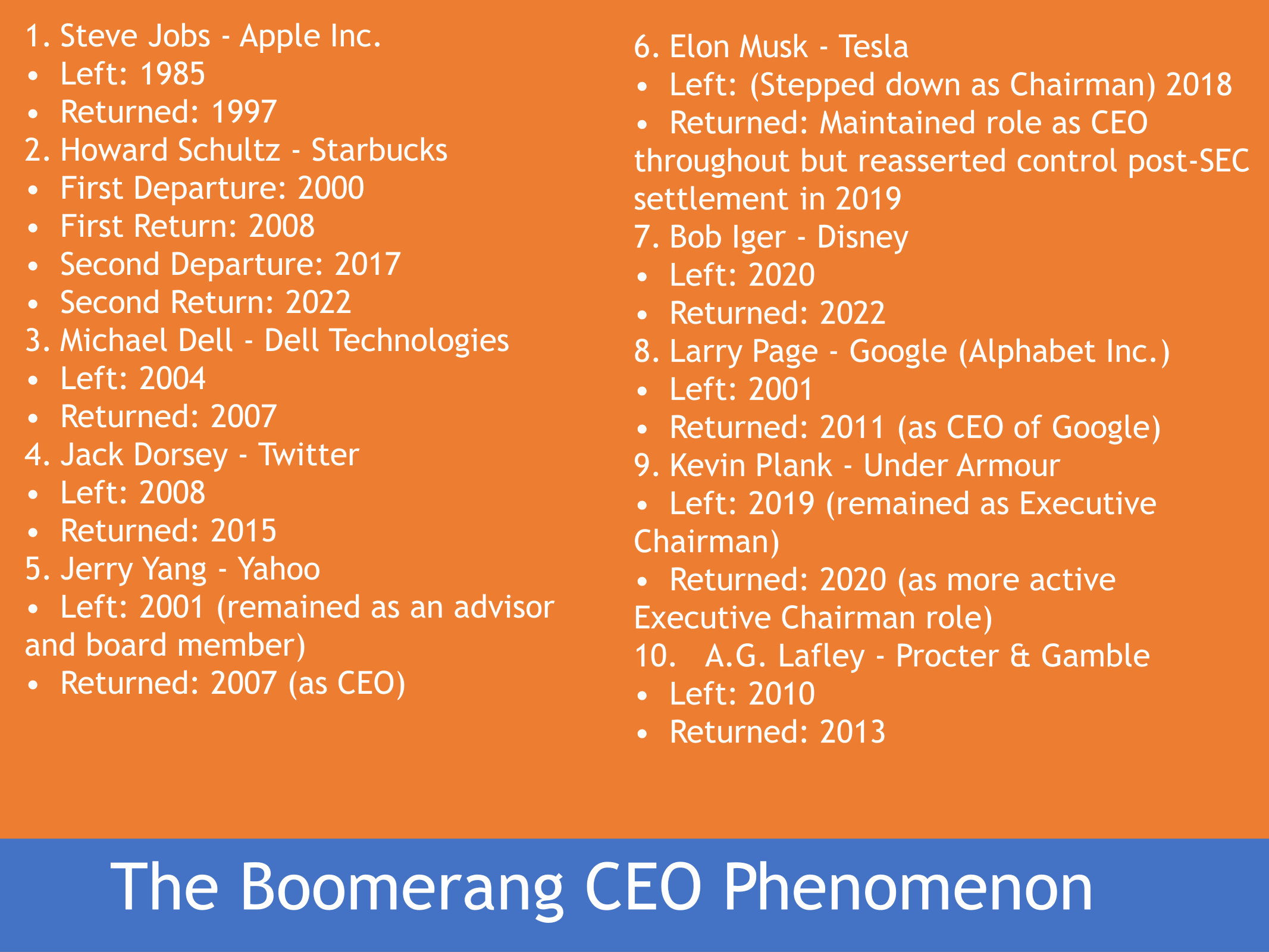

Stephen Hemsleys Return To United Health Can A Boomerang Ceo Deliver

May 16, 2025

Stephen Hemsleys Return To United Health Can A Boomerang Ceo Deliver

May 16, 2025 -

Michael Chandler Paddy Pimbletts Ufc 314 Fight Will Be A Tough Test

May 16, 2025

Michael Chandler Paddy Pimbletts Ufc 314 Fight Will Be A Tough Test

May 16, 2025 -

Elaqt Twm Krwz Wana Dy Armas Hqyqt Alshayeat Wakhtlaf Alaemar

May 16, 2025

Elaqt Twm Krwz Wana Dy Armas Hqyqt Alshayeat Wakhtlaf Alaemar

May 16, 2025 -

Brunsons Injury A Critical Look At The Knicks Offensive Vulnerability

May 16, 2025

Brunsons Injury A Critical Look At The Knicks Offensive Vulnerability

May 16, 2025