Analysis Of Thompson's Monte Carlo Performance

Understanding the Mechanics of Thompson Sampling in Monte Carlo Simulations

Thompson Sampling is a Bayesian approach to the multi-armed bandit problem, and it fits naturally into Monte Carlo simulation because every decision is driven by a random draw from a posterior distribution. It uses Bayesian updating to learn the unknown reward distribution of each action. Instead of selecting actions directly by their estimated expected rewards (as ε-greedy does on its greedy steps), Thompson Sampling draws one sample from each action's posterior over its expected reward and selects the action whose sample is highest.

Let's illustrate with a simple example: imagine choosing between two slot machines with unknown payout probabilities. Thompson Sampling starts with a prior distribution over each machine's payout probability that represents our initial beliefs (e.g., a Beta distribution). After each pull, the posterior of the machine that was pulled is updated using Bayes' theorem to reflect the observed win or loss. For the next decision, one payout probability is sampled from each machine's current posterior, and the machine with the higher sampled probability is pulled.
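For the slot-machine example, the Bayesian update has a closed form when the prior is a Beta distribution and each pull is a win or a loss. The following is a worked sketch under that assumption; the symbols θ (payout probability), α and β (prior parameters), and s and f (observed wins and losses) are introduced here for illustration and do not appear in the original text.

```latex
\theta \sim \mathrm{Beta}(\alpha, \beta)
\quad\Longrightarrow\quad
\theta \mid (s \text{ wins},\ f \text{ losses}) \sim \mathrm{Beta}(\alpha + s,\ \beta + f),
\qquad
\mathbb{E}[\theta \mid s, f] = \frac{\alpha + s}{\alpha + \beta + s + f}
```

For instance, starting from a uniform Beta(1, 1) prior and observing 3 wins and 1 loss gives a Beta(4, 2) posterior with mean 4/6 ≈ 0.67.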

- Bayesian Updating: This iterative process refines our understanding of the reward distributions with each observation.

- Sampling from Posterior Distribution: The act of sampling introduces randomness, crucial for exploration and preventing premature convergence to suboptimal actions.

- Prior Distributions: The choice of prior significantly influences early exploration. Informative priors can steer the algorithm toward actions believed to be good, while weakly informative priors (such as a uniform Beta prior) let the observed data dominate.

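- Action Selection: The action with the highest sampled value is chosen. Actions with wide, uncertain posteriors occasionally produce the highest sample (exploration), while well-estimated good actions win most draws (exploitation).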
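The four ingredients above can be put together in a few lines. The following is a minimal sketch, not a reference implementation: it assumes Bernoulli (win/lose) rewards and a uniform Beta(1, 1) prior on each arm, and the function name `thompson_sampling` and the payout probabilities 0.3 and 0.6 are hypothetical values chosen for illustration.

```python
import random

def thompson_sampling(true_probs, n_rounds, seed=0):
    """Beta-Bernoulli Thompson Sampling on a simulated bandit.

    Returns the pull counts and the final Beta parameters per arm.
    """
    rng = random.Random(seed)
    n_arms = len(true_probs)
    alpha = [1.0] * n_arms  # prior pseudo-count of wins (assumed Beta(1, 1) prior)
    beta = [1.0] * n_arms   # prior pseudo-count of losses
    pulls = [0] * n_arms

    for _ in range(n_rounds):
        # Sampling from the posterior: one plausible payout probability per arm
        samples = [rng.betavariate(alpha[i], beta[i]) for i in range(n_arms)]
        # Action selection: pull the arm whose sample is highest
        arm = max(range(n_arms), key=lambda i: samples[i])
        # Simulate the environment (true_probs is unknown to the algorithm)
        reward = 1 if rng.random() < true_probs[arm] else 0
        # Bayesian updating: conjugate Beta update for the pulled arm only
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1

    return pulls, alpha, beta

pulls, alpha, beta = thompson_sampling([0.3, 0.6], n_rounds=2000)
print("pulls per arm:", pulls)
```

With a gap this large between the two arms, most pulls should end up on the second machine, although the exact counts depend on the seed.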

Evaluating Thompson Sampling's Performance Metrics

Evaluating the effectiveness of Thompson Sampling in Monte Carlo simulations means looking at several key performance metrics:

- Regret: Regret is the cumulative difference between the reward that would have been obtained by always choosing the optimal action and the reward the algorithm actually obtained. Lower regret indicates better performance.

- Convergence Rate: This refers to how quickly the algorithm settles on a near-optimal strategy, i.e., how quickly the growth of regret slows down. On stationary problems, good bandit algorithms, Thompson Sampling included, achieve cumulative regret that grows only logarithmically in the number of rounds. Faster convergence is desirable.

- Computational Complexity: Each round requires one posterior sample per action. This is cheap when the posterior has a closed form (for example with conjugate priors), but it can become the bottleneck in high-dimensional problems where sampling requires approximate inference. The cost per round determines whether the algorithm is feasible for large-scale applications.

- Sensitivity to Prior Distributions: The choice of prior distributions can significantly influence the algorithm's performance, particularly in early stages. Robustness to prior specification is a valuable characteristic.
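Regret is simple to compute in a simulation, where the true expected rewards are known. The sketch below assumes the expected (pseudo-)regret form, which sums the gap between the best arm's mean and the chosen arm's mean; the helper name `cumulative_regret` and the example inputs are hypothetical.

```python
from itertools import accumulate

def cumulative_regret(true_means, chosen_arms):
    """Return the running total of (best mean - chosen arm's mean) per round."""
    best = max(true_means)
    gaps = [best - true_means[arm] for arm in chosen_arms]
    return list(accumulate(gaps))

# Two arms with means 0.3 and 0.6; the algorithm picked arm 0 twice in five rounds
regret = cumulative_regret([0.3, 0.6], [0, 1, 1, 0, 1])
print(regret)
```

Plotting this curve against the round number is a common way to judge convergence: a curve that flattens indicates the algorithm has mostly stopped choosing suboptimal arms.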

Comparative Analysis: Thompson Sampling vs. Other Monte Carlo Techniques

Thompson Sampling offers several advantages over other popular bandit strategies used in simulation studies, such as ε-greedy and Upper Confidence Bound (UCB) algorithms, particularly in scenarios with complex reward distributions and significant uncertainty.

- Scenarios where Thompson Sampling excels: Thompson Sampling is especially effective when robust exploration is required, because its exploration shrinks automatically as the posteriors concentrate. It can also be adapted to non-stationary reward distributions, where the optimal action changes over time, for example by discounting older observations; the basic algorithm assumes stationary rewards.

- Scenarios where other methods might be preferable: ε-greedy is simpler to implement and needs no probabilistic model, which may be enough for very basic problems. UCB is deterministic given the history and can be more convenient in some settings with stationary rewards.

- Relevant research and empirical findings: Empirical studies have reported that Thompson Sampling is competitive with, and often better than, ε-greedy and UCB-style algorithms on multi-armed bandit problems. Results in high-dimensional and non-stationary settings depend more heavily on the model and on how posterior sampling is approximated.
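A comparison like this can be reproduced on a small scale. The sketch below runs the three strategies on the same simulated Bernoulli bandit and reports total expected regret. It is an illustration under stated assumptions, not a benchmark: the function `run_bandit`, the payout probabilities, ε = 0.1, and the standard UCB1 exploration bonus are all choices made here rather than taken from the original text.

```python
import math
import random

def run_bandit(policy, true_probs, n_rounds, seed=0, epsilon=0.1):
    """Simulate one Bernoulli bandit run and return the total expected regret."""
    rng = random.Random(seed)
    k = len(true_probs)
    wins = [0] * k    # observed successes per arm
    pulls = [0] * k   # number of pulls per arm
    best = max(true_probs)
    regret = 0.0

    for t in range(1, n_rounds + 1):
        if policy == "thompson":
            # One draw per arm from Beta(1 + wins, 1 + losses)
            scores = [rng.betavariate(1 + wins[i], 1 + pulls[i] - wins[i])
                      for i in range(k)]
            arm = max(range(k), key=scores.__getitem__)
        elif policy == "ucb1":
            untried = [i for i in range(k) if pulls[i] == 0]
            if untried:
                arm = untried[0]  # pull every arm once before using the bound
            else:
                scores = [wins[i] / pulls[i]
                          + math.sqrt(2 * math.log(t) / pulls[i])
                          for i in range(k)]
                arm = max(range(k), key=scores.__getitem__)
        elif policy == "epsilon_greedy":
            if rng.random() < epsilon:
                arm = rng.randrange(k)  # explore uniformly at random
            else:
                means = [wins[i] / pulls[i] if pulls[i] else 0.0
                         for i in range(k)]
                arm = max(range(k), key=means.__getitem__)
        else:
            raise ValueError(f"unknown policy: {policy}")

        reward = 1 if rng.random() < true_probs[arm] else 0
        wins[arm] += reward
        pulls[arm] += 1
        regret += best - true_probs[arm]

    return regret

results = {p: run_bandit(p, [0.3, 0.6], n_rounds=2000)
           for p in ("thompson", "ucb1", "epsilon_greedy")}
for name, value in results.items():
    print(f"{name}: total regret {value:.1f}")
```

A single run with one seed is noisy, so averaging over many seeds and several sets of payout probabilities gives a fairer picture before drawing conclusions about which strategy is preferable.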

Practical Applications and Case Studies of Thompson Sampling Monte Carlo

Thompson Sampling has been applied in a range of real-world settings:

- Clinical Trials: Optimizing treatment strategies by dynamically allocating patients to different treatments based on observed outcomes.

- Finance (Portfolio Optimization): Developing dynamic trading strategies that adapt to changing market conditions.

- Robotics (Path Planning): Finding optimal paths in uncertain environments by balancing exploration of new routes with exploitation of known paths.

Conclusion: Optimizing Your Monte Carlo Simulations with Thompson Sampling

This analysis has highlighted the potential of Thompson Sampling to improve the performance of Monte Carlo simulations. It handles uncertainty and complex reward distributions well, but its computational cost and its sensitivity to prior distributions need careful consideration. With an understanding of these trade-offs and appropriately chosen priors, Thompson Sampling can yield more efficient and accurate results. The best way to judge its value is to experiment with it in your own application and compare its regret against a simpler baseline.

Featured Posts

-

L Etoile De Mer Un Symbole Pour La Reconnaissance Des Droits De La Nature

May 31, 2025

L Etoile De Mer Un Symbole Pour La Reconnaissance Des Droits De La Nature

May 31, 2025 -

Daily Press Almanac Local News Sports Updates And Job Postings

May 31, 2025

Daily Press Almanac Local News Sports Updates And Job Postings

May 31, 2025 -

Indian Wells Surprise Griekspoor Upsets Zverev

May 31, 2025

Indian Wells Surprise Griekspoor Upsets Zverev

May 31, 2025 -

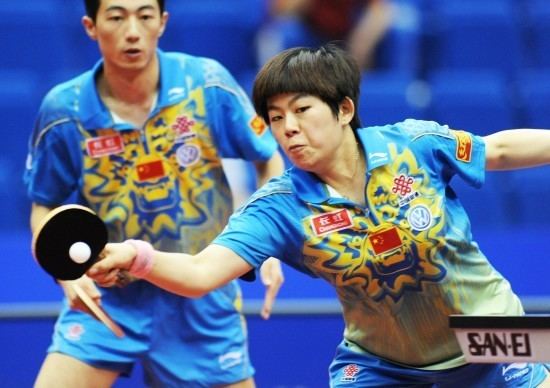

Table Tennis Worlds Wang Sun Secure Third Straight Mixed Doubles Gold

May 31, 2025

Table Tennis Worlds Wang Sun Secure Third Straight Mixed Doubles Gold

May 31, 2025 -

Analysis Slight Increase In Covid 19 Infections In India And The Xbb 1 16 Variant

May 31, 2025

Analysis Slight Increase In Covid 19 Infections In India And The Xbb 1 16 Variant

May 31, 2025