ChatGPT Under FTC Scrutiny: Privacy Concerns And Regulatory Challenges

FTC's Investigation into ChatGPT: Data Privacy Violations

The FTC, which is responsible for protecting consumers from unfair or deceptive business practices, including those involving data privacy, has launched an investigation into the data handling practices behind ChatGPT, the AI model developed by OpenAI. The investigation stems from growing concerns about potential data privacy violations related to the vast amounts of data used to train and operate the model. The FTC enforces federal laws such as Section 5 of the FTC Act and the Children's Online Privacy Protection Act (COPPA). State laws such as the California Consumer Privacy Act (CCPA) are enforced by state authorities rather than the FTC, but they are part of the compliance landscape that AI companies must navigate.

Potential privacy violations under scrutiny include:

- Data collection practices and transparency: The extent and nature of data collected by ChatGPT, and the clarity with which this is communicated to users, are key areas of concern. The lack of transparency regarding what data is collected and how it's used raises serious questions.

- Data security measures and potential vulnerabilities: The security measures implemented to protect user data from unauthorized access, breaches, or misuse are being assessed. The potential vulnerabilities within the system are of significant interest to the FTC.

- Compliance with existing privacy laws (e.g., COPPA, CCPA): Whether ChatGPT's data handling complies with existing privacy regulations is a central question, particularly concerning children's data under COPPA, which the FTC enforces, and California residents' rights under the CCPA, which is enforced at the state level.

- Use of personal data for training and model improvement: The investigation will likely focus on how personal data is used to train and improve the ChatGPT model, and whether this usage aligns with user expectations and legal requirements.

- Potential for misuse of collected data: The FTC will analyze the potential risks of misuse of collected data, including the risk of unauthorized disclosure, identity theft, or discriminatory outcomes.

Specific examples of reported issues, while not yet publicly released by the FTC, are likely to be part of the ongoing investigation. We will update this article as more information becomes available.

Regulatory Challenges Facing Generative AI Like ChatGPT

The rapid advancement of generative AI, exemplified by ChatGPT, presents novel regulatory challenges. Because the technology is so new, existing legal frameworks may not adequately address the unique privacy and ethical concerns it raises. Balancing innovation with the protection of consumer rights is a complex task that demands careful consideration.

Key regulatory hurdles include:

- Defining "personal data" in the context of AI training data: The very definition of "personal data" needs to be clarified in the context of AI training datasets, which often contain anonymized or aggregated information.

- Establishing accountability for AI-generated outputs: Determining accountability when AI models generate inaccurate, biased, or harmful content remains a significant challenge.

- Ensuring transparency and explainability in AI decision-making: Understanding how AI models arrive at their conclusions is crucial for trust and accountability. The "black box" nature of some AI systems makes this difficult and poses a challenge for regulators.

- Addressing bias and discrimination in AI models: AI models trained on biased data can perpetuate and amplify existing societal biases. Regulating to mitigate this risk is a critical concern.

- International harmonization of AI regulations: The global nature of AI necessitates international cooperation to establish consistent and effective regulations.

Ongoing legislative efforts, both in the US and internationally, aim to address these challenges, but the pace of technological advancement often outstrips the development of regulatory frameworks.

The Impact on ChatGPT's Future and the Broader AI Industry

The FTC investigation could have profound consequences for ChatGPT's future development and deployment. Depending on the findings, OpenAI might face significant fines, operational restrictions, or mandated changes to its data handling practices. This, in turn, will have a ripple effect on the broader AI industry, prompting other companies to re-evaluate their own data practices and risk management strategies. Consumer trust in AI technologies could also be significantly impacted, affecting adoption rates and market growth.

Best Practices for Data Privacy in AI Development

To mitigate privacy risks, companies developing and deploying AI models should adopt best practices, including:

- Data minimization and purpose limitation: Collect only the data necessary for the intended purpose and use it only for that purpose.

- Robust data security measures (encryption, access controls): Implement strong security measures to protect data from unauthorized access, breaches, and misuse.

- Transparent data collection and usage policies: Clearly communicate to users what data is collected, how it’s used, and with whom it’s shared.

- User consent and control over data: Obtain informed consent from users before collecting and using their data, and provide them with mechanisms to control their data.

- Regular audits and compliance assessments: Conduct regular audits to ensure compliance with data privacy regulations and identify potential vulnerabilities.
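
As an illustration of how the first and fourth practices above might look in code, here is a minimal Python sketch. It assumes a hypothetical record layout, a hypothetical allow-list of fields (`ALLOWED_FIELDS`), and a hypothetical `UserConsent` structure; none of these come from OpenAI or any real system, and the email pattern is deliberately simple rather than a complete personal-data detector.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allow-list: only the fields the stated purpose actually needs.
ALLOWED_FIELDS = {"prompt", "language"}

# Simple illustrative pattern for email addresses (not exhaustive).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


@dataclass
class UserConsent:
    """Hypothetical consent record: which purposes a user has agreed to."""
    user_id: str
    purposes: set = field(default_factory=set)  # e.g. {"service", "training"}


def minimize(record: dict) -> dict:
    """Data minimization: keep allow-listed fields, mask emails in text."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    return {
        k: EMAIL_RE.sub("[EMAIL]", v) if isinstance(v, str) else v
        for k, v in kept.items()
    }


def records_for_purpose(records: list, consents: dict, purpose: str) -> list:
    """Purpose limitation: return minimized records only for users
    who have consented to the given purpose."""
    selected = []
    for record in records:
        consent = consents.get(record.get("user_id"))
        if consent is not None and purpose in consent.purposes:
            selected.append(minimize(record))
    return selected


if __name__ == "__main__":
    consents = {
        "u1": UserConsent("u1", {"service", "training"}),
        "u2": UserConsent("u2", {"service"}),
    }
    records = [
        {"user_id": "u1", "prompt": "Mail me at a.b@example.com",
         "language": "en", "ip": "203.0.113.7"},
        {"user_id": "u2", "prompt": "hello", "language": "en",
         "ip": "203.0.113.8"},
    ]
    # Only u1 consented to "training"; the IP address and user ID are dropped.
    print(records_for_purpose(records, consents, "training"))
```

The allow-list approach is a design choice: any field not explicitly named is dropped by default, so newly added fields are not swept into training data until someone decides they are needed.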

Established AI companies such as Google and Microsoft have published policies that address many of these practices. However, continuous vigilance and adaptation are critical in this rapidly evolving field.

Conclusion

The FTC's scrutiny of ChatGPT highlights the crucial need for responsible AI development and robust data privacy measures. The investigation underscores the significant regulatory challenges facing the burgeoning generative AI industry. The potential for data privacy violations, coupled with the need for clear accountability and transparency, necessitates a proactive approach to data governance. Stay updated on the latest developments in AI regulation and advocate for ethical AI practices. Understanding the implications of the FTC's actions on ChatGPT is crucial for the future of AI.

Featured Posts

-

Ftc To Appeal Activision Blizzard Acquisition Decision

Apr 29, 2025

Ftc To Appeal Activision Blizzard Acquisition Decision

Apr 29, 2025 -

Global Competition Heats Up The Race To Attract Us Researchers Post Funding Cuts

Apr 29, 2025

Global Competition Heats Up The Race To Attract Us Researchers Post Funding Cuts

Apr 29, 2025 -

Missing British Paralympian Las Vegas Police Appeal For Information

Apr 29, 2025

Missing British Paralympian Las Vegas Police Appeal For Information

Apr 29, 2025 -

Solving The Nyt Spelling Bee February 25 2025 Puzzle And Spangram

Apr 29, 2025

Solving The Nyt Spelling Bee February 25 2025 Puzzle And Spangram

Apr 29, 2025 -

Why You Tube Is Becoming A Go To For Older Viewers Entertainment

Apr 29, 2025

Why You Tube Is Becoming A Go To For Older Viewers Entertainment

Apr 29, 2025

Latest Posts

-

Unexpected Family Ties Nba Legend And Ru Pauls Drag Race Contestant

Apr 30, 2025

Unexpected Family Ties Nba Legend And Ru Pauls Drag Race Contestant

Apr 30, 2025 -

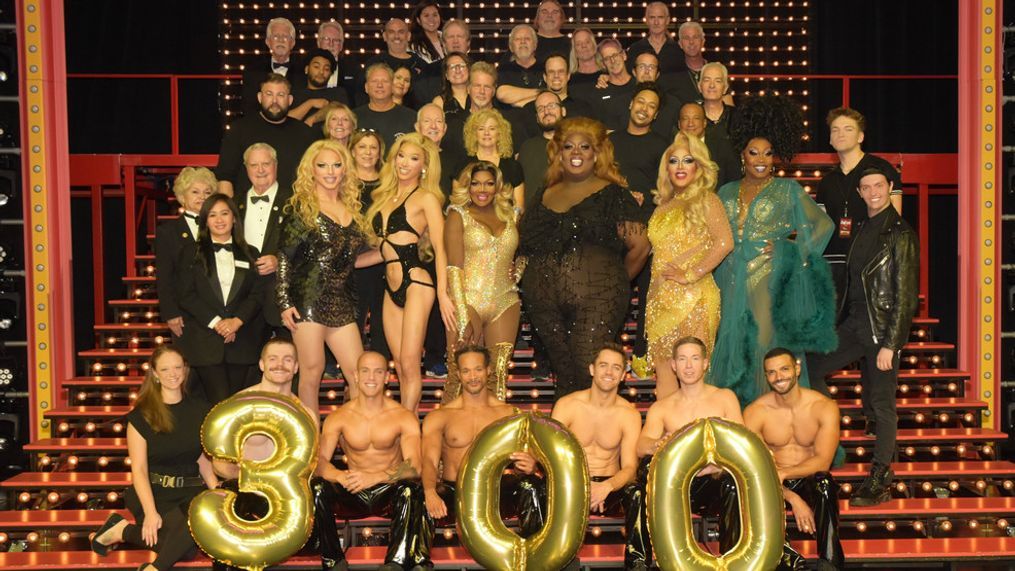

Watch Ru Pauls Drag Race Live 1000th Show Global Livestream From Vegas

Apr 30, 2025

Watch Ru Pauls Drag Race Live 1000th Show Global Livestream From Vegas

Apr 30, 2025 -

Ru Pauls Drag Race Uncovering An Nba Legends Secret Paternity

Apr 30, 2025

Ru Pauls Drag Race Uncovering An Nba Legends Secret Paternity

Apr 30, 2025 -

Ru Pauls Drag Race Live Las Vegas 1000th Show Global Broadcast

Apr 30, 2025

Ru Pauls Drag Race Live Las Vegas 1000th Show Global Broadcast

Apr 30, 2025 -

Global Livestream Ru Pauls Drag Race Live Hits 1000 Shows In Vegas

Apr 30, 2025

Global Livestream Ru Pauls Drag Race Live Hits 1000 Shows In Vegas

Apr 30, 2025