Debunking The Myth Of AI Learning: A Practical Guide To Responsible AI

Understanding the Reality of AI Learning

AI as a Tool, Not a Mind

It's crucial to understand that AI systems, even those employing sophisticated machine learning techniques, are tools trained on data, not sentient beings with independent learning capabilities. The "learning" in AI learning refers to algorithms adjusting their parameters based on input data to improve performance on specific tasks. This is fundamentally different from human learning, which involves understanding, reasoning, and adaptation in far more complex ways.
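To make "adjusting parameters" concrete, here is a minimal sketch. It assumes a one-variable linear model trained with plain gradient descent on mean squared error. The function name `fit_linear` and the toy data are hypothetical illustrations, not any library's API. Nothing in the loop resembles understanding: the code repeatedly nudges two numbers so that an error score goes down.

```python
def fit_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error (hypothetical helper).

    'Learning' here is nothing more than repeatedly nudging w and b
    in the direction that lowers the average squared error.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_linear(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")  # prints: w=2.000, b=1.000
```

The model recovers the slope and intercept only because the data contains them. It has no notion of what x or y mean.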

- The role of data in training AI: AI models are trained on vast amounts of data, and their performance is directly dependent on the quality and representativeness of this data. Garbage in, garbage out, as the saying goes.

- Limitations of current AI: Current AI systems excel at specific, well-defined tasks but lack the general intelligence and adaptability of humans. They cannot independently learn to perform new tasks outside their training domain.

- Machine learning vs. human intelligence: Machine learning focuses on identifying patterns and making predictions based on data, while human intelligence encompasses a far broader range of cognitive abilities, including reasoning, creativity, and emotional intelligence. This gap matters for responsible AI because it defines what a system can and cannot be trusted to do without human judgment.

The Importance of Human Oversight

The development and deployment of AI systems require significant human involvement. Human programmers, engineers, and ethicists design, train, and monitor AI systems to keep them operating responsibly. AI learning is not a self-directed process.

- Bias detection and mitigation: AI models can inherit and amplify biases present in the training data, leading to unfair or discriminatory outcomes. Human oversight is crucial in detecting and mitigating these biases.

- Ethical considerations in AI development: Ethical considerations must be integrated throughout the AI lifecycle, from data collection to deployment and monitoring. This includes considering the potential societal impact and ensuring fairness, transparency, and accountability.

- Ongoing human monitoring and intervention: Even after deployment, AI systems require continuous monitoring and intervention to ensure they function as intended and do not produce unintended or harmful consequences.
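One common way to keep humans in the loop after deployment is to act automatically only on confident predictions and to send everything else to a reviewer. The sketch below assumes the model outputs a probability for the positive class. The 0.9 threshold and the names `route_prediction` and `Decision` are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    label: int          # model's predicted class (0 or 1)
    confidence: float   # model's probability for the predicted class
    needs_review: bool  # True if a human must confirm before acting


def route_prediction(case_id: str, prob_positive: float, threshold: float = 0.9) -> Decision:
    """Hypothetical router: accept confident predictions, flag the rest for a human."""
    label = 1 if prob_positive >= 0.5 else 0
    confidence = prob_positive if label == 1 else 1.0 - prob_positive
    return Decision(case_id, label, confidence, needs_review=confidence < threshold)


# Hypothetical model scores for three incoming cases
scores = {"a": 0.97, "b": 0.55, "c": 0.04}
decisions = [route_prediction(cid, p) for cid, p in scores.items()]
review_queue = [d.case_id for d in decisions if d.needs_review]
print(review_queue)  # ['b']
```

The threshold trades reviewer workload against risk. Choosing it is a policy decision made by people, not something the model sets for itself.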

Debunking Common Misconceptions about AI Learning

Myth #1: AI Learns Like Humans

A common misconception is that AI learns in the same way as humans. This is fundamentally incorrect. Human learning involves understanding, reasoning, and adaptation in a complex, context-dependent manner. AI learning, on the other hand, is based on statistical patterns and correlations within the data. Responsible AI development requires acknowledging this distinction.

- AI excels at specific tasks: AI can outperform humans in narrow domains like image recognition or game playing.

- AI struggles with generalization: AI models often struggle to generalize their knowledge to new, unseen situations, unlike humans who can readily apply learned concepts to novel contexts.

- Transfer learning limitations: While transfer learning allows applying knowledge from one task to another, it is still limited and requires careful human oversight.
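A small numeric illustration of the generalization gap follows. It assumes a straight-line model fitted by ordinary least squares to data that actually follows y = x², observed only for x between 0 and 1. Inside that range the fit is close; far outside it the error is enormous, because the model captured only a local pattern. The helper names are hypothetical.

```python
def least_squares_line(xs, ys):
    """Closed-form ordinary least squares fit of y ~ w*x + b (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


# Hypothetical training data: only x in [0, 1], true relationship y = x^2
train_x = [i / 10 for i in range(11)]
train_y = [x ** 2 for x in train_x]
w, b = least_squares_line(train_x, train_y)


def predict(x):
    return w * x + b


in_range_error = abs(predict(0.5) - 0.5 ** 2)
out_of_range_error = abs(predict(10.0) - 10.0 ** 2)
print(f"error at x=0.5: {in_range_error:.3f}")   # about 0.1
print(f"error at x=10:  {out_of_range_error:.3f}")  # about 90
```

The fitted line (w = 1, b = -0.15) is a reasonable summary of the training range and useless beyond it; nothing in the model signals that it has left familiar territory.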

Myth #2: AI is Self-Improving

Another misconception is that AI systems autonomously improve without human intervention. While AI models can adjust their parameters based on data, they do not self-improve in a meaningful sense. Continuous monitoring, retraining, and updates are essential for maintaining accuracy and preventing degradation.

- Model retraining necessity: AI models need to be regularly retrained with new data to maintain accuracy and adapt to changing conditions.

- Challenges of maintaining accuracy over time: The performance of AI models can degrade over time due to data drift (changes in the distribution of inputs), concept drift (changes in the relationship between inputs and the correct outputs), or other factors.

- Model decay: This refers to the gradual decline in performance of AI models over time, highlighting the need for continuous monitoring and retraining within responsible AI practices.
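Monitoring for drift can start with something as simple as comparing how a feature is distributed in production against how it was distributed at training time. The sketch below computes the Population Stability Index (PSI) over pre-binned proportions. The bins, example proportions, and the 0.2 alert threshold are assumptions; 0.2 is a commonly cited rule of thumb, not a universal standard.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions.

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    A small eps guards against empty bins (log of zero).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical share of one feature's values falling into four bins
training_dist = [0.25, 0.25, 0.25, 0.25]
stable_dist = [0.24, 0.26, 0.25, 0.25]
shifted_dist = [0.05, 0.15, 0.30, 0.50]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per application
for name, dist in [("stable", stable_dist), ("shifted", shifted_dist)]:
    psi = population_stability_index(training_dist, dist)
    status = "investigate/retrain" if psi > DRIFT_THRESHOLD else "ok"
    print(f"{name}: PSI={psi:.3f} -> {status}")
```

PSI only detects shifts in the inputs. Catching concept drift also requires comparing predictions against ground-truth labels as they become available.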

Myth #3: AI is Objective and Unbiased

AI systems are not inherently objective or unbiased. Biases present in the training data can be amplified and reflected in the AI's outputs. This underscores the critical role of diverse and representative datasets and meticulous data curation. Responsible AI requires acknowledging and mitigating this inherent risk.

- Common biases in AI: Gender, racial, and socioeconomic biases are frequently encountered in AI systems.

- Techniques for mitigating bias: Methods for mitigating bias include data augmentation, algorithmic fairness techniques, and careful selection of training data.

- Algorithmic transparency: Transparency in AI algorithms helps identify and address potential sources of bias.
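Detecting bias usually starts with measuring it. The sketch below assumes binary predictions and a single group attribute. It computes each group's positive-prediction rate, the demographic parity difference (highest rate minus lowest) and the disparate impact ratio (lowest rate divided by highest). The data and function names are hypothetical, and the often-quoted 0.8 "four-fifths" benchmark for the ratio is a screening heuristic, not proof of fairness or unfairness.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group (hypothetical helper)."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


# Hypothetical loan-approval predictions (1 = approve) for two groups
preds = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
report = fairness_report(preds, groups)
print(report)
```

Here group A is approved 80% of the time and group B 40%, giving an impact ratio of 0.5. A gap like this is a signal to investigate the data and model, not an automatic verdict.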

Practical Steps Towards Responsible AI Learning

Data Governance and Bias Mitigation

Creating ethical and unbiased datasets is paramount for responsible AI learning. This involves rigorous data governance practices and proactive bias mitigation strategies.

- Data cleaning and pre-processing: Removing or correcting errors and inconsistencies in data is crucial for improving AI model accuracy and reducing bias.

- Identifying and mitigating biases: Auditing datasets for under-represented groups and skewed label rates, and then rebalancing, reweighting, or collecting additional data where needed, is critical for ensuring fair and equitable AI outcomes.

- Diverse teams in data collection and analysis: Including diverse perspectives in data collection and analysis can help identify and address potential biases.
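As one concrete mitigation step, the sketch below applies reweighing, a pre-processing technique described by Kamiran and Calders. Each training example gets the weight P(group) × P(label) / P(group, label), so that in the weighted data group membership and label are statistically independent. The dataset and function name are hypothetical; the resulting weights would be passed to any learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights making group and label independent (hypothetical helper).

    weight(g, y) = P(g) * P(y) / P(g, y)
    Under-represented (group, label) combinations get weights above 1,
    over-represented ones get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (group_counts[g] / n) * (label_counts[y] / n)
        observed = joint_counts[(g, y)] / n
        weights.append(expected / observed)
    return weights


# Hypothetical training set: group B rarely has positive labels
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for g, y, w in zip(groups, labels, weights):
    print(g, y, round(w, 3))
```

After weighting, both groups have the same effective positive rate (50%), while the total weight still equals the number of examples.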

Transparency and Explainability

Transparency and explainability are crucial aspects of responsible AI. Understanding how AI models arrive at their decisions is essential for accountability and trust.

- Techniques for interpreting AI model outputs: Methods such as feature-importance analysis, which estimates how much each input contributes to a prediction, make a model's behavior easier to inspect and understand.

- Model explainability for accountability: Explainable AI (XAI) methods make it possible to trace a decision back to the inputs that drove it, which helps teams identify and address potential biases or errors.

- Clear documentation and communication: Documenting AI development processes and communicating findings transparently fosters trust and accountability.
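Permutation feature importance is one widely used, model-agnostic way to interpret a model: shuffle one input column and measure how much accuracy drops. The sketch below assumes a toy rule-based model that depends only on the first feature. The model, data, and function names are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild rows with only feature j shuffled
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model: predicts 1 when feature 0 exceeds 0.5, ignores feature 1
def model(row):
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [1 if r[0] > 0.5 else 0 for r in rows]
scores = permutation_importance(model, rows, labels)
print(scores)
```

Shuffling feature 0 roughly halves accuracy, while feature 1 scores exactly zero, exposing that the model ignores it. On a real system, the same check can reveal a model leaning on a proxy for a sensitive attribute.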

Continuous Monitoring and Evaluation

Continuous monitoring and evaluation of AI systems are essential for ensuring responsible use and preventing unintended consequences.

- Monitoring AI performance: Regularly assessing the performance of AI systems helps identify potential issues and ensures they continue to meet expectations.

- Feedback loops: Establishing feedback loops between AI systems and users allows for continuous improvement and adaptation.

- Addressing unexpected outcomes: Developing strategies for handling unexpected or unintended outcomes is crucial for responsible AI deployment.
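A feedback loop can be as simple as recording whether each prediction later turned out to be correct and raising an alert when recent accuracy falls below an agreed floor. The sketch below uses assumed values: the `AccuracyMonitor` class, the window of 50 outcomes, and the 0.85 floor are all hypothetical.

```python
from collections import deque


class AccuracyMonitor:
    """Hypothetical monitor tracking accuracy over the most recent `window` outcomes."""

    def __init__(self, window=50, floor=0.85):
        self.outcomes = deque(maxlen=window)  # True = prediction was correct
        self.floor = floor

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """True once the window is full and accuracy has dropped below the floor."""
        acc = self.rolling_accuracy()
        return (
            len(self.outcomes) == self.outcomes.maxlen
            and acc is not None
            and acc < self.floor
        )


monitor = AccuracyMonitor(window=50, floor=0.85)
# Hypothetical feedback: 45 correct predictions, then a run of 10 errors
for _ in range(45):
    monitor.record(1, 1)
for _ in range(10):
    monitor.record(1, 0)
print(monitor.rolling_accuracy(), monitor.needs_attention())  # 0.8 True
```

When the monitor raises a flag, the response (rolling back, retraining, or pausing automated decisions) remains a human call; the monitor only surfaces the signal.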

Conclusion

The myth of AI learning as an autonomous, self-improving process is misleading. AI systems are powerful tools, but their learning relies heavily on human input, oversight, and responsible development practices. By understanding the realities of AI learning and adopting the practical steps outlined in this guide, from data governance and bias mitigation to transparency and continuous monitoring, we can harness the power of AI while mitigating its potential risks. To keep learning about responsible AI development, explore resources on [link to relevant resource 1] and [link to relevant resource 2].

Featured Posts

-

Latest On Parker Meadows A Detroit Tigers Notebook Update

May 31, 2025

Latest On Parker Meadows A Detroit Tigers Notebook Update

May 31, 2025 -

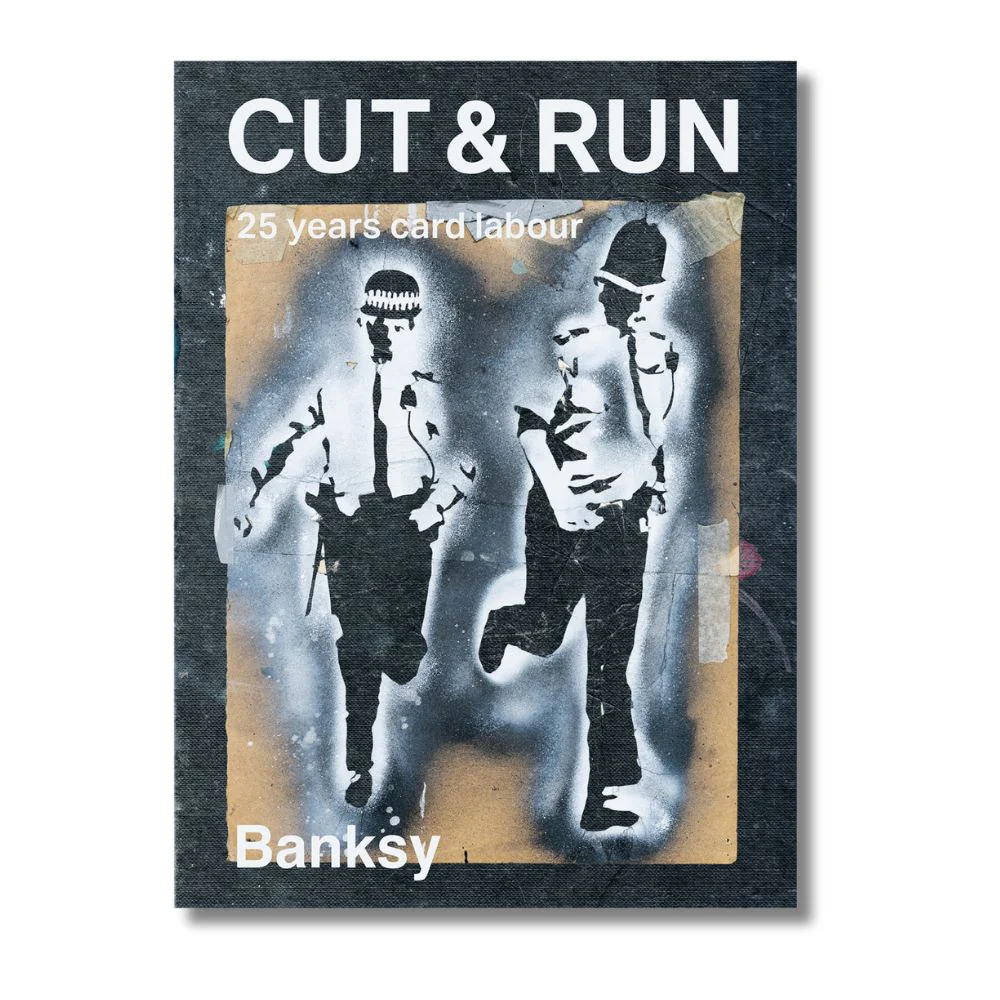

Banksy On Your Wall A Practical Guide

May 31, 2025

Banksy On Your Wall A Practical Guide

May 31, 2025 -

Menu De Emergencia 4 Recetas Deliciosas Sin Luz Ni Gas

May 31, 2025

Menu De Emergencia 4 Recetas Deliciosas Sin Luz Ni Gas

May 31, 2025 -

50 Yil Sonra Guelsen Bubikoglu Nun Tuerker Inanoglu Icin Yazdiklari

May 31, 2025

50 Yil Sonra Guelsen Bubikoglu Nun Tuerker Inanoglu Icin Yazdiklari

May 31, 2025 -

Ben Shelton Through To Munich Semifinals After Darderi Win

May 31, 2025

Ben Shelton Through To Munich Semifinals After Darderi Win

May 31, 2025