Federal Trade Commission Launches Probe Into OpenAI And ChatGPT

The FTC's Investigation: Focus and Concerns

The FTC's investigation into OpenAI and ChatGPT centers on potential violations of consumer protection law. The agency is reportedly examining whether OpenAI's practices around data privacy, algorithmic bias, and potentially misleading claims about ChatGPT's capabilities comply with existing regulations. The FTC's stated aim is to ensure that AI technologies are developed and used responsibly and that consumers are protected from harm.

Specific concerns detailed in various reports include:

- Data privacy violations: The FTC is investigating whether OpenAI's data collection and usage practices adequately safeguard user information. This includes concerns about the vast amounts of data used to train ChatGPT and the potential for unauthorized access or disclosure.

- Misleading consumers: The FTC is scrutinizing whether OpenAI has made misleading claims about ChatGPT's capabilities, accuracy, and reliability. One concern is that users may place undue trust in the chatbot's fluent, confident-sounding answers even when those answers are inaccurate.

- Algorithmic bias and discriminatory outputs: The FTC is reportedly examining whether ChatGPT exhibits biases that could lead to discriminatory or unfair outcomes. This is a significant concern given the potential for AI systems to perpetuate and amplify existing societal inequalities.

- Lack of sufficient safeguards: The agency is likely assessing whether OpenAI has implemented adequate safeguards to prevent the misuse of ChatGPT and similar AI technologies. This includes considering the potential for malicious actors to exploit the system for harmful purposes.

While neither the FTC nor OpenAI has publicly released detailed statements regarding the specifics of the investigation, the launch of the probe itself signals a significant shift in the regulatory approach towards generative AI.

Data Privacy Implications of ChatGPT and Similar AI Models

Large language models like ChatGPT are trained on massive datasets, often containing personal information and sensitive data scraped from the internet. This raises significant data privacy concerns. The sheer volume of data involved, coupled with the complexity of the models, makes it challenging to guarantee the protection of user privacy.

Potential risks to user privacy include:

- Data breaches and unauthorized access: The vast datasets used to train these models represent a potentially lucrative target for cyberattacks. A data breach could expose sensitive personal information.

- Inadvertent revelation of personal information: Because ChatGPT generates text from patterns learned in its training data, it may reproduce personal information contained in that data, compromising the privacy of the people it describes.

- Lack of transparency in data handling practices: Limited disclosure about how data is collected, used, and protected during the training and operation of large language models makes accountability and informed consent difficult.

Existing laws such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) provide some framework, but the unique challenges posed by AI models may require privacy rules written specifically for AI.

Algorithmic Bias and Fairness in AI Systems like ChatGPT

Algorithmic bias in AI systems, including ChatGPT, often stems from biases present in the training data. If that data reflects existing societal biases, the model is likely to reproduce them in its outputs and may even amplify them. The result can be unfair or discriminatory outcomes wherever such systems are used.

Examples of potential bias include:

- Gender, racial, or ethnic biases: ChatGPT's responses may reflect or reinforce societal stereotypes related to gender, race, or ethnicity, leading to unfair or discriminatory outcomes.

- Reinforcement of existing societal inequalities: Biased AI systems can entrench existing inequalities rather than merely reflect them.

- Difficulty in detecting and mitigating biases: Detecting and mitigating biases in complex AI systems is a challenging task, requiring sophisticated techniques and ongoing monitoring.

Addressing algorithmic bias requires a multi-faceted approach encompassing careful data curation, algorithmic design that mitigates bias, and ongoing monitoring and evaluation of AI systems' outputs. The ethical implications of biased AI cannot be ignored, demanding a commitment to fairness and equity in AI development.

The Future of AI Regulation in Light of the FTC's Probe

The FTC's investigation into OpenAI and ChatGPT is likely to have a significant impact on the future of AI regulation. It signals a growing recognition of the need for robust regulatory frameworks to address the ethical and societal challenges posed by advanced AI technologies.

Potential regulatory changes include:

- Increased scrutiny of data collection and usage practices: Stricter rules on how data is collected, stored, and used for AI development may follow.

- Mandatory audits of AI systems for bias and fairness: Regular audits to detect and mitigate bias may become mandatory for certain types of AI systems.

- Development of clearer guidelines for responsible AI development and deployment: More comprehensive guidelines and standards for ethical AI development and deployment are likely to emerge.

While increased regulation might impact innovation in the short term, it is crucial for building public trust and ensuring responsible AI development. A balance needs to be struck between fostering innovation and protecting consumers and society from potential harms.

Conclusion

The FTC's investigation into OpenAI and ChatGPT is a critical step in addressing the ethical and societal challenges posed by rapidly advancing AI technologies. It highlights the urgent need for robust regulation and responsible development practices to safeguard data privacy, mitigate algorithmic bias, and protect consumers, and it reflects growing awareness of the risks associated with powerful AI systems.

Call to Action: Stay informed about the ongoing FTC investigation and the evolving regulatory landscape surrounding OpenAI, ChatGPT, and the broader field of artificial intelligence. Understanding the implications of this probe is crucial for anyone involved in the development, deployment, or use of AI technologies.

Featured Posts

-

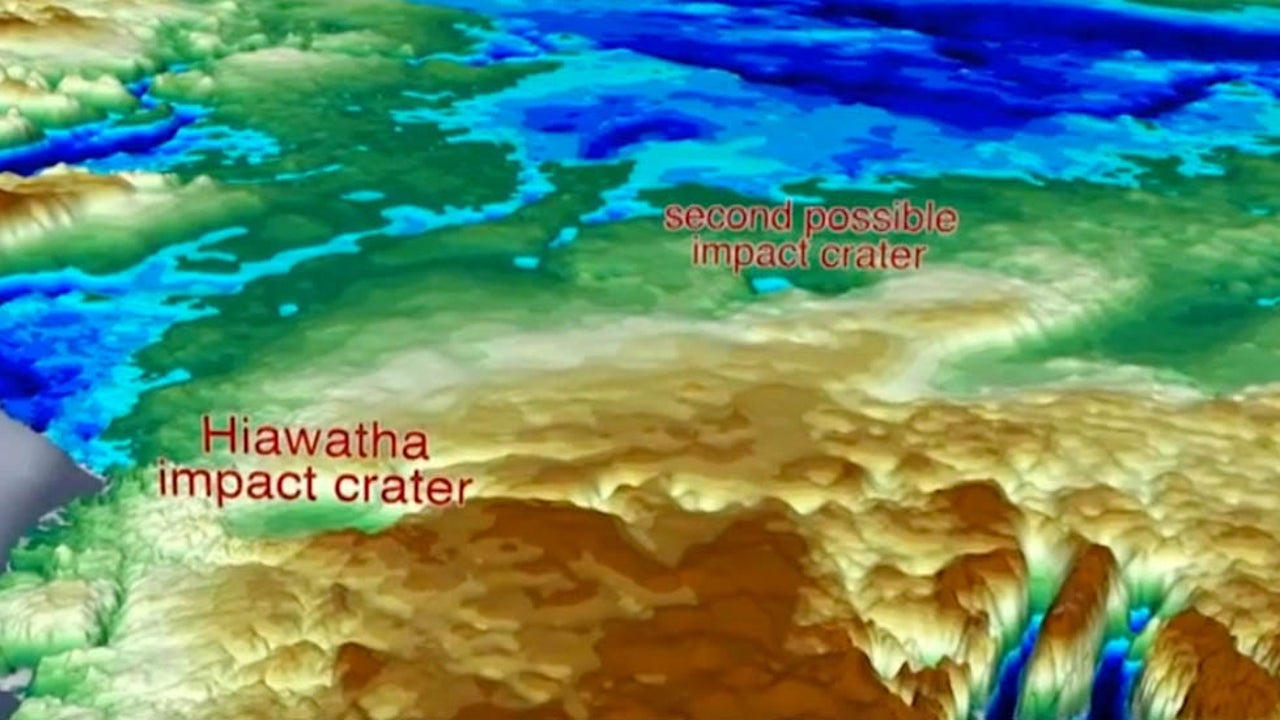

The U S Nuclear Base Under Greenlands Ice A Decades Long Secret

May 15, 2025

The U S Nuclear Base Under Greenlands Ice A Decades Long Secret

May 15, 2025 -

The Ongoing Battle Car Dealers Vs Ev Mandates

May 15, 2025

The Ongoing Battle Car Dealers Vs Ev Mandates

May 15, 2025 -

Microsoft Layoffs Reasons Impact And Employee Support

May 15, 2025

Microsoft Layoffs Reasons Impact And Employee Support

May 15, 2025 -

Predicting The Top Baby Names For 2024

May 15, 2025

Predicting The Top Baby Names For 2024

May 15, 2025 -

Analysis Key Provisions Of The Gops Comprehensive Bill

May 15, 2025

Analysis Key Provisions Of The Gops Comprehensive Bill

May 15, 2025

Latest Posts

-

Padres Vs Pirates Expert Mlb Predictions And Betting Picks

May 15, 2025

Padres Vs Pirates Expert Mlb Predictions And Betting Picks

May 15, 2025 -

Rockies Vs Padres Can Colorado End Their Losing Streak

May 15, 2025

Rockies Vs Padres Can Colorado End Their Losing Streak

May 15, 2025 -

Mlb Betting Padres Vs Pirates Predictions And Best Odds

May 15, 2025

Mlb Betting Padres Vs Pirates Predictions And Best Odds

May 15, 2025 -

Optimism Grows Warriors Await Butlers Game 3 Decision

May 15, 2025

Optimism Grows Warriors Await Butlers Game 3 Decision

May 15, 2025 -

Todays Mlb Game Padres Vs Pirates Prediction And Betting Odds

May 15, 2025

Todays Mlb Game Padres Vs Pirates Prediction And Betting Odds

May 15, 2025