FTC Investigates OpenAI's ChatGPT: What It Means For AI

The FTC's Concerns Regarding ChatGPT and AI's Ethical Implications

The FTC, charged with protecting consumers from unfair or deceptive practices, has turned its attention to the ethical implications of powerful AI technologies like ChatGPT. Its investigation likely stems from a combination of concerns: data privacy, algorithmic bias, and the potential spread of misinformation.

Data Privacy and Security

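ChatGPT, like other large language models (LLMs), is trained on vast amounts of text, which can include personal information, and it also processes whatever users type into it. The FTC's concerns likely center on whether OpenAI's data collection and usage practices are unfair or deceptive under Section 5 of the FTC Act, the agency's main consumer-protection authority. Privacy laws such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) raise similar questions, although they are enforced by other regulators rather than the FTC. Specific concerns might include: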

- Lack of transparency in data usage: Users may not fully understand how their data is being collected, used, and stored.

- Potential for unauthorized data sharing: Concerns exist regarding the potential for sharing user data with third parties without explicit consent.

- Vulnerabilities to data breaches: The massive datasets used to train LLMs represent a significant target for cyberattacks, raising concerns about the security of sensitive user information.

Bias and Discrimination in AI Models

A significant ethical challenge with AI models like ChatGPT is the potential for bias and discrimination. Biases present in the training data can lead to AI systems perpetuating and even amplifying harmful stereotypes. This can manifest in several ways:

- Examples of biased outputs from ChatGPT: Instances of ChatGPT generating discriminatory or offensive responses have been documented, highlighting the need for improved bias mitigation techniques.

- The challenge of mitigating bias in large language models: Identifying and removing bias from massive datasets is a complex and ongoing challenge.

- The need for fairer and more equitable AI algorithms: Developing AI algorithms that are fair, equitable, and free from bias is crucial for responsible AI development.

Misinformation and the Spread of Falsehoods

ChatGPT's ability to generate human-quality text raises concerns about the potential for misuse in spreading misinformation and falsehoods. This poses a significant threat to public discourse and democratic processes:

- The difficulty in distinguishing AI-generated content from human-generated content: The sophistication of LLMs makes it increasingly challenging to identify AI-generated misinformation.

- The potential impact on public discourse and democratic processes: The spread of AI-generated misinformation can undermine trust in institutions and influence public opinion.

- The role of AI developers in mitigating the spread of misinformation: AI developers have a responsibility to implement safeguards to prevent their technologies from being used to spread falsehoods.

OpenAI's Response and Future Actions

OpenAI has acknowledged the FTC's investigation and has publicly committed to responsible AI development. The company's response will likely involve significant changes to its practices, including:

- Increased transparency in data handling: OpenAI may implement more transparent data collection and usage policies to better inform users about how their data is being handled.

- Improved methods for bias detection and mitigation: The company will likely invest in more sophisticated techniques to identify and mitigate bias in its models.

- Implementation of stricter safeguards against the spread of misinformation: OpenAI may explore methods to detect and prevent the generation of false or misleading information by its models.

The Broader Implications for the AI Industry

The FTC's investigation into OpenAI's ChatGPT has far-reaching implications for the entire AI industry. It signifies a growing recognition of the need for responsible AI development and robust regulatory frameworks. The investigation's outcome could lead to:

- Increased scrutiny of AI algorithms: Other AI companies can expect increased scrutiny of their algorithms and data handling practices.

- Potential for stricter regulations on data privacy and security: The investigation may lead to stricter regulations concerning data privacy and security for AI systems.

- The development of industry-wide best practices for responsible AI: The investigation could spur the development of industry-wide ethical guidelines and best practices for responsible AI development.

Conclusion: Navigating the Future of AI After the FTC's ChatGPT Investigation

The FTC's investigation into OpenAI's ChatGPT highlights the need for responsible, ethically grounded AI development. Its outcome is likely to shape both the future of AI regulation and the industry's approach to data privacy, algorithmic bias, and the spread of misinformation. To navigate this evolving landscape, stay informed about developments in AI regulation, follow the FTC's investigation as it proceeds, and read further on responsible AI development.

Featured Posts

-

Mark Carney And Donald Trump White House Meeting Scheduled

May 05, 2025

Mark Carney And Donald Trump White House Meeting Scheduled

May 05, 2025 -

Electric Motor Innovation A Path To China Independent Supply Chains

May 05, 2025

Electric Motor Innovation A Path To China Independent Supply Chains

May 05, 2025 -

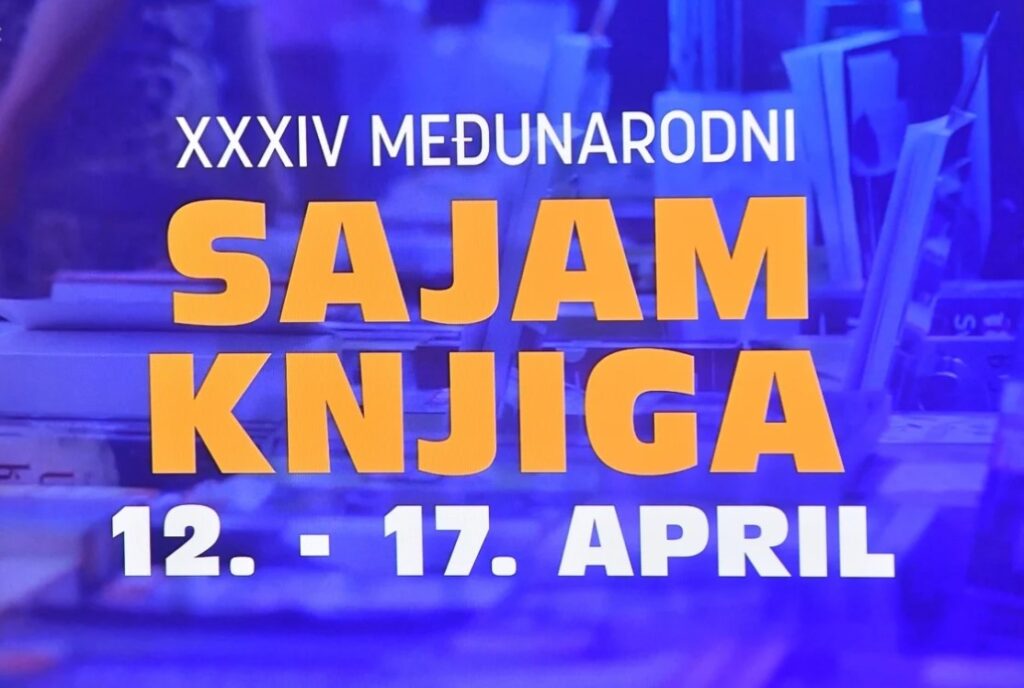

Gibonni Promovira Knjigu Na Sarajevskom Sajmu Knjiga

May 05, 2025

Gibonni Promovira Knjigu Na Sarajevskom Sajmu Knjiga

May 05, 2025 -

Tioga Downs 2025 A Look Ahead At The Upcoming Racing Season

May 05, 2025

Tioga Downs 2025 A Look Ahead At The Upcoming Racing Season

May 05, 2025 -

Ray Epps Sues Fox News For Defamation Details Of The Jan 6 Lawsuit

May 05, 2025

Ray Epps Sues Fox News For Defamation Details Of The Jan 6 Lawsuit

May 05, 2025