Mass Shootings and Algorithmic Radicalization: Examining Corporate Liability

The Role of Social Media Algorithms in Radicalization

Social media algorithms, designed to maximize user engagement, often inadvertently amplify extremist content and create echo chambers. These algorithms, typically built on complex machine-learning models, prioritize content that elicits strong emotional responses without regard to its accuracy or potential for harm. As a result, inflammatory, hateful, or violent material can be disproportionately boosted, reaching a far wider audience than it would through organic sharing alone.

- Targeted Advertising: These systems also enable highly targeted advertising, allowing extremist groups to use sophisticated profiling to reach vulnerable individuals predisposed to their ideologies. Such precise targeting can make radicalization efforts considerably more effective.

- Examples of Algorithmic Amplification:

  - Facebook's News Feed algorithm has been criticized for promoting divisive and misleading content, contributing to the spread of misinformation and of conspiracy theories linked to extremist groups.

  - YouTube's recommendation system has been criticized for leading users down a "rabbit hole" of increasingly extreme videos, exposing them to radicalizing content they might not otherwise have encountered.

- Studies Linking Algorithms to Radicalization: A number of studies have reported a correlation between greater exposure to extremist content through algorithmic recommendations and higher levels of radicalization among users. Correlation alone does not establish causation, and findings vary across platforms. Even so, this research highlights the role algorithms can play in shaping online environments conducive to violence.

- Filter Bubbles and Echo Chambers: Algorithms can create "filter bubbles" that limit users' exposure to diverse perspectives and reinforce their existing beliefs. Echo chambers, in which like-minded users reinforce one another's biases, compound this effect. Together, the two can significantly increase the risk of radicalization.

The Business Model and Its Contribution

The business models of major social media platforms depend fundamentally on user engagement. Metrics such as "time spent on platform" and "click-through rate" directly affect advertising revenue. Optimizing for engagement therefore often inadvertently favors content that is sensational, controversial, or even harmful. Because the algorithms are tuned to these metrics, they tend to amplify whatever performs well, regardless of whether it is factually accurate, promotes violence, or incites hatred.

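- Engagement Over Safety: Examples abound of companies prioritizing engagement metrics over the safety and well-being of their users. In 2021, for instance, former Facebook employee Frances Haugen disclosed internal company documents and testified before the U.S. Senate that the company had put profits ahead of user safety. Misinformation and hate speech, though harmful to society, often generate high engagement. That gives companies a commercial disincentive to curb them effectively.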

- Ethical Considerations: The ethical question is stark: Should maximizing profits come at the expense of public safety? The potential for algorithmic radicalization to contribute to real-world violence raises serious ethical concerns for these companies and demands a re-evaluation of their priorities.

- Ineffective Content Moderation: Current content moderation strategies often cannot keep pace with the volume and sophistication of extremist content. The sheer scale of user-generated content makes comprehensive manual review impractical. Automated systems, meanwhile, frequently fail to identify and remove harmful material reliably.

Legal and Ethical Frameworks for Corporate Accountability

Existing legal frameworks surrounding online hate speech and incitement to violence are often inadequate to address the complexities of algorithmic radicalization. Laws vary significantly across jurisdictions, and the transnational nature of online platforms makes enforcement challenging.

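- Current Laws and Limitations: Existing laws often focus on individual actors rather than on the platforms that facilitate the spread of harmful content. In the United States, for example, Section 230 of the Communications Decency Act broadly shields online platforms from liability for content posted by their users. Even where liability is possible, plaintiffs must prove direct causation between a company's algorithm and a specific act of violence.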

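- Potential New Legislation: There is growing momentum behind new legislation and regulatory frameworks that specifically address the role of algorithms in fostering extremist ideologies. Proposals include greater transparency in algorithmic processes and stronger content moderation obligations. The European Union's Digital Services Act, for instance, requires online platforms to explain the main parameters of their recommender systems. It also obliges very large platforms to assess and mitigate systemic risks arising from their services.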

- Ethical Considerations Beyond Legal Frameworks: Even beyond existing or future legislation, tech companies bear a significant ethical responsibility to mitigate the risks associated with algorithmic radicalization. Proactive measures, including algorithmic redesign and improved content moderation, are crucial.

Case Studies and Examples

Several real-world examples highlight the potential link between algorithmic radicalization and mass shootings. While establishing direct causation remains complex, the patterns are concerning.

- Case Study 1: [Insert a detailed case study with links to credible sources, analyzing the role of social media algorithms in a specific mass shooting].

- Case Study 2: [Insert a second detailed case study, again focusing on algorithmic influence and providing links to reliable sources].

- Analysis of Algorithmic Roles: Understanding how these systems may contribute to violence requires careful analysis of each case. That means examining the platforms the perpetrator used, the type of content they engaged with, and the role algorithms played in steering them toward extremist material. The absence of robust accountability in these instances underscores the urgent need for corporate responsibility.

Conclusion: A Call for Action

The evidence strongly suggests a connection between algorithmic radicalization, corporate practices, and the tragic rise in mass shootings. The prioritization of engagement over safety by social media companies has inadvertently created environments conducive to the spread of extremist ideologies. This necessitates increased corporate accountability and stronger regulatory frameworks.

We must demand stricter regulation of tech companies to help prevent future tragedies linked to algorithmic radicalization. Contact your representatives. Advocate for policy changes that hold tech companies responsible for the content their algorithms amplify. Support research into algorithmic transparency and ethical AI development. Understanding the intricate interplay between technology, extremism, and violence is critical to addressing this urgent challenge.

For further reading and resources on mass shootings and algorithmic radicalization, please visit [insert relevant links to credible resources].

Featured Posts

-

Four Exclusive Gorillaz Live Shows This September Everything You Need To Know

May 30, 2025

Four Exclusive Gorillaz Live Shows This September Everything You Need To Know

May 30, 2025 -

The Role Of Algorithms In Mass Shooter Radicalization A Legal And Ethical Inquiry

May 30, 2025

The Role Of Algorithms In Mass Shooter Radicalization A Legal And Ethical Inquiry

May 30, 2025 -

Un Hearing Emotional Testimony On Gaza Childrens Suffering

May 30, 2025

Un Hearing Emotional Testimony On Gaza Childrens Suffering

May 30, 2025 -

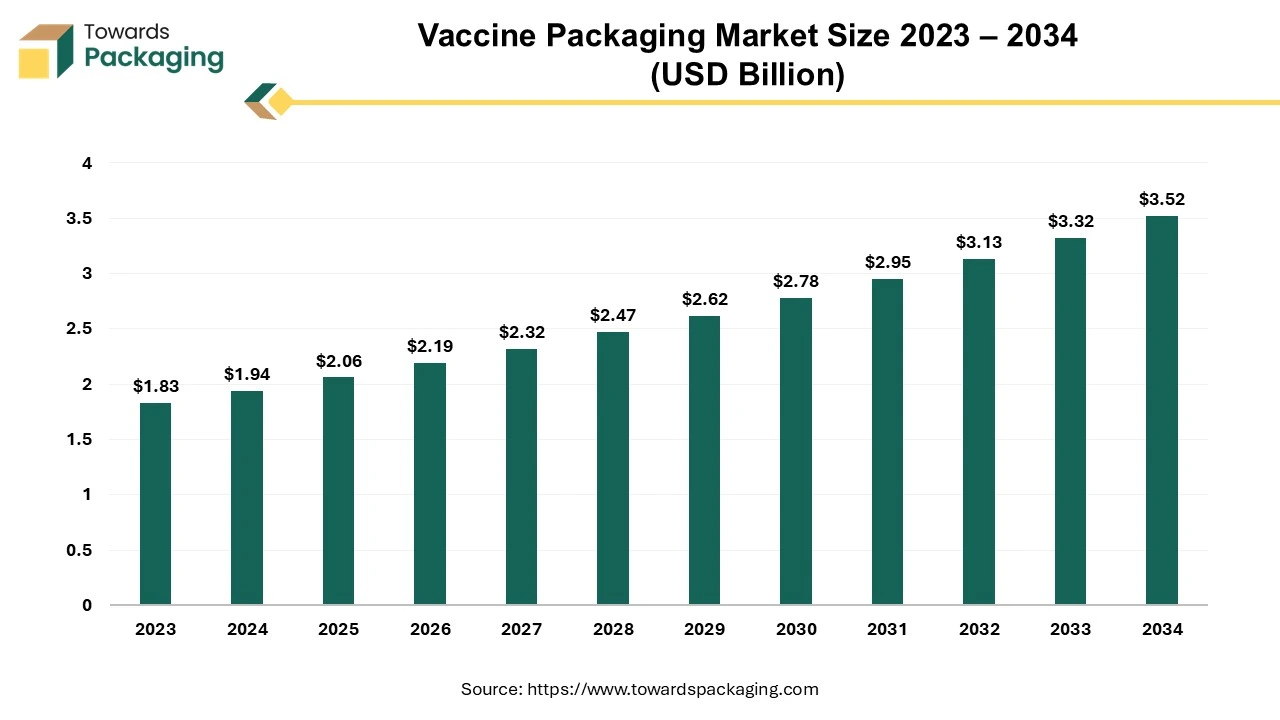

Vaccine Packaging Market A Rapidly Expanding Industry

May 30, 2025

Vaccine Packaging Market A Rapidly Expanding Industry

May 30, 2025 -

Ufc Heavyweight Title Fight Pimbletts Surprise Prediction For Jones Vs Aspinall

May 30, 2025

Ufc Heavyweight Title Fight Pimbletts Surprise Prediction For Jones Vs Aspinall

May 30, 2025