Nonprofit Control Ensured For OpenAI: Implications And Analysis

The Original Vision: OpenAI as a Nonprofit

Founded in 2015 as a nonprofit research organization, OpenAI was a bold attempt to steer AI development away from purely profit-driven motives. Its structure aimed to prevent any single entity from dominating its direction and prioritized societal benefit over profit maximization, a key differentiator from many for-profit AI companies.

The benefits of this nonprofit structure were manifold:

- Focus on societal benefit: The primary goal was to advance AI in a way that benefits humanity, not just shareholders. This meant exploring ethical considerations and mitigating potential risks proactively.

- Reduced risk of biased or malicious AI: A nonprofit structure, theoretically, minimized the incentives for developing biased or harmful AI applications driven by commercial pressures.

- Increased transparency and accountability: A commitment to transparency and openness was integral to the original vision, promoting public scrutiny and accountability in AI development.

Key figures like Elon Musk, Sam Altman, and others played crucial roles in establishing this groundbreaking structure, emphasizing the importance of responsible AI development from the outset. The early emphasis was on open-source research and collaboration, furthering the goals of a shared, ethical advancement of AI.

Challenges to Maintaining Nonprofit Control

Despite the noble intentions, maintaining nonprofit control of OpenAI has proven challenging. The inherent tension between its nonprofit goals and the need for substantial funding has created significant obstacles.

- Talent Acquisition and Retention: Attracting and retaining top AI talent in a highly competitive market requires significant financial resources, potentially conflicting with a purely nonprofit model.

- Resource-Intensive Research: AI research demands vast computational resources and substantial infrastructure investments, costs that can strain a nonprofit's budget.

- Investor Pressure: The need for substantial funding opens the door to investor pressure, potentially compromising the original nonprofit vision in favor of maximizing returns.

The 2019 shift from a pure nonprofit to a capped-profit structure exemplifies this tension. While it allows for greater fundraising capacity, it also introduces the potential for diluted nonprofit control and a shift in priorities. The risks to the original vision include:

- Profit over societal good: The pursuit of profit could lead to prioritizing commercially viable projects over those with the greatest societal benefit.

- Increased commercial influence: Significant investment from for-profit entities could lead to a greater focus on commercial applications and a decline in research focused on public good.

- Reduced transparency and accountability: A shift towards a more commercially driven model might compromise transparency and public accountability, hindering oversight.

Mechanisms for Ensuring Ongoing Nonprofit Influence

To mitigate these risks, OpenAI has implemented several mechanisms aimed at maintaining nonprofit oversight and influence:

- Board of Directors: The composition and authority of the board of directors are crucial for ensuring that the nonprofit mission remains central.

- Governance Structures: Strong governance structures, including clear accountability mechanisms and ethical guidelines, are essential to guide decision-making.

- Legal Agreements: Specific legal agreements and contracts are in place to safeguard the original nonprofit mission and protect against undue commercial influence.

However, the effectiveness of these mechanisms remains a subject of ongoing debate, especially in light of the company's evolution. Alternative structures, such as stronger independent oversight bodies or more robust public accountability measures, could be explored to further strengthen nonprofit control of OpenAI.

Broader Implications for AI Governance

The organizational structure of OpenAI and its challenges have significant implications for the broader governance of AI technologies. The experience highlights the critical role of nonprofit organizations in shaping the ethical and societal implications of AI.

- Responsible AI Development: OpenAI's model, despite its challenges, serves as a valuable case study for other organizations aiming to develop AI responsibly and ethically.

- Future of AI Research: The long-term impact of OpenAI's approach on the future of AI research and development is still unfolding and warrants close attention.

- Public-Private Partnerships: The challenges faced by OpenAI underscore the need for innovative models of public-private partnerships to fund and govern responsible AI development.

Conclusion

Maintaining nonprofit control of OpenAI presents a significant challenge, highlighting the inherent tension between the need for substantial funding and the commitment to a mission driven by societal benefit. While mechanisms are in place to ensure ongoing nonprofit influence, their effectiveness requires continued monitoring and, where needed, improvement. Upholding the original vision of OpenAI as a force for societal good is crucial, not only for its future but also for shaping the responsible development of AI globally. Further discussion and research are needed on nonprofit control of OpenAI, responsible AI development, and the effectiveness of various governance models. Participate in relevant discussions or contribute to organizations working towards responsible AI; the future of AI hinges on our collective commitment to its ethical and beneficial application.

Featured Posts

-

The Young And The Restless February 11th Recap Nicks Confrontation With Victor

May 07, 2025

The Young And The Restless February 11th Recap Nicks Confrontation With Victor

May 07, 2025 -

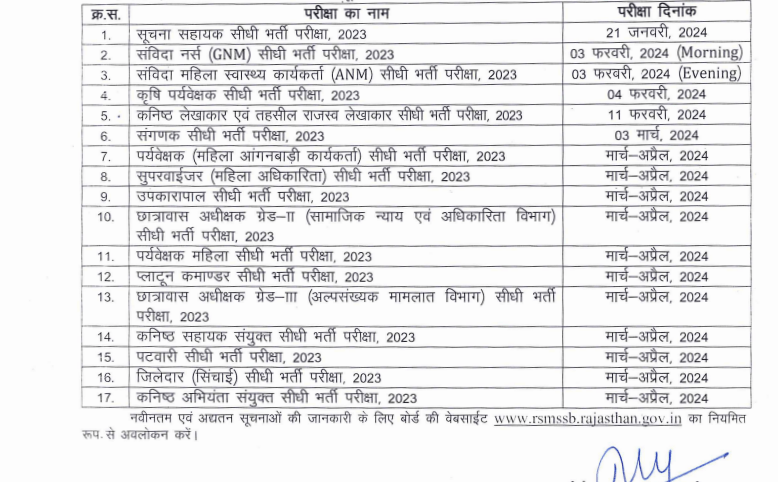

Check The Latest Rsmssb Exam Calendar For 2025 26

May 07, 2025

Check The Latest Rsmssb Exam Calendar For 2025 26

May 07, 2025 -

Continued Success For Lewis Capaldis Latest Album

May 07, 2025

Continued Success For Lewis Capaldis Latest Album

May 07, 2025 -

Anthony Edwards And Barack Obama A Conversation On Leadership

May 07, 2025

Anthony Edwards And Barack Obama A Conversation On Leadership

May 07, 2025 -

John Wick 5 Should We Even Want Another Sequel

May 07, 2025

John Wick 5 Should We Even Want Another Sequel

May 07, 2025