Responsible AI: Acknowledging And Addressing AI's Learning Constraints

Data Bias and its Impact on AI Learning

AI models learn from data, and if that data is biased, the resulting AI system will likely be biased as well. This is a critical concern in the pursuit of Responsible AI.

Sources of Bias in Training Data

Datasets that reflect existing societal inequalities related to gender, race, socioeconomic status, and other factors lead to AI outputs that perpetuate, and can even amplify, those inequalities. For example, facial recognition systems trained primarily on images of lighter-skinned faces tend to have higher error rates for individuals with darker skin tones. Common sources of bias include:

- Insufficient data representation: Lack of diverse representation in the training data leads to models that generalize poorly to underrepresented groups.

- Historical biases embedded in data: Data often reflects historical societal biases, which are then learned and reproduced by the AI.

- Sampling errors: Non-random or biased sampling methods can introduce systematic errors in the training data.
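As a rough illustration of checking for insufficient representation, the sketch below computes each group's share of a dataset and flags groups that fall under a chosen threshold. The function names, the `skin_tone` field, and the 30% threshold are all assumptions made for this example, not part of any standard tool.

```python
from collections import Counter

def group_shares(records, attribute):
    """Return each group's share of the dataset for one attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def underrepresented(shares, min_share):
    """List groups whose share falls below a chosen threshold."""
    return sorted(group for group, share in shares.items() if share < min_share)

# Toy data: the "skin_tone" field and its values are illustrative only.
records = [{"skin_tone": "lighter"}] * 8 + [{"skin_tone": "darker"}] * 2

shares = group_shares(records, "skin_tone")
flagged = underrepresented(shares, min_share=0.3)
print(shares, flagged)
```

A check like this only reveals imbalance in attributes that were recorded; it says nothing about historical bias in the labels themselves.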

Mitigating Data Bias

Addressing data bias requires a multi-faceted approach. Techniques for identifying and reducing bias include:

- Data augmentation: Increasing the representation of underrepresented groups in the dataset, for example by oversampling existing examples or generating synthetic ones.

- Careful data collection: Employing rigorous data collection methods to ensure diversity and reduce bias from the outset.

- Data preprocessing techniques: Applying algorithms to identify and mitigate bias in existing datasets.

- Algorithmic fairness approaches: Developing algorithms that are explicitly designed to be fair and unbiased.

- Ongoing monitoring and evaluation: Continuously assessing the performance of AI systems across different demographic groups to identify and address persistent biases. This is crucial for Responsible AI development.
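One simple preprocessing technique of the kind listed above is reweighting: giving examples from smaller groups larger training weights so that every group contributes equally in total. The sketch below is a minimal version under that assumption; `inverse_frequency_weights` is a hypothetical helper name, and the group labels are toy data.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Weight each example so every group contributes equal total weight."""
    counts = Counter(groups)
    n_groups = len(counts)
    n_total = len(groups)
    # Each group's weights sum to n_total / n_groups.
    return [n_total / (n_groups * counts[g]) for g in groups]

# Toy group labels: six examples from group "a", two from group "b".
groups = ["a"] * 6 + ["b"] * 2
weights = inverse_frequency_weights(groups)
print(weights)
```

Weights like these would typically be passed to a training routine that accepts per-example weights. Reweighting balances group influence but does not correct biased labels.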

The Limits of Generalization in AI Models

Even with unbiased data, AI models have inherent limitations in their ability to generalize to new, unseen situations.

Overfitting and Underfitting

- Overfitting: Occurs when a model learns the training data too well, including its noise and outliers, resulting in poor performance on new data. Imagine a student memorizing answers instead of understanding concepts.

- Underfitting: Happens when a model is too simplistic to capture the underlying patterns in the data, leading to poor performance on both training and new data. This is like a student not learning enough material to do well on a test.

To mitigate these issues:

- Regularization techniques: Methods to prevent overfitting by penalizing overly complex models.

- Cross-validation: Techniques to evaluate model performance on unseen data and estimate generalization ability.

- Appropriate model complexity: Choosing a model that is complex enough to capture patterns but not so complex as to overfit.
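To make regularization and cross-validation concrete, here is a small sketch that fits a one-parameter ridge regression (an L2 penalty on the slope) and scores several penalty strengths with k-fold cross-validation. The data, the candidate penalty values, and all function names are assumptions chosen for illustration.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form slope for y = w * x with an L2 penalty lam * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def mse(w, xs, ys):
    """Mean squared error of the slope-only model."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

def k_fold_indices(n, k):
    """Yield (train, validation) index lists; fold f holds indices i with i % k == f."""
    for fold in range(k):
        val = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        yield train, val

def cross_val_mse(xs, ys, lam, k=4):
    """Average validation MSE over k folds for one penalty strength."""
    scores = []
    for train, val in k_fold_indices(len(xs), k):
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        scores.append(mse(w, [xs[i] for i in val], [ys[i] for i in val]))
    return sum(scores) / len(scores)

# Toy data that roughly follows y = 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]

cv_scores = {lam: cross_val_mse(xs, ys, lam) for lam in (0.0, 1.0, 100.0)}
best_lam = min(cv_scores, key=cv_scores.get)
print(cv_scores, best_lam)
```

A very large penalty shrinks the slope so far that the model underfits, which shows up as a high validation error. Choosing the penalty by validation score is one way to select appropriate model complexity.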

The Problem of Out-of-Distribution Data

AI models struggle when they encounter data that differs significantly from their training data (out-of-distribution data). This is a major challenge for deploying AI in real-world settings, where unexpected situations are common. Approaches to this problem include:

- Robustness testing: Rigorous testing to evaluate model performance on a wide range of inputs, including those outside the training distribution.

- Domain adaptation techniques: Methods to adapt a model trained on one dataset to perform well on a different but related dataset.

- Continuous learning: Enabling AI systems to learn and adapt from new data continuously, improving their robustness and generalization capabilities over time.
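As one very simple form of the robustness checks described above, the sketch below flags an input as out-of-distribution when any feature lies more than a set number of standard deviations from its training mean. This is only a baseline heuristic, not a complete detector; the threshold of 3.0, the helper names, and the toy rows are assumptions, and the code assumes every feature varies in the training data.

```python
from statistics import mean, stdev

def fit_feature_stats(rows):
    """Per-feature mean and sample standard deviation of the training rows."""
    columns = list(zip(*rows))
    return [(mean(col), stdev(col)) for col in columns]

def max_abs_z(row, stats):
    """Largest absolute z-score of any feature in the row."""
    # Assumes sigma > 0 for every feature (no constant columns).
    return max(abs(x - mu) / sigma for x, (mu, sigma) in zip(row, stats))

def is_out_of_distribution(row, stats, threshold=3.0):
    """Flag rows with at least one feature far from the training mean."""
    return max_abs_z(row, stats) > threshold

# Toy training rows with two numeric features.
train_rows = [(1.0, 10.0), (2.0, 12.0), (3.0, 11.0), (4.0, 13.0), (5.0, 9.0)]
stats = fit_feature_stats(train_rows)

print(is_out_of_distribution((3.0, 11.0), stats))
print(is_out_of_distribution((50.0, 11.0), stats))
```

A per-feature check like this misses inputs that are unusual only in combination, so it is best read as a first filter before a prediction is trusted.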

Interpretability and Explainability in AI

Many sophisticated AI models, particularly deep learning models, are referred to as "black boxes" because their decision-making processes are opaque and difficult to understand.

The "Black Box" Problem

The lack of transparency in complex AI models poses significant ethical and practical challenges:

- Lack of transparency: It is difficult to understand how the model arrives at its decisions.

- Difficulty in debugging: Identifying and correcting errors in complex models is challenging.

- Potential for unintended consequences: Without insight into the model's internal workings, unforeseen biases or errors can go undetected and cause significant harm.

Methods for Enhancing Explainability

Several techniques are being developed to enhance the explainability of AI models:

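- LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations): Methods to explain individual predictions. LIME fits a simple, interpretable surrogate model around a single prediction, while SHAP attributes a prediction to the input features using Shapley values from cooperative game theory.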

- Feature importance analysis: Identifying the features that most strongly influence the model's predictions.

- Model visualization techniques: Creating visualizations to help understand the internal workings of the model.

- Rule-based explanation methods: Extracting simple rules from the model to explain its decisions.

- Human-in-the-loop systems: Incorporating human oversight and feedback into the AI decision-making process.
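Feature importance analysis can be illustrated with permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below is a minimal version under stated assumptions; `predict` is a hypothetical stand-in for a trained model, and the data, seed, and repeat count are arbitrary.

```python
import random

def mse(predict, rows, targets):
    """Mean squared error of a prediction function on a dataset."""
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(predict, rows, targets, n_repeats=5, seed=0):
    """Mean increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(predict, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(predict, shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Hypothetical stand-in for a trained model: it uses feature 0 and ignores feature 1.
def predict(row):
    return 2.0 * row[0]

rows = [(1.0, 5.0), (2.0, 3.0), (3.0, 8.0), (4.0, 1.0), (5.0, 7.0), (6.0, 2.0)]
targets = [2.0 * r[0] for r in rows]

importances = permutation_importance(predict, rows, targets)
print(importances)
```

Because the stand-in model ignores feature 1, shuffling that column leaves the error unchanged, while shuffling feature 0 raises it. The method treats the model as a black box, so it applies to any model that can be called for predictions.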

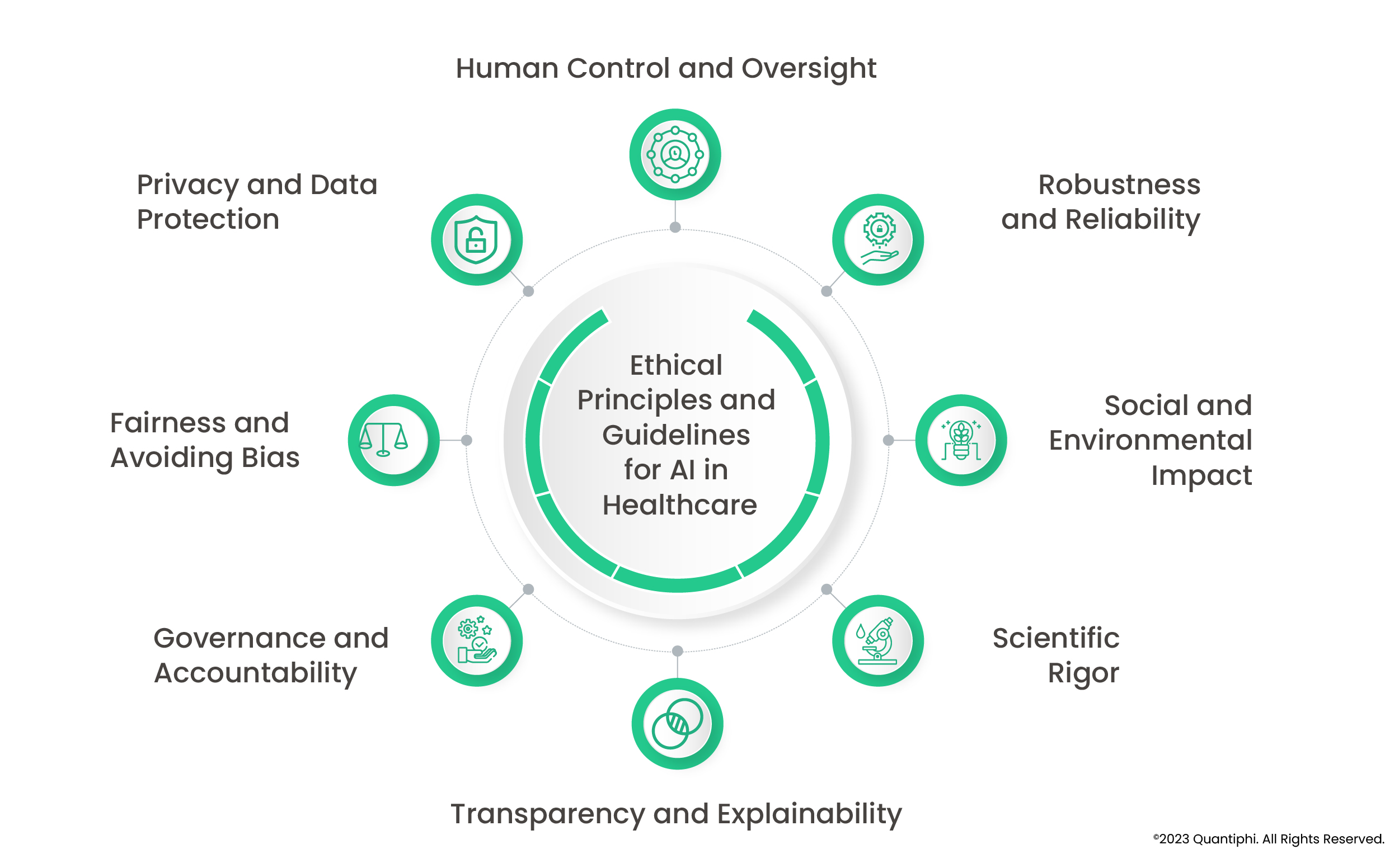

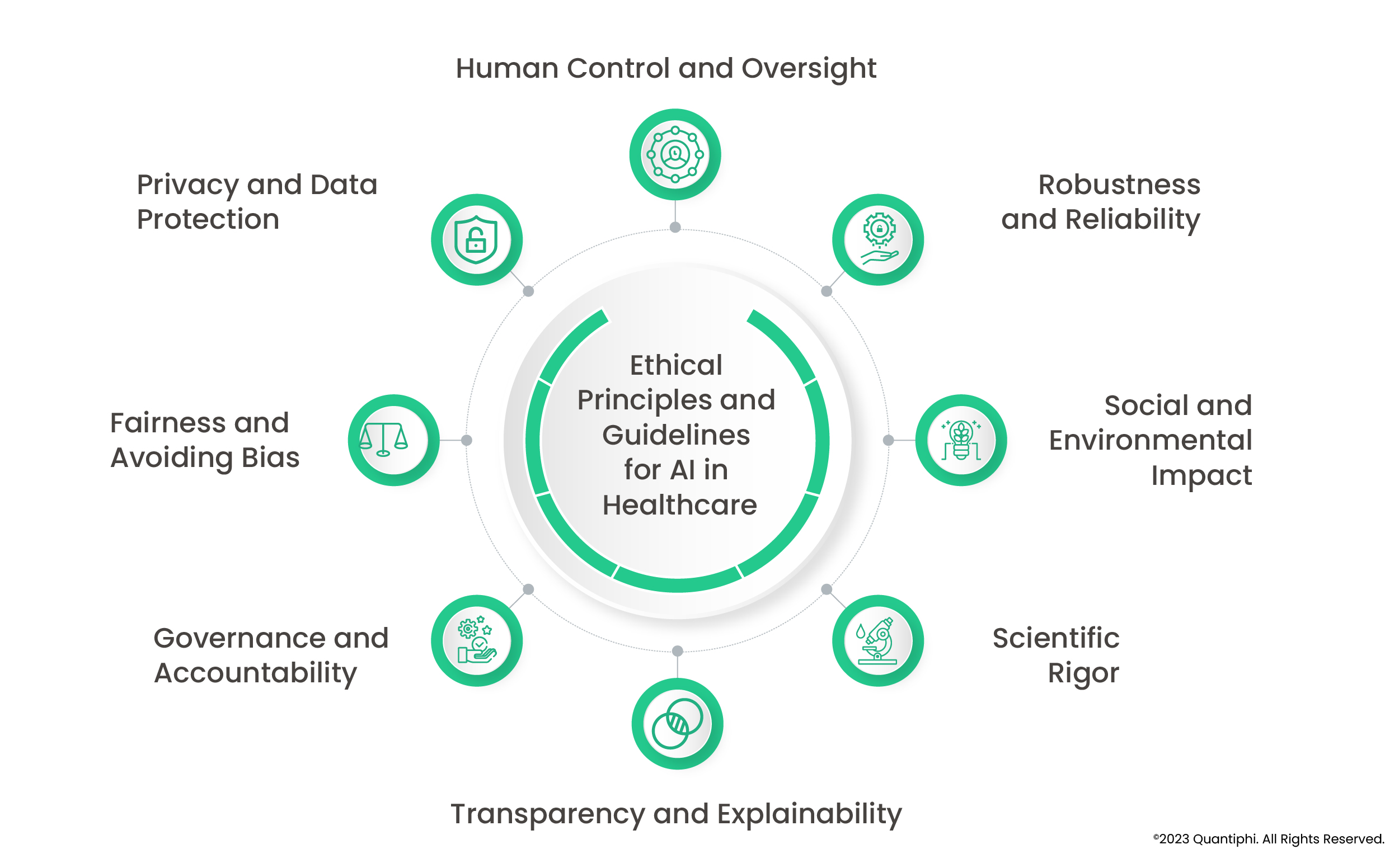

Addressing the Ethical Concerns of Responsible AI

Developing Responsible AI requires careful consideration of the ethical implications of these technologies.

Accountability and Responsibility

- Establishing clear lines of accountability: Determining who is responsible when AI systems make mistakes or cause harm.

- Designing for safety and security: Building AI systems with robust safety and security mechanisms to prevent unintended consequences.

- Ethical review boards: Implementing processes for ethical review and oversight of AI development and deployment.

Fairness and Bias Mitigation

Fairness and equity must be central to AI development and deployment. This requires:

- Regular audits: Periodic assessments of AI systems to identify and address biases.

- Bias detection tools: Developing tools to automatically detect and measure bias in AI systems.

- Continuous improvement processes: Implementing mechanisms for ongoing monitoring and improvement of AI systems to ensure fairness and reduce bias.
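A regular audit of the kind described above might start by comparing simple metrics across groups. The sketch below computes per-group accuracy and positive-prediction rate, plus the gap in positive rates (often called the demographic parity difference). The group labels, predictions, and function names are toy assumptions, and a real audit would use more than these two metrics.

```python
from collections import defaultdict

def per_group_rates(groups, y_true, y_pred):
    """Accuracy and positive-prediction rate for each group."""
    tallies = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for g, t, p in zip(groups, y_true, y_pred):
        tallies[g]["n"] += 1
        tallies[g]["correct"] += int(t == p)
        tallies[g]["positive"] += int(p == 1)
    return {
        g: {"accuracy": c["correct"] / c["n"], "positive_rate": c["positive"] / c["n"]}
        for g, c in tallies.items()
    }

def demographic_parity_gap(rates):
    """Largest difference in positive-prediction rate between any two groups."""
    positive_rates = [r["positive_rate"] for r in rates.values()]
    return max(positive_rates) - min(positive_rates)

# Toy audit data: binary labels and predictions for two groups.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]

rates = per_group_rates(groups, y_true, y_pred)
gap = demographic_parity_gap(rates)
print(rates, gap)
```

In this toy data both groups have the same accuracy, yet group "a" receives positive predictions far more often. That is why audits usually track several metrics rather than accuracy alone.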

Conclusion

Developing truly Responsible AI requires a proactive approach to understanding and addressing the inherent learning constraints of AI systems. By acknowledging issues like data bias, limited generalization, and the "black box" problem, and by implementing mitigation strategies focused on explainability, ethical considerations, and ongoing monitoring, we can build AI systems that are not only powerful but also safe, fair, and trustworthy. Let's work together to ensure the future of AI is shaped by principles of Responsible AI.
