The Dangers of AI Therapy in a Surveillance Society

Data Privacy and Security Risks in AI Therapy

AI therapy platforms collect vast amounts of sensitive personal data, including intimate details about users' mental health, relationships, and life experiences. This data presents a tempting target for malicious actors, leading to several significant risks.

Data breaches and unauthorized access

The vulnerability of this sensitive information is a major concern. Like any digital system, AI therapy platforms are susceptible to data breaches.

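- Examples of past data breaches: Healthcare providers and other organizations holding sensitive personal information have suffered numerous breaches, and the consequences can be devastating. In 2020, for instance, patients of the Finnish psychotherapy provider Vastaamo received extortion demands from an attacker who had stolen their therapy records and threatened to publish them.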

- Potential consequences of a breach in AI therapy: A breach could lead to identity theft, blackmail, reputational damage, and the exploitation of vulnerable individuals.

- Lack of robust data encryption and security protocols: Unfortunately, not all AI therapy platforms employ robust data encryption and security protocols, increasing the risk of unauthorized access.

Data usage and potential for misuse

Even without a breach, the potential for misuse of collected data is significant. Data gathered during AI therapy sessions could be used for purposes far beyond its intended therapeutic application.

- Examples of how data can be used for manipulative purposes: Data could be used to create detailed psychological profiles, enabling targeted advertising, manipulative marketing strategies, or even social engineering.

- Lack of transparency in data usage policies: Many AI therapy platforms lack transparency regarding how user data is collected, stored, and utilized, raising serious ethical concerns.

- The potential for algorithmic bias leading to unfair or discriminatory outcomes: Algorithms trained on biased datasets can perpetuate and amplify existing societal inequalities.

Lack of regulatory oversight

The rapid development of AI therapy has outpaced the establishment of robust regulatory frameworks. This lack of clear guidelines and oversight creates a significant vulnerability.

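- Examples of weak or absent regulations in different countries: The regulatory landscape varies widely across jurisdictions, and some countries have minimal or no rules governing the use of AI in mental health. In the United States, for example, HIPAA applies to healthcare providers, health plans, healthcare clearinghouses, and their business associates, so many direct-to-consumer mental health apps fall outside its protections.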

- Calls for stricter legislation: There is an urgent need for stricter legislation to protect users' data and ensure ethical AI therapy practices.

- The need for independent audits of AI therapy platforms: Regular, independent audits of AI therapy platforms are crucial to assess their security measures, data usage policies, and algorithmic fairness.

The Erosion of Therapeutic Trust and the Doctor-Patient Relationship

The shift towards AI-driven mental health solutions raises serious questions about the core elements of effective therapy: trust, empathy, and the human connection between therapist and patient.

Algorithmic bias and impersonal interactions

AI systems rely on algorithms and datasets, which makes them susceptible to biases that can lead to flawed diagnoses and inappropriate treatment plans.

- Examples of biases embedded in datasets: Datasets used to train AI algorithms may reflect existing societal biases, leading to discriminatory outcomes for certain groups.

- Difficulties in addressing unique human situations with a standardized algorithmic approach: AI struggles to account for the nuances of individual experiences and complex emotional states.

- Lack of empathy and human connection in AI therapy: AI lacks the capacity for genuine empathy and the ability to form the trusting therapeutic relationship crucial for successful treatment.

Reduced human interaction and diminished empathy

Replacing human therapists with AI risks diminishing the vital human element of therapy.

- The importance of human connection in mental health treatment: The therapeutic relationship is built on trust, empathy, and understanding—qualities that are challenging for AI to replicate.

- The limitations of AI in understanding nuanced emotional states: AI struggles to interpret subtle cues and understand the complexities of human emotions.

- The potential for patients to feel isolated and misunderstood: Over-reliance on AI may leave patients feeling isolated, misunderstood, and even more vulnerable.

Dependence and deskilling of human therapists

Over-reliance on AI therapy could lead to a decline in the skills and expertise of human mental health professionals.

- The risk of deskilling due to over-dependence on AI tools: Reduced human interaction and oversight may diminish the skills and critical thinking abilities of therapists.

- The importance of ongoing human supervision and intervention: Human clinicians need to oversee AI tools and be ready to step in, especially during a crisis, to ensure patient safety and effective treatment.

- The need for a balanced approach integrating AI and human expertise: AI can be a valuable tool to augment, not replace, human therapists.

The Surveillance State and AI Therapy

The integration of AI therapy into a surveillance society raises profound ethical and societal concerns.

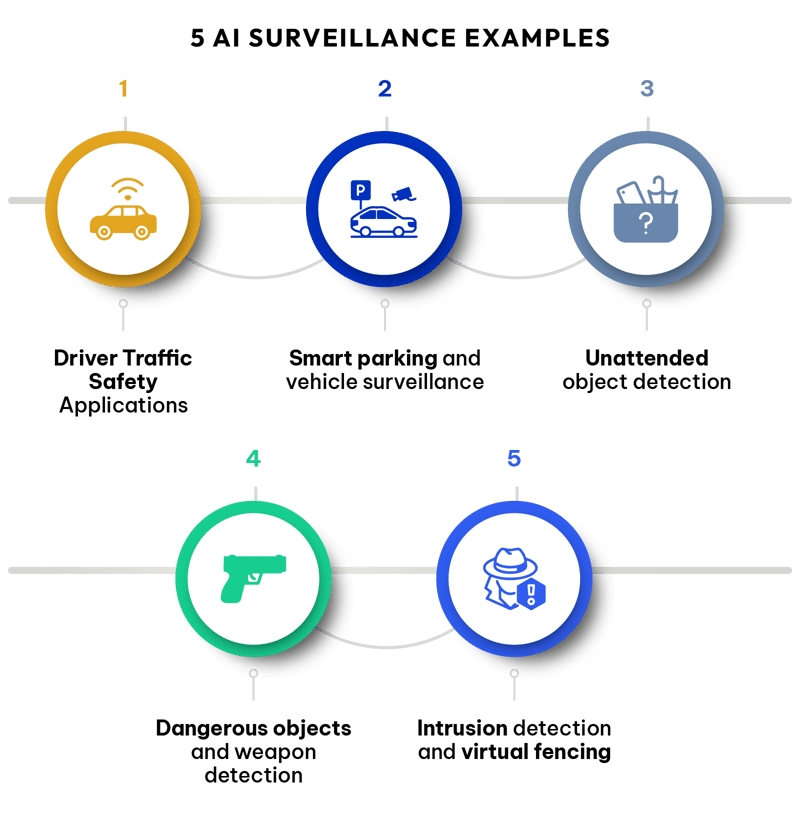

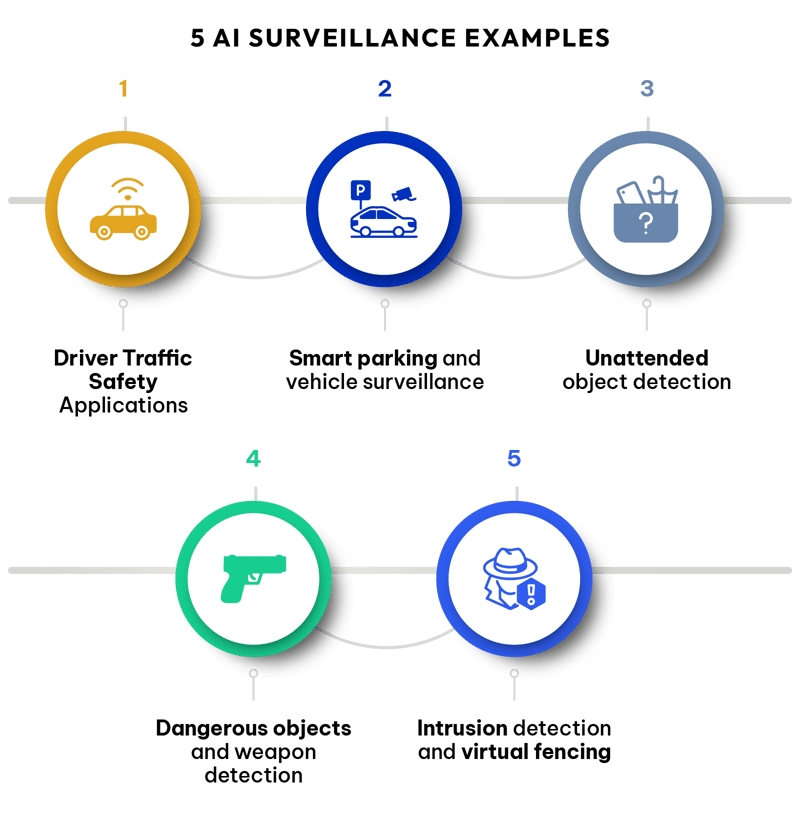

Integration with other surveillance systems

AI therapy data could potentially be accessed by government agencies or law enforcement, blurring the lines between healthcare and surveillance.

- Potential for integration with law enforcement databases: Data from AI therapy platforms could be used for predictive policing or other law enforcement purposes, raising serious privacy concerns.

- Concerns about national security applications: Governments may seek to leverage AI therapy data for national security purposes, leading to widespread surveillance and potential abuses of power.

- The risk of profiling and discrimination based on mental health information: Data from AI therapy could be used to profile and discriminate against individuals based on their mental health status.

Manipulation and control through data

Data collected from AI therapy sessions could be used to influence or control individuals' thoughts and behaviors.

- Examples of manipulative advertising techniques: Detailed psychological profiles derived from AI therapy data could be used to create highly targeted and manipulative advertising campaigns.

- The risk of psychological manipulation through personalized AI interventions: AI could be used to deliver personalized messages designed to influence individuals' beliefs and actions.

- Ethical concerns about using data to exert social control: Using intimate mental health data to steer people's behavior would undermine their autonomy and turn a tool meant for care into an instrument of control.

The chilling effect on free speech and self-expression

Knowing that they might be monitored can discourage individuals from expressing themselves openly during AI therapy sessions, which hinders the therapeutic process.

- The importance of open and honest communication in therapy: Open and honest communication is essential for effective therapy, and surveillance can inhibit this.

- The potential for self-censorship due to surveillance: Individuals may self-censor their thoughts and feelings to avoid potential repercussions.

- The impact on trust and therapeutic alliance: Surveillance undermines the trust and rapport essential for a successful therapeutic relationship.

Conclusion

The integration of AI into mental healthcare offers potential benefits, but within a surveillance society it also poses significant risks to individual privacy and well-being. We've explored the potential for data breaches, the erosion of therapeutic trust, and the chilling effect on self-expression. The prospect of therapy data being misused for manipulation or folded into broader surveillance systems is alarming. The future of AI therapy is inextricably linked to the surveillance society in which it operates, and we must act responsibly. We urgently need stronger regulations, greater transparency, and a renewed focus on human-centered approaches to mental health care that prioritize privacy and protect individual autonomy. Learn more about the risks of AI therapy in a surveillance society, advocate for the responsible development and deployment of these technologies, and support initiatives that promote ethical, privacy-preserving mental health practices. The time to act is now.