The FTC's OpenAI Investigation: A Deep Dive Into ChatGPT's Practices


2.1 Data Privacy Concerns in ChatGPT and the FTC Investigation:

Data Collection and Usage:

OpenAI's data collection practices surrounding ChatGPT are a central focus of the FTC's investigation. The sheer volume of data collected raises significant data privacy concerns.

- What data is collected? ChatGPT collects user prompts, generated responses, and user interaction data, and may also collect browsing data when it is integrated with a browser. Depending on the user's queries, this data can include sensitive personal information.

- How is data stored? The exact storage methods and security measures employed by OpenAI are subject to ongoing scrutiny. The potential for data breaches and unauthorized access to sensitive user information is a major concern.

- Potential Risks: Data breaches could expose users to identity theft, financial fraud, and reputational damage. Misuse of collected data is another critical risk, especially given the volume and variety of information gathered.

Consent and Transparency:

A key aspect of the FTC's investigation revolves around whether OpenAI obtains informed consent from users and maintains sufficient transparency regarding its data practices.

- User Agreements and Data Policies: The clarity and comprehensibility of OpenAI's user agreements and data policies are under examination. Critics argue that the language may be overly complex, making it difficult for users to understand how their data is collected, used, and protected.

- Informed Consent: The question of whether users truly understand the implications of using ChatGPT and providing their data is crucial. The extent to which users actively consent to the data collection practices is under scrutiny.

- Lack of Transparency: Concerns exist about the lack of transparency regarding OpenAI's algorithms, data handling processes, and data security measures. Improved transparency is essential to build user trust and ensure accountability.

Potential for Misinformation and Bias:

The data used to train ChatGPT, sourced from the vast expanse of the internet, inevitably contains biases and misinformation. The FTC's investigation is examining how these biases might be perpetuated and amplified by the model.

- Biased Outputs: Examples of ChatGPT generating biased or discriminatory outputs have been documented, highlighting the need for robust mitigation strategies.

- Misinformation Spread: The potential for ChatGPT to generate and disseminate misinformation is a serious concern. The model's ability to convincingly produce false information poses risks to public discourse and trust.

- Mitigation Efforts: OpenAI has made some efforts to address bias and misinformation, but their effectiveness is being evaluated as part of the FTC's investigation.

2.2 FTC's Focus on Consumer Protection and Unfair Practices:

Deceptive Marketing and Misrepresentation:

The FTC is also investigating whether OpenAI's marketing materials accurately represent ChatGPT's capabilities and limitations.

- Exaggerated Claims: Concerns have been raised about potential overstatements of ChatGPT's accuracy, reliability, and overall performance.

- Misleading Information: The FTC will likely examine whether OpenAI's marketing materials adequately disclose the potential risks and limitations associated with using ChatGPT.

- Consumer Protection: The investigation aims to determine whether OpenAI's marketing practices violate consumer protection laws by constituting unfair or deceptive trade practices.

Liability and Accountability for Harmful Outputs:

A critical aspect of the FTC's investigation is the issue of liability and accountability for harmful or offensive content generated by ChatGPT.

- Legal and Ethical Considerations: Determining who is responsible when ChatGPT produces harmful content (OpenAI, the users, or both) is a complex legal and ethical challenge.

- AI Responsibility: The investigation could establish important precedents for assigning responsibility and liability in the context of AI-generated content.

- Need for Legal Frameworks: The investigation underscores the need for clearer legal frameworks to address the unique challenges of AI liability.

2.3 The Broader Implications of the FTC's Investigation:

Precedent for Future AI Regulation:

The FTC's OpenAI investigation may set a precedent for how regulatory bodies approach the governance of AI companies.

- Impact on Other AI Developers: The outcome is likely to influence how other AI developers design, deploy, and market their products.

- Shaping AI Regulation: The investigation is likely to contribute significantly to the development of future regulations and ethical guidelines for the AI industry.

- Government Oversight: The investigation signals a growing trend towards increased government oversight and regulation in the field of AI.

Impact on Innovation and Development:

While oversight is necessary to protect consumers and promote ethical AI, the investigation could also slow the pace of innovation.

- Balancing Innovation and Risk: Striking a balance between fostering AI innovation and mitigating its risks is a critical challenge for regulators.

- Responsible AI Development: The investigation could incentivize AI developers to prioritize responsible AI development practices, enhancing user trust and promoting ethical outcomes.

- Stifling Innovation? Concerns exist that overly stringent regulations could stifle innovation and slow the progress of AI development.

Conclusion: The FTC's OpenAI Investigation and the Future of Responsible AI

The FTC's OpenAI investigation is a pivotal moment in the development of AI regulation. The investigation highlights the critical need for responsible AI development, data protection, and consumer protection. The key takeaways emphasize the complexities of data privacy in AI systems, the need for transparency and informed consent, and the urgent requirement for clear legal frameworks to address AI liability.

Follow the FTC's OpenAI investigation closely. Stay updated on developments in responsible AI and learn more about the ethical implications of ChatGPT and similar technologies. The future of AI hinges on a proactive and collaborative approach to ensure its ethical and responsible use. The ongoing dialogue and measures taken now will shape the future of AI and how we harness its power for good.

Featured Posts

-

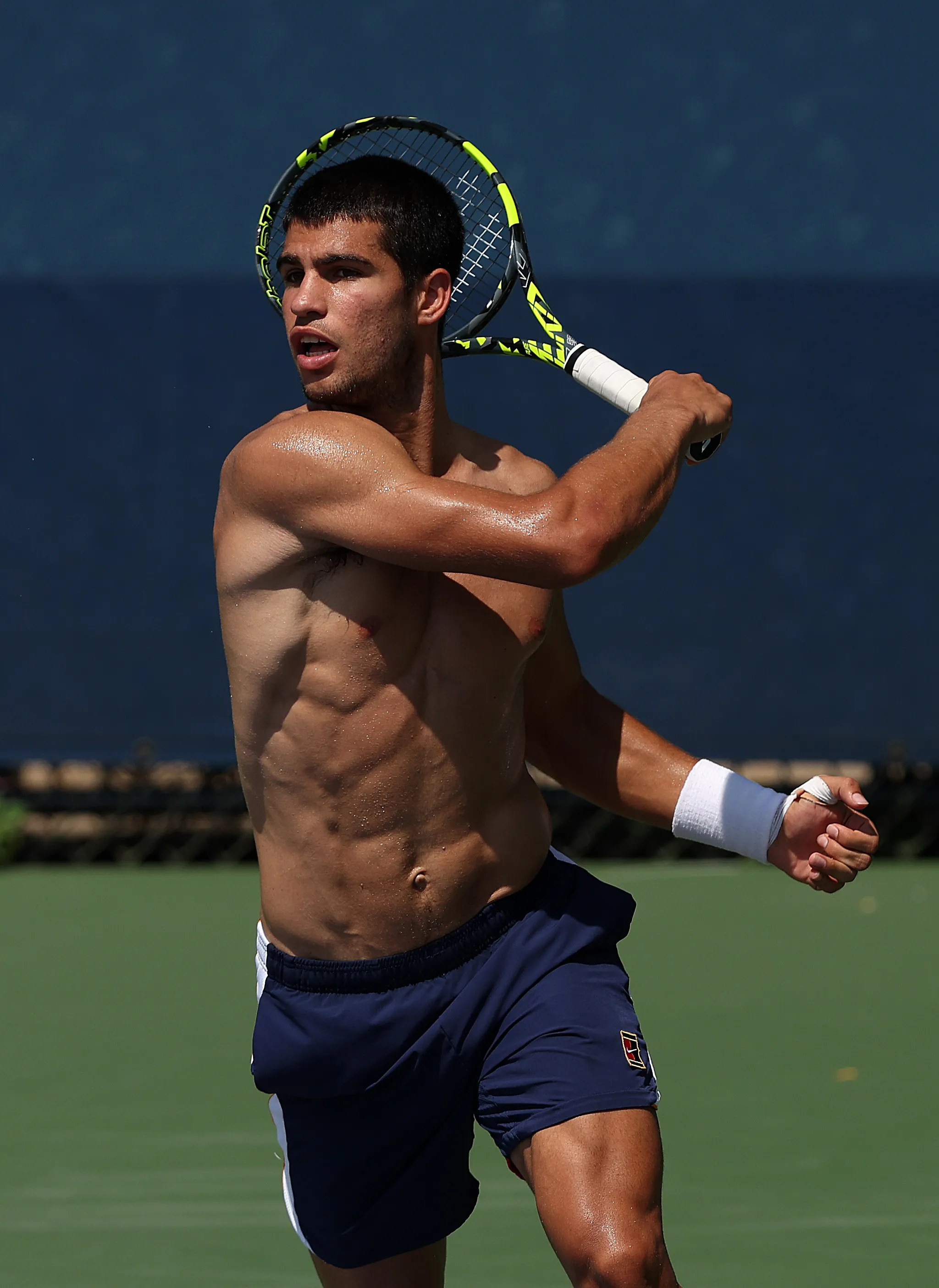

Alcarazs Comeback Winning The Monaco Title

May 30, 2025

Alcarazs Comeback Winning The Monaco Title

May 30, 2025 -

Manchester United Celebra O Talento De Bruno Fernandes

May 30, 2025

Manchester United Celebra O Talento De Bruno Fernandes

May 30, 2025 -

New Iowa Law Limits Cell Phones In Schools What Parents And Students Need To Know

May 30, 2025

New Iowa Law Limits Cell Phones In Schools What Parents And Students Need To Know

May 30, 2025 -

Carlos Alcarazs Monaco Victory A Hard Fought Triumph

May 30, 2025

Carlos Alcarazs Monaco Victory A Hard Fought Triumph

May 30, 2025 -

Legenda Tenisului Andre Agassi Juca Pickleball

May 30, 2025

Legenda Tenisului Andre Agassi Juca Pickleball

May 30, 2025