The Reality Of AI: Why It Doesn't Learn And What That Means For Users


The Myth of True AI Learning

The term "AI learning" often creates a misleading impression of genuine understanding. Let's clarify the difference between machine learning and true learning. While AI excels at processing vast amounts of data and identifying patterns, it lacks the contextual understanding and cognitive flexibility that characterize human learning.

- AI systems operate on statistical probabilities, not genuine comprehension. They identify correlations and extrapolate based on these correlations, but they don't "grasp" the meaning in the same way a human would.

- They identify patterns in data but lack the contextual understanding humans possess. For example, an AI might correctly identify a cat in an image based on its features, but it wouldn't understand the concept of a cat beyond those visual features.

- "Learning" in AI typically refers to algorithmic improvement based on data input, not cognitive development. The algorithm adjusts its parameters to better fit the data, increasing its accuracy in specific tasks, but this doesn't equate to genuine understanding or learning in the human sense.

- AI failures frequently highlight this lack of contextual understanding. For example, an AI trained to identify pedestrians might misclassify a person in an unusual pose or unusual clothing, with potentially dangerous consequences.
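The idea that "learning" means adjusting parameters to fit data can be made concrete with a minimal sketch. It assumes a one-variable linear model y = w*x + b, invented toy data, and plain gradient descent on the mean squared error. Many real systems use far larger models and more elaborate gradient-based optimizers, so treat this as an illustration of the principle rather than of any particular product.

```python
# Toy data that exactly follows y = 2x + 1 (illustrative values, not real measurements).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def mse(w: float, b: float) -> float:
    """Mean squared error of the line y = w*x + b on the toy data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit(steps: int = 2000, lr: float = 0.05) -> tuple[float, float]:
    """Plain gradient descent: repeatedly nudge w and b to reduce the error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = fit()
print(f"w={w:.3f}, b={b:.3f}, error={mse(w, b):.6f}")
```

After training, everything the model "knows" consists of two numbers, w and b, that reproduce the data; nothing in it represents what x or y stand for.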

How AI Systems "Learn": A Deep Dive into Algorithms

AI systems "learn" through various machine learning algorithms. Let's look at three common types:

- Supervised learning: This involves training an algorithm on a labeled dataset, where each data point is associated with a correct output. The algorithm learns to map inputs to outputs based on this labeled data. However, its ability to generalize beyond the training data is limited. Think of it like teaching a child to identify fruits by showing them pictures labelled "apple," "banana," etc. The child learns to associate the images with the labels but might not recognize a fruit they have never seen before.

- Unsupervised learning: Here, the algorithm is presented with unlabeled data and tasked with finding patterns and structures within it. This is analogous to giving a child a box of mixed toys and asking them to group them based on similarities. While useful for discovering hidden patterns, unsupervised learning often lacks interpretability and can be susceptible to noise in the data.

- Reinforcement learning: This approach involves training an agent to interact with an environment and learn through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones. This is like training a dog with treats and reprimands. However, reinforcement learning can be computationally expensive and requires careful design of the reward function to avoid unintended consequences.
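The three paradigms above can be sketched side by side. This is a toy illustration under stated assumptions: the fruit measurements, cluster values and reward probabilities are invented, and the function names (`classify`, `kmeans_1d`, `epsilon_greedy`) are hypothetical. A 1-nearest-neighbour classifier, 1-D k-means and an epsilon-greedy bandit agent are simply common textbook instances of supervised, unsupervised and reinforcement learning, not the only ones.

```python
import random

# Supervised learning: 1-nearest-neighbour classifier.
# Hypothetical labelled examples: (weight in g, length in cm) -> label. Values are illustrative.
LABELLED = [((150, 7), "apple"), ((170, 8), "apple"),
            ((120, 18), "banana"), ((130, 20), "banana")]

def classify(features):
    """Return the label of the closest labelled example (squared Euclidean distance)."""
    def dist(example):
        (weight, length), _label = example
        return (weight - features[0]) ** 2 + (length - features[1]) ** 2
    return min(LABELLED, key=dist)[1]

# Unsupervised learning: 1-D k-means clustering on unlabelled values.
def kmeans_1d(points, k=2, iters=10):
    """Group numbers into k clusters; returns the cluster centers."""
    centers = sorted(points)[:k]  # naive, deterministic initialisation
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

# Reinforcement learning: epsilon-greedy agent on a two-armed bandit.
def epsilon_greedy(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays off best purely from trial-and-error rewards."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)
    values = [0.0] * len(reward_probs)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore at random
        else:
            action = max(range(len(values)), key=values.__getitem__)  # exploit best estimate
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

print(classify((1500, 25)))              # a melon-sized input is still forced into a known label
print(kmeans_1d([1, 2, 3, 10, 11, 12]))  # two groups emerge without any labels
values, counts = epsilon_greedy([0.2, 0.8])
print(counts)                            # the higher-reward action ends up chosen far more often
```

Note that `classify` has no way to answer "I don't know": an input unlike anything in its training data is still assigned one of the known labels, which mirrors the child analogy. Likewise, the bandit agent maximizes whatever `reward_probs` encodes, so a badly specified reward would be optimized just as diligently.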

All three approaches share important limitations. They struggle to extrapolate beyond their training data, which leaves them vulnerable in situations they have not encountered. Biases present in the training data can also be reproduced or even amplified in the resulting AI system, leading to unfair or discriminatory outcomes and undermining its accuracy and reliability.
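Both failure modes in the paragraph above can be reproduced with tiny toy models. The sketch below assumes a nearest-neighbour regressor trained on y = x² for x from 0 to 5, and a deliberately crude "most frequent past decision per group" model trained on invented approval records for two hypothetical groups, A and B. Neither is meant to represent a real system.

```python
# Extrapolation: nearest-neighbour regression trained on y = x**2 for x in 0..5.
TRAIN = [(x, x ** 2) for x in range(6)]

def predict(x):
    """Predict y by copying the target of the closest training input."""
    return min(TRAIN, key=lambda pair: abs(pair[0] - x))[1]

print(predict(4.2))  # 16 (true value 17.64): reasonable inside the training range
print(predict(10))   # 25 (true value 100): the model cannot extrapolate

# Bias amplification: hypothetical past decisions per group (1 = approved, 0 = denied).
HISTORY = {"A": [1] * 8 + [0] * 2, "B": [1] * 4 + [0] * 6}

# "Training": memorise the most frequent past decision for each group.
MODEL = {group: int(sum(d) * 2 > len(d)) for group, d in HISTORY.items()}

def approval_rate(decisions):
    """Fraction of decisions that were approvals."""
    return sum(decisions) / len(decisions)

data_gap = approval_rate(HISTORY["A"]) - approval_rate(HISTORY["B"])
model_gap = MODEL["A"] - MODEL["B"]
print(f"approval gap in data: {data_gap:.1f}, in model output: {model_gap:.1f}")
```

An 80% versus 40% approval gap in the data (0.4) becomes an all-or-nothing gap (1.0) in the model's output. Real models are rarely this crude, but the same pull toward the majority pattern is one way existing disparities can widen.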

The Implications for Users: Understanding the Limitations

Misunderstanding AI's limitations has significant consequences.

- Over-reliance on AI systems can lead to inaccurate or biased results. Users need to critically evaluate the output of AI systems, understanding that they are tools, not oracles.

- AI-generated information deserves the same scrutiny as any other source. Don't blindly accept AI-generated content; consider the biases and limitations it may carry before relying on it.

- The lack of true learning impacts various AI applications. For example, self-driving cars might struggle with unexpected situations, and AI-powered medical diagnosis might miss critical details due to limited contextual understanding.

- The ethical considerations of using AI systems that don't truly "learn" are substantial. Bias in algorithms can perpetuate and amplify existing societal inequalities. Responsible AI development and deployment require careful consideration of these ethical implications.
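Critical evaluation can start with something as simple as checking whether a system's accuracy holds up for every group it serves, not just on average. A minimal sketch, assuming you have the system's predictions, the true outcomes and a group attribute for each case; the records below are invented, and `accuracy_by_group` is a hypothetical helper, not part of any library.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual). Returns accuracy per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {g: correct[g] / total[g] for g in total}

# Invented example: 50 cases per group, with more errors in group B.
records = ([("A", 1, 1)] * 45 + [("A", 0, 1)] * 5
           + [("B", 1, 1)] * 35 + [("B", 0, 1)] * 15)

overall = sum(p == a for _, p, a in records) / len(records)
scores = accuracy_by_group(records)
print(overall, scores)
```

Here an overall accuracy of 0.8 hides a 0.9 versus 0.7 split between groups A and B, the kind of disparity that a single headline number would not reveal.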

The Future of AI and the Pursuit of True Learning

Research continues in areas aiming to achieve more genuine AI learning, including:

- Neural networks: These are loosely inspired by the structure and function of the human brain, but they still lack the symbolic reasoning and common-sense understanding of humans.

- Cognitive architectures: These attempt to model the human mind more comprehensively, integrating perception, memory, reasoning, and learning.

- Symbolic AI: This approach uses logical reasoning and symbolic representations to enable AI systems to handle more complex tasks.
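The symbolic approach can be illustrated with a toy forward-chaining rule engine. This is a minimal sketch under obvious simplifications: the facts and rules are hypothetical, and real symbolic systems add variables, unification and much richer logic. Unlike the statistical methods described earlier in this article, every conclusion it reaches can be traced back to an explicit rule; the flip side is that someone has to write those rules.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until no new facts can be derived.

    facts: set of strings; rules: list of (premises, conclusion) pairs.
    """
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only when all its premises are known and it adds something new.
            if conclusion not in derived and all(p in derived for p in premises):
                derived.add(conclusion)
                changed = True
    return derived

# Hypothetical knowledge base echoing the cat example at the start of the article.
RULES = [
    ({"is_cat"}, "is_mammal"),
    ({"is_mammal"}, "is_animal"),
    ({"is_animal", "is_alive"}, "needs_food"),
]

print(sorted(forward_chain({"is_cat", "is_alive"}, RULES)))
```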

However, creating truly intelligent systems remains an immense challenge. Bridging the gap between current AI capabilities and human-level intelligence requires significant advancements in computer science, neuroscience, and cognitive psychology.

Conclusion

AI systems don't learn in the same way humans do. They rely on identifying statistical patterns within data, a fundamental difference that leads to significant AI learning limitations. Understanding these limitations is crucial for responsible AI use. Become a more critical consumer of AI-generated information, approaching AI applications with a healthy dose of skepticism. Further your understanding by exploring resources on machine learning, AI ethics, and the ongoing quest for artificial general intelligence. Recognizing the limitations of AI learning is key to leveraging its benefits responsibly.

Featured Posts

-

Experience Authentic Italian Staten Island Nonna Restaurants

May 31, 2025

Experience Authentic Italian Staten Island Nonna Restaurants

May 31, 2025 -

A 30 Day Guide To Minimalist Living Tips And Tricks

May 31, 2025

A 30 Day Guide To Minimalist Living Tips And Tricks

May 31, 2025 -

Suge Knights Plea Diddy Should Testify To Show His Human Side

May 31, 2025

Suge Knights Plea Diddy Should Testify To Show His Human Side

May 31, 2025 -

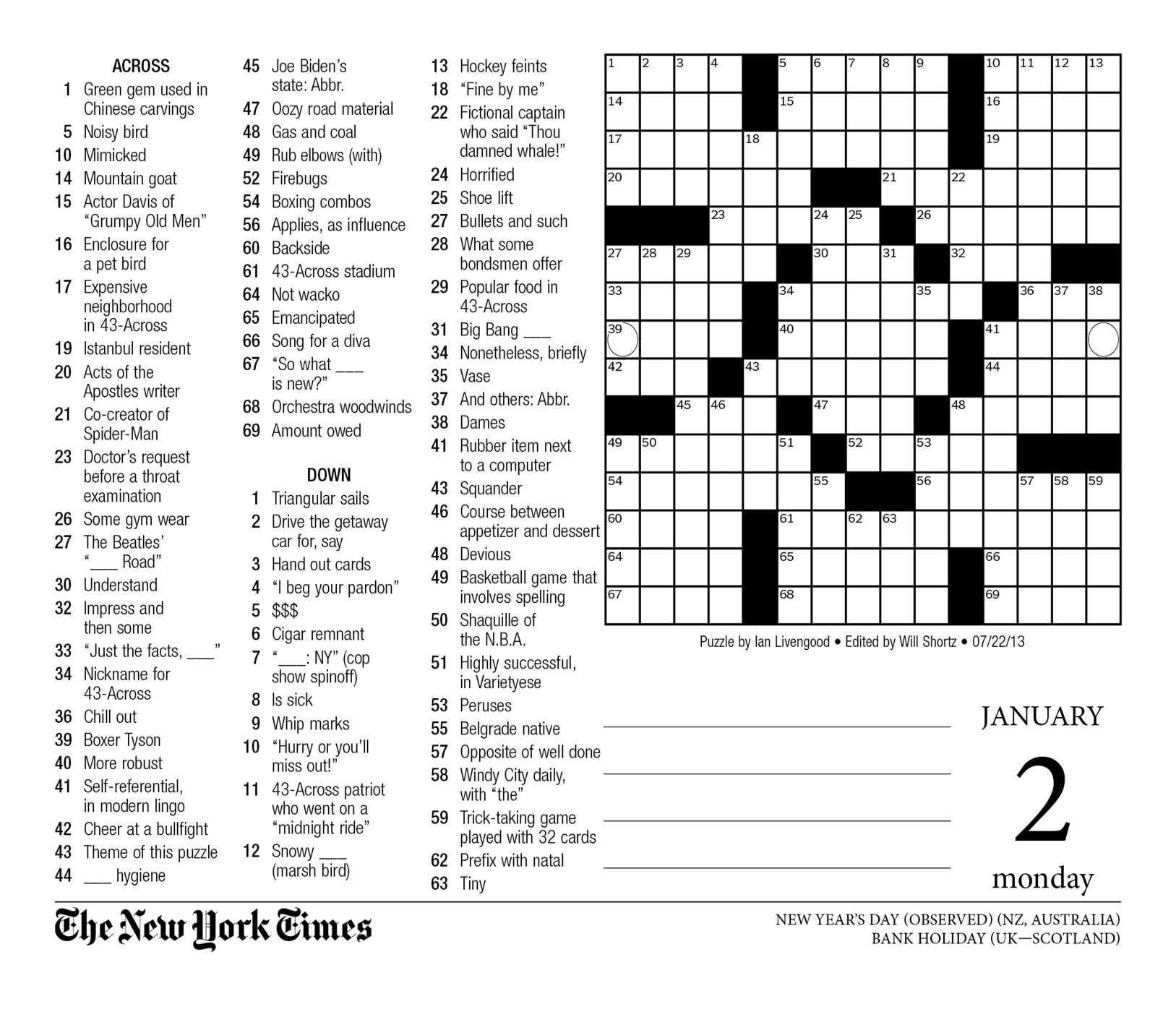

Todays Nyt Mini Crossword March 16 2025 Hints And Solutions

May 31, 2025

Todays Nyt Mini Crossword March 16 2025 Hints And Solutions

May 31, 2025 -

Wohnraummangel Ade Deutsche Stadt Wirbt Mit Kostenlosen Wohnungen

May 31, 2025

Wohnraummangel Ade Deutsche Stadt Wirbt Mit Kostenlosen Wohnungen

May 31, 2025