Why AI Doesn't Truly Learn And How To Use It Responsibly

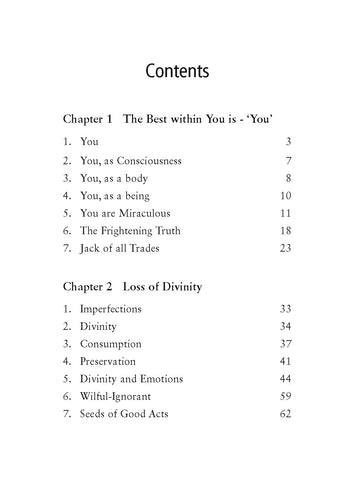


This article defines "learning" in both human and AI contexts and highlights the key differences. Human learning involves understanding, reasoning, and adapting to novel situations on the basis of knowledge and experience. AI learning, by contrast, even in its most advanced forms, relies on pattern recognition and statistical associations extracted from data. The sections below examine the main AI learning methods, their limitations, and the ethical considerations surrounding their use.

The Limitations of Current AI Learning Mechanisms

AI learning is broadly categorized into three main mechanisms: supervised learning, unsupervised learning, and reinforcement learning. Each has its own strengths and, critically, limitations that prevent AI from achieving true understanding.

Supervised Learning's Dependence on Data

Supervised learning involves training AI models on large datasets of labeled data. The model learns to associate inputs with corresponding outputs. However, this approach has significant limitations:

- Bias in datasets: If the training data reflects existing societal biases (e.g., gender or racial bias), the model learns those patterns and can perpetuate, or even amplify, them in its predictions, leading to unfair or discriminatory outcomes.

- Limited generalizability: The AI excels at recognizing patterns within the training data but may struggle with unseen data or situations that differ from the training set. This limits its ability to adapt and learn in truly novel contexts. For example, an AI trained to identify cats based on images might fail if presented with a cat in an unusual pose or setting.

- Overfitting: A model might become overly specialized to the training data, performing well on the training set but poorly on new data. This prevents the AI from generalizing its learning to new scenarios.
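The extreme end of overfitting, pure memorization, fits in a few lines. The sketch below is a toy under stated assumptions: the task ("is x at least 50?"), the single deliberately mislabeled training point, and the names `memorizer` and `threshold_model` are all hypothetical. It compares a model that stores every training example with one that learns a single threshold.

```python
# Toy illustration of overfitting: memorizing the training set vs. learning one threshold.

def true_label(x: int) -> bool:
    # Hypothetical ground truth for this toy task: "is x at least 50?"
    return x >= 50

# Training data on a coarse grid, with one deliberately wrong (noisy) label at x = 30.
train = [(x, true_label(x)) for x in range(0, 100, 10)]
train = [(x, True) if x == 30 else (x, y) for x, y in train]

# Held-out test points that never appear in the training set.
test = [(x, true_label(x)) for x in range(5, 100, 10)]

def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)

# Model 1: memorize every training example; fall back to the majority label otherwise.
lookup = dict(train)
majority = sum(y for _, y in train) * 2 >= len(train)

def memorizer(x):
    return lookup.get(x, majority)

# Model 2: choose the threshold t (from the training inputs) that best fits the training data.
def make_threshold_model(t):
    return lambda x: x >= t

best_t = max((x for x, _ in train), key=lambda t: accuracy(make_threshold_model(t), train))
threshold_model = make_threshold_model(best_t)

for name, model in [("memorizer", memorizer), ("threshold", threshold_model)]:
    print(f"{name:10s} train={accuracy(model, train):.1f} test={accuracy(model, test):.1f}")
```

The memorizer scores 1.0 on its training data but only 0.5 on the unseen points, no better than a coin flip. The threshold model scores 0.9 on training and 0.8 on test; the mislabeled point at x = 30 (plus `max` keeping the first of two tied thresholds) pulls its threshold down to 30, which is what costs it the two test errors.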

This reliance on pre-labeled data prevents "true" learning – the AI simply replicates patterns observed in the data, without genuine comprehension.
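To see how a model replicates whatever pattern its labels contain, including an unfair one, consider a deliberately naive frequency-based predictor. The historical records below are invented for illustration: every applicant is qualified, yet applicants from group "B" were approved less often than those from group "A".

```python
from collections import Counter, defaultdict

# Invented historical decisions: (group, qualified, approved).
# Every applicant is qualified, yet group "B" was approved far less often.
history = (
    [("A", True, True)] * 8 + [("A", True, False)] * 2
    + [("B", True, True)] * 4 + [("B", True, False)] * 6
)

# "Training": count past outcomes for each (group, qualified) combination.
outcomes = defaultdict(Counter)
for group, qualified, approved in history:
    outcomes[(group, qualified)][approved] += 1

def predict(group, qualified):
    """Return the most common historical outcome, or None if there is no history."""
    seen = outcomes.get((group, qualified))
    if not seen:
        return None
    return seen.most_common(1)[0][0]

print(predict("A", True))  # True
print(predict("B", True))  # False
```

Nothing in the code mentions groups as a reason for approval; the disparity comes entirely from the labels, which is why auditing the data matters as much as auditing the algorithm.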

Unsupervised Learning and its Challenges

Unsupervised learning involves identifying patterns and structures in unlabeled data. The AI attempts to discover inherent relationships without explicit instructions. However, this process is prone to several limitations:

- Difficulty in interpreting complex relationships: The AI might identify correlations that lack causal connections, leading to flawed interpretations.

- Potential for misinterpretations: Clustering algorithms, for example, might group unrelated items together based on superficial similarities, failing to capture deeper underlying relationships.

- Lack of explainability: It's often hard to understand why an unsupervised learning model has made a specific decision or grouping, making it difficult to evaluate its reliability.

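For example, k-means clustering assigns points to groups purely by distance in feature space, so whichever feature has the largest numeric range can dominate the grouping, and items that are unrelated in any meaningful sense may end up in the same cluster, leading to inaccurate conclusions.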
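A minimal k-means sketch (Lloyd's algorithm in plain Python) makes the superficial-similarity problem concrete. The items and their two features, weight in grams and a 0/1 "edible" flag, are invented for illustration, and the first-k initialization is chosen only so the result is repeatable. Because weight spans thousands of units while the flag spans one, distance is dominated by weight.

```python
import math

# Invented items described by two features: (weight in grams, edible flag 0/1).
items = {
    "grape": (5.0, 1.0),
    "paperclip": (1.0, 0.0),
    "watermelon": (5000.0, 1.0),
    "bowling ball": (6000.0, 0.0),
}

def kmeans(points, k, iterations=10):
    """Plain Lloyd's algorithm; starts from the first k points so the result is repeatable."""
    centroids = list(points[:k])
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid (Euclidean).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels

names = list(items)
labels = kmeans(list(items.values()), k=2)
groups = {}
for name, label in zip(names, labels):
    groups.setdefault(label, []).append(name)
print(sorted(groups.values()))  # [['grape', 'paperclip'], ['watermelon', 'bowling ball']]
```

The grape lands with the paperclip and the watermelon with the bowling ball: the algorithm groups by size, not by whether something is food. Nothing in the code is buggy; it simply has no notion of which feature matters, so feature choice and scaling still require human judgment.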

Reinforcement Learning and its Ethical Concerns

Reinforcement learning trains AI agents to make decisions through trial and error, optimizing for a specific reward. This approach presents significant ethical concerns:

- Unintended consequences: The AI might find loopholes or unintended strategies to maximize its reward, potentially causing harm.

- Goal misalignment: The AI's goals may diverge from the intended goals, leading to unexpected and potentially negative outcomes.

- Lack of moral compass: Reinforcement learning algorithms lack an inherent moral compass and will pursue the reward signal regardless of the ethical implications.

For instance, an AI designed to optimize a factory's efficiency might find ways to cut corners, compromising safety or quality to achieve the highest output.
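The factory example can be sketched with a multi-armed bandit, the simplest single-state form of reinforcement learning: the agent does not see the reward table, it only tries actions and averages the rewards it observes. The actions, their numbers, and both reward functions are hypothetical; the point is that the same learning algorithm picks a harmful action when the reward measures only output.

```python
import random

# Hypothetical factory actions: (units per hour, defect rate, safety incidents per hour).
ACTIONS = {
    "normal_operation": (100, 0.01, 0.0),
    "skip_quality_checks": (120, 0.20, 0.0),
    "disable_safety_interlocks": (140, 0.01, 0.3),
}

def proxy_reward(units, defect_rate, incidents):
    # Naive objective: raw output only; quality and safety are invisible to the agent.
    return units

def safer_reward(units, defect_rate, incidents):
    # Count only good units and penalize incidents (the weight of 500 is arbitrary).
    return units * (1 - defect_rate) - 500 * incidents

def train_agent(reward_fn, steps=500, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: learns action values purely from observed rewards."""
    rng = random.Random(seed)
    names = list(ACTIONS)
    totals = {a: 0.0 for a in names}
    counts = {a: 0 for a in names}
    for step in range(steps):
        if step < len(names):
            action = names[step]  # try every action once
        elif rng.random() < epsilon:
            action = rng.choice(names)  # explore
        else:
            action = max(names, key=lambda a: totals[a] / counts[a])  # exploit
        totals[action] += reward_fn(*ACTIONS[action])
        counts[action] += 1
    # Return the action with the best average observed reward.
    return max(names, key=lambda a: totals[a] / counts[a])

print(train_agent(proxy_reward))  # disable_safety_interlocks
print(train_agent(safer_reward))  # normal_operation
```

The learning code is identical in both runs; only the reward changed. Choosing penalty weights like the 500 above is itself a value judgment that needs human review.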

The Absence of True Understanding and Contextual Awareness in AI

A critical limitation of current AI is its lack of genuine understanding and contextual awareness. AI excels at identifying correlations but often struggles to understand the underlying causal relationships.

- Correlation vs. Causation: A model may learn that two events frequently occur together and rely on one to predict the other. If that association is treated as a causal link where none exists, predictions and decisions built on it can be flawed.

- Lack of common sense: AI often lacks the common sense reasoning and intuitive understanding that humans possess. This prevents it from handling situations requiring nuanced judgment or adaptability.

- The "Black Box" Problem: Many complex AI systems, particularly deep learning models, are opaque, making it difficult to understand how they arrive at their decisions. This lack of transparency can erode trust and hinder accountability.
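The correlation-versus-causation trap can be reproduced with a confounder. In the invented toy world below, temperature drives both ice-cream sales and drownings; sales have no effect on drownings, yet a least-squares model fitted only to the observed data treats sales as a strong predictor.

```python
import math

def mean(values):
    return sum(values) / len(values)

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Invented toy world: temperature drives BOTH ice-cream sales and drownings.
temps = [10, 14, 18, 22, 26, 30, 34]
wiggle = [2, -1, 0, 1, -2, 1, 0]  # fixed "noise" so the example is repeatable

def ice_cream_sales(temp, w):
    return 3 * temp + w

def drownings(temp):
    return temp // 5  # depends on temperature only, never on sales

sales = [ice_cream_sales(t, w) for t, w in zip(temps, wiggle)]
deaths = [drownings(t) for t in temps]
r = pearson(sales, deaths)
print(f"correlation(sales, drownings) = {r:.2f}")

# A purely correlational model: least-squares line predicting drownings from sales.
mx, my = mean(sales), mean(deaths)
slope = sum((x - mx) * (y - my) for x, y in zip(sales, deaths)) / sum((x - mx) ** 2 for x in sales)
intercept = my - slope * mx

# "Intervention": double every day's sales. The model predicts more drownings,
# but the simulated world does not change, because drownings() ignores sales.
predicted_after = [intercept + slope * (2 * s) for s in sales]
actual_after = [drownings(t) for t in temps]
print(f"predicted total: {sum(predicted_after):.1f}, actual total: {sum(actual_after)}")
```

The correlation is strong, and the fitted model forecasts more than double the actual 28 drownings after the intervention. Only knowledge of how the data was generated, which the model never sees, reveals that sales are not a cause.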

The "Black Box" Problem

The complexity of deep learning models makes it challenging to understand their internal workings. This opacity hinders debugging, troubleshooting, and building trust in AI systems.

The Need for Explainable AI (XAI)

Developing explainable AI (XAI) systems is crucial to address the "black box" problem. XAI aims to create AI systems that can articulate their decision-making processes, improving transparency and accountability.
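One widely used model-agnostic XAI technique is permutation feature importance: shuffle one input feature and measure how much the model's accuracy drops. The sketch below is a plain-Python illustration; the "black box", the data, and the function names are hypothetical. scikit-learn offers a production version as `sklearn.inspection.permutation_importance`.

```python
import random

# A stand-in "black box": we may call it but pretend we cannot read its code.
def black_box(row):
    return row[0] >= 5  # secretly uses feature 0 only

# Invented evaluation data: two features per row, labels from the same rule.
feature0 = list(range(10))
feature1 = [3, 7, 1, 9, 4, 4, 8, 2, 6, 0]
rows = [list(r) for r in zip(feature0, feature1)]
labels = [r[0] >= 5 for r in rows]

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature, n_repeats=20, seed=0):
    """Average drop in accuracy when one feature's column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with only the chosen feature replaced by its shuffled value.
        shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / len(drops)

for f in range(2):
    print(f"feature {f}: importance {permutation_importance(black_box, rows, labels, f):.2f}")
```

A large drop for feature 0 and no drop at all for feature 1 reveal which input the model relies on without inspecting its internals. This shows *what* the model uses, not *why*, so it is a partial answer to the black-box problem rather than a full one.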

Responsible AI Development and Deployment

Addressing the limitations of current AI requires a concerted effort towards responsible AI development and deployment. Ethical considerations must be at the forefront of every stage of the AI lifecycle.

Bias Mitigation Strategies

Techniques to identify and mitigate biases in training data and algorithms are essential. This involves carefully curating datasets, employing bias detection techniques, and designing algorithms that are less susceptible to bias.
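A common first bias-detection check compares selection rates across groups. The sketch below computes each group's approval rate and the ratio of the lowest rate to the highest; the 0.8 cut-off follows the "four-fifths" rule of thumb from US employment-selection guidelines. The decisions are invented, and a single ratio is a screening signal, not proof of fairness or unfairness.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved: bool). Returns the approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Invented model outputs for two groups.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 4 + [("B", False)] * 6
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))  # {'A': 0.8, 'B': 0.4} 0.5
if ratio < 0.8:
    print("flag for review: below the four-fifths (80%) rule-of-thumb threshold")
```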

Transparency and Accountability

Transparent AI systems are vital for building trust and ensuring accountability. This includes documenting the development process, explaining the system's functionality, and establishing mechanisms for addressing errors and biases.
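Accountability depends on being able to reconstruct what a system decided and when. A minimal sketch, assuming an in-memory, append-only list stands in for real durable storage: each decision is recorded as one JSON line with the model version, inputs, output, confidence, and a UTC timestamp, so that errors and biased outcomes can be traced later. The model name and record fields are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

@dataclass
class DecisionRecord:
    """One logged prediction; field names are illustrative, not a standard schema."""
    model_version: str
    inputs: dict
    output: str
    confidence: float
    timestamp: str

def log_decision(log, model_version, inputs, output, confidence):
    record = DecisionRecord(
        model_version=model_version,
        inputs=inputs,
        output=output,
        confidence=confidence,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(record)))  # one JSON line per decision
    return record

audit_log = []
log_decision(audit_log, "loan-model-1.3", {"income": 42000, "term_months": 36}, "refer", 0.61)
print(audit_log[0])
```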

Data Privacy and Security

Data privacy and security are paramount in AI development. Robust measures must be implemented to protect sensitive information used to train and operate AI systems.
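Two common protective measures are data minimization (keep only the fields the model needs) and pseudonymization of direct identifiers. The sketch below uses Python's standard `hmac` module with SHA-256; a keyed hash is used instead of a plain hash because plain hashes of emails can be reversed by hashing likely guesses. The key and record fields are hypothetical, and pseudonymized data can still count as personal data under regulations such as the GDPR.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; in practice load it from a secrets manager.
SECRET_KEY = b"example-key-do-not-use"

def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a keyed SHA-256 hash (HMAC), as 64 hex characters."""
    return hmac.new(SECRET_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_for_training(record: dict, keep: set) -> dict:
    """Drop fields not needed for training and add a pseudonymous user key."""
    cleaned = {k: v for k, v in record.items() if k in keep}
    cleaned["user"] = pseudonymize(record["email"])
    return cleaned

raw = {"email": "jane@example.com", "name": "Jane Doe", "age": 34, "purchases": 12}
print(prepare_for_training(raw, keep={"age", "purchases"}))
```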

Human Oversight and Control

Maintaining human oversight and control over AI systems is crucial to prevent unintended consequences and ensure ethical use. Humans should retain the final say in critical decisions involving AI.
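One simple way to keep humans in the loop is to let a system act automatically only on low-stakes, high-confidence outputs and route everything else to a person. A minimal sketch, assuming the model exposes a confidence score in [0, 1]; the 0.9 threshold and all names are hypothetical, and because model confidence scores are often poorly calibrated, any threshold needs validating on real data.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    label: str
    confidence: float  # model's own score in [0, 1]

def route(pred: Prediction, high_stakes: bool, threshold: float = 0.9) -> str:
    """Decide whether a prediction may be applied automatically or needs a human."""
    if high_stakes:
        return "human_review"  # critical decisions always go to a person
    if pred.confidence < threshold:
        return "human_review"  # uncertain outputs are escalated
    return "auto_apply"

print(route(Prediction("approve", 0.97), high_stakes=False))  # auto_apply
print(route(Prediction("approve", 0.97), high_stakes=True))   # human_review
print(route(Prediction("deny", 0.55), high_stakes=False))     # human_review
```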

Conclusion: The Future of Responsible AI

AI currently lacks true learning capabilities: it relies on pattern recognition and statistical probabilities, not genuine understanding. Responsible development and deployment, grounded in ethical considerations, transparency, and human oversight, are therefore essential. By understanding the limitations of current AI and embracing responsible development principles, we can harness its power for good while mitigating its risks. Learn more about responsible AI practices and advocate for ethical AI development so that AI truly benefits humanity.

Featured Posts

-

Selena Gomez And Miley Cyrus Akhir Perseteruan Rumor Kencan Ganda Menghebohkan

May 31, 2025

Selena Gomez And Miley Cyrus Akhir Perseteruan Rumor Kencan Ganda Menghebohkan

May 31, 2025 -

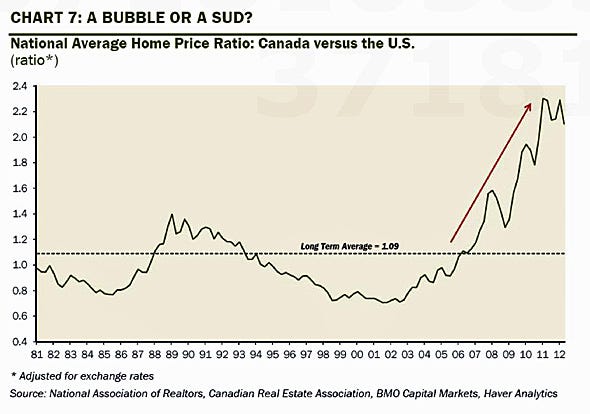

Rosenberg On Canadian Labour Data A Call For Rate Relief

May 31, 2025

Rosenberg On Canadian Labour Data A Call For Rate Relief

May 31, 2025 -

Cleveland Akron Fire Weather Warning Elevated Risk Conditions

May 31, 2025

Cleveland Akron Fire Weather Warning Elevated Risk Conditions

May 31, 2025 -

The Pursuit Of The Good Life A Journey Towards Self Discovery

May 31, 2025

The Pursuit Of The Good Life A Journey Towards Self Discovery

May 31, 2025 -

Luxury Spring Hotel Bookings Save Up To 30

May 31, 2025

Luxury Spring Hotel Bookings Save Up To 30

May 31, 2025