Are Tech Companies To Blame When Algorithms Radicalize Mass Shooters? A Critical Analysis

The Role of Social Media Algorithms in Echo Chambers and Filter Bubbles

Algorithms designed to maximize engagement can inadvertently amplify extremist viewpoints, creating echo chambers and filter bubbles. This process of algorithmic radicalization contributes significantly to the problem.

Amplification of Extremist Content:

Algorithms prioritize content that generates high engagement, often leading to the amplification of extremist narratives. This creates a dangerous feedback loop where users are increasingly exposed to reinforcing viewpoints, solidifying their beliefs and potentially pushing them toward radicalization.

- Targeted advertising by extremist groups: Sophisticated targeting tools allow extremist groups to reach vulnerable individuals directly with their propaganda.

- Recommendation systems promoting radical content: Algorithms suggest related videos, articles, and groups, leading users down a rabbit hole of increasingly extreme content.

- Lack of effective content moderation: The sheer volume of online content makes it challenging for platforms to effectively identify and remove extremist material. This lack of moderation contributes directly to the proliferation of harmful ideologies.
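The feedback loop described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real platform: the `Post` type, the `extremity` label, the exposure formula, and the rule that more extreme posts draw more reactions are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    extremity: float   # hypothetical 0..1 label, for illustration only
    engagement: float  # accumulated engagement score


def rank_by_engagement(posts):
    """Order posts by accumulated engagement, highest first."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def simulate_feedback_loop(posts, rounds):
    """Repeatedly rank posts, then let exposure generate new engagement."""
    for _ in range(rounds):
        for position, post in enumerate(rank_by_engagement(posts)):
            # Assumption: higher slots in the feed are seen more often.
            exposure = 1.0 / (position + 1)
            # Assumption: more provocative posts draw more reactions per view.
            reaction_rate = 0.1 + post.extremity
            post.engagement += exposure * reaction_rate
    return rank_by_engagement(posts)


def make_posts():
    """Three made-up posts that start with identical engagement."""
    return [
        Post("local news recap", extremity=0.1, engagement=10.0),
        Post("heated opinion piece", extremity=0.5, engagement=10.0),
        Post("extremist narrative", extremity=0.9, engagement=10.0),
    ]


if __name__ == "__main__":
    for post in simulate_feedback_loop(make_posts(), rounds=5):
        print(f"{post.title}: {post.engagement:.2f}")
```

Even though all three posts start level, the ranking rewards whichever post collects reactions fastest, and the extra exposure then widens the gap. Under these assumptions the most extreme post ends up at the top of the feed and stays there.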

The Psychology of Online Radicalization:

The psychological impact of algorithms on radicalization cannot be ignored. Algorithms exploit cognitive biases to reinforce extremism.

- Reinforcement of pre-existing beliefs through targeted content: Algorithms serve up content that confirms users' existing biases, strengthening their commitment to those beliefs.

- Increased feelings of belonging and validation within online extremist communities: Algorithms create spaces where like-minded individuals can connect, fostering a sense of belonging and validation that can fuel radicalization.

- Desensitization to violence through repeated exposure: Constant exposure to violent content, even passively through algorithmic suggestion, can desensitize individuals to violence and normalize it as a means to an end.

Responsibility and Accountability: Who is to Blame?

The question of responsibility is complex, involving both tech companies and individuals. Understanding the roles of each is crucial for effective solutions.

Tech Companies' Moral and Legal Obligations:

Tech companies face significant ethical dilemmas in balancing free speech principles with the prevention of harm. Existing legal frameworks often struggle to address this challenge.

- Section 230 of the Communications Decency Act (or equivalent in other jurisdictions) and its implications: This legislation protects online platforms from liability for user-generated content, but its applicability in the context of algorithm-driven radicalization is increasingly debated.

- Challenges in identifying and removing extremist content effectively and swiftly: Identifying and removing extremist content is a constant battle against sophisticated techniques used by extremist groups to evade detection.

- The potential for legal action against tech companies for negligence: As the understanding of algorithms’ role in radicalization improves, the possibility of legal action against tech companies for negligence increases.

The Individual's Agency and Responsibility:

While algorithms play a significant role, individuals also bear responsibility for their online choices. This emphasizes the need for individual empowerment and media literacy.

- Critical thinking and media literacy as crucial safeguards: Individuals need to develop critical thinking skills to evaluate the credibility and bias of online information.

- The importance of personal responsibility in navigating online spaces: Users must actively choose to engage with responsible content and avoid echo chambers.

- The need for education and awareness campaigns: Public awareness campaigns are crucial in promoting media literacy and critical thinking skills.

Mitigating the Risk: Potential Solutions and Strategies

Addressing the algorithmic radicalization of mass shooters requires a multifaceted approach that combines technological and societal solutions.

Improved Algorithm Design and Content Moderation:

Algorithms need to be redesigned to prioritize safety and well-being over engagement metrics. This requires significant changes in how social media platforms operate.

- AI-powered detection and removal of extremist content: Utilizing AI to identify and remove harmful content is essential, but requires constant improvement and adaptation to avoid bias and censorship.

- Human oversight and review processes for flagged content: Human review is crucial for ensuring accuracy and fairness in content moderation.

- Collaboration between tech companies and researchers to develop better detection methods: Effective solutions require collaboration between industry leaders and researchers in fields like AI, psychology, and sociology.
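One way to picture ranking that weighs safety alongside engagement, with human review for flagged items, is the short sketch below. It is illustrative only: the `risk` score stands in for the output of some unspecified classifier, and the `REVIEW_THRESHOLD` value and the scoring formula are assumptions rather than any platform's actual policy.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.5  # hypothetical cutoff for sending an item to human reviewers


@dataclass
class Item:
    title: str
    engagement: float  # predicted engagement, 0..1
    risk: float        # hypothetical classifier risk score, 0..1


def safety_adjusted_score(item):
    """Discount predicted engagement by the estimated risk of harm."""
    return item.engagement * (1.0 - item.risk)


def rerank_with_review(items, review_threshold=REVIEW_THRESHOLD):
    """Split items into a ranked feed and a queue for human review."""
    review_queue = [i for i in items if i.risk >= review_threshold]
    eligible = [i for i in items if i.risk < review_threshold]
    ranked = sorted(eligible, key=safety_adjusted_score, reverse=True)
    return ranked, review_queue


if __name__ == "__main__":
    feed, queue = rerank_with_review([
        Item("viral conspiracy clip", engagement=0.9, risk=0.8),
        Item("community event notice", engagement=0.6, risk=0.1),
        Item("angry political rant", engagement=0.7, risk=0.4),
    ])
    print("feed:", [i.title for i in feed])
    print("needs human review:", [i.title for i in queue])
```

In this sketch, items above the threshold are held for a person to assess rather than removed automatically, which reflects the point that automated detection needs human oversight to limit bias and over-removal.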

Promoting Media Literacy and Critical Thinking:

Equipping individuals with the skills to evaluate online information critically is essential to combating online radicalization.

- Educational programs in schools and communities: Media literacy education should be integrated into school curricula and community outreach programs.

- Public awareness campaigns on online safety and radicalization: Public awareness campaigns can educate individuals about the risks of online radicalization and provide strategies for self-protection.

- Collaboration with mental health professionals to address underlying vulnerabilities: Addressing underlying psychological vulnerabilities that make individuals susceptible to radicalization is crucial.

Conclusion

The question of whether tech companies bear responsibility when algorithms contribute to the radicalization of mass shooters is complex and multifaceted. While algorithms are tools that can be misused, responsibility does not rest solely with tech companies. Improved algorithm design, effective content moderation, and a strong emphasis on media literacy are crucial steps toward mitigating the risk. However, individual agency and responsibility remain vital components in combating online radicalization. We need a multi-pronged approach involving tech companies, governments, educational institutions, and individuals to address this growing problem effectively. Further research and ongoing discussion of the role algorithmic radicalization plays in mass shootings are essential to finding lasting solutions. We must work together to prevent the tragic consequences of algorithmic radicalization and create safer online spaces.

Featured Posts

-

Over 100 Firefighters Battle Major Shop Blaze In East London

May 31, 2025

Over 100 Firefighters Battle Major Shop Blaze In East London

May 31, 2025 -

Nikola Jokics One Handed Flick A Key Moment In Nuggets Blowout Victory

May 31, 2025

Nikola Jokics One Handed Flick A Key Moment In Nuggets Blowout Victory

May 31, 2025 -

Ingenierie Castor Testee Succes Dans Deux Cours D Eau Dromois

May 31, 2025

Ingenierie Castor Testee Succes Dans Deux Cours D Eau Dromois

May 31, 2025 -

Monte Carlo Masters Alcaraz Triumphs Over Davidovich Fokina Advances To Final

May 31, 2025

Monte Carlo Masters Alcaraz Triumphs Over Davidovich Fokina Advances To Final

May 31, 2025 -

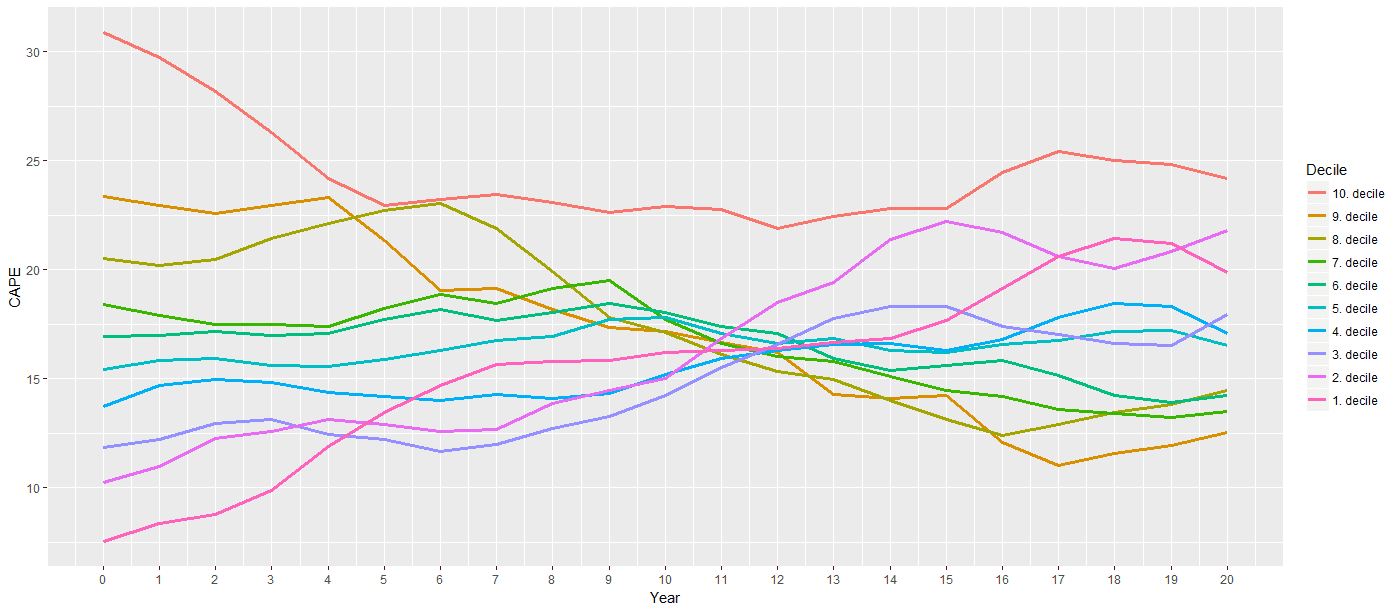

Why Current Stock Market Valuations Arent A Cause For Investor Alarm Bof A

May 31, 2025

Why Current Stock Market Valuations Arent A Cause For Investor Alarm Bof A

May 31, 2025