Character AI Chatbots And Free Speech: A Legal Grey Area

The First Amendment and AI-Generated Content

Defining "Speech" in the AI Context

Does the First Amendment, which protects freedom of speech in the United States, protect the output of an AI chatbot? This question presents significant legal nuances. Defining "speech" when it's generated by an algorithm requires careful consideration of several factors:

- The role of the programmer: The initial design and coding of the AI significantly influence its output. Does the programmer's intent play a role in determining whether the generated content is protected speech?

- The user's prompts: User input acts as a catalyst for the AI's response. To what extent does the user's prompt shape the content and influence its protection under free speech principles?

- The AI's autonomous learning: As AI models learn and evolve through machine learning, their responses become less predictable. How do we assign responsibility for content generated through this autonomous learning process?

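A crucial distinction may lie between AI-generated hate speech and human-generated hate speech. Human-generated hate speech is addressed by existing legal frameworks: in the US it is generally protected unless it falls into a narrow unprotected category such as incitement or true threats. The legal implications of AI-generated hate speech are far less clear, because those frameworks turn on a speaker's intent and agency, which a chatbot does not obviously have.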

Platform Responsibility vs. User Responsibility

Who bears responsibility for illegal or harmful content generated by a Character AI chatbot? In the US, Section 230 of the Communications Decency Act (CDA) shields online platforms from liability for content provided by their users, but whether that protection extends to AI-generated content is debated, because the output is produced by the platform's own model rather than by a third party.

- Case law related to online platforms and content moderation: Existing case law focuses largely on human-generated content. Applying these precedents to AI-generated content requires careful analysis of the unique characteristics of AI systems.

- Challenges of content moderation at scale for AI-generated content: Moderating AI-generated content presents unprecedented scalability challenges. The sheer volume and unpredictable nature of the content make traditional moderation techniques inadequate.

The debate centers on whether Character AI, as a platform, should be held responsible for the content generated by its AI, even if it's generated in response to user prompts.

Character AI's Specific Policies and Practices

Character AI's Terms of Service and Content Moderation

Character AI, like other AI chatbot platforms, has established terms of service outlining prohibited content. Understanding the effectiveness of its content moderation systems is crucial.

- Examples of prohibited content: Character AI's terms likely prohibit content such as hate speech, harassment, threats, incitement to violence, and illegal activities. The specific wording and enforcement of these prohibitions are key factors.

- Transparency of content moderation processes: The lack of transparency regarding Character AI's moderation processes raises concerns. Users need to understand how decisions are made and have avenues to appeal them.

The effectiveness of Character AI's content moderation heavily influences its legal standing and user trust.

Enforcement and Accountability

Character AI needs mechanisms to enforce its terms of service and provide accountability.

- Effectiveness of reporting and appeals process: How easily can users report violations, and how responsive is Character AI to these reports? The efficiency and fairness of the appeals process are crucial.

- Potential for bias in content moderation algorithms: AI-powered content moderation systems are susceptible to bias present in their training data. This bias can lead to unfair or discriminatory outcomes.

Addressing these concerns is critical for ensuring fairness and building user confidence.

Emerging Legal Challenges and Future Implications

The Need for Clearer Legal Frameworks

The rapid development of AI chatbots like Character AI necessitates updated laws and regulations.

- Potential legislative approaches: Legislators must consider various approaches, such as content-based restrictions, platform liability rules, or AI-specific regulations.

- Impact on innovation and freedom of expression: Balancing legal regulation with the need to protect free speech and foster innovation is a critical challenge.

A carefully crafted legal framework is essential for navigating this complex terrain.

International Legal Differences

The legal landscape surrounding AI-generated content varies significantly across jurisdictions.

- Comparison of legal frameworks: Understanding the nuances of different legal frameworks is crucial for global platforms like Character AI.

- Complexities of enforcing international regulations: Enforcing international regulations on AI chatbots is a significant challenge, requiring international cooperation and harmonization.

The global nature of AI demands international collaboration on legal standards.

Conclusion

The intersection of Character AI chatbots and free speech presents a complex and evolving legal landscape. While the First Amendment provides a foundation for free expression, the unique characteristics of AI-generated content necessitate careful consideration of platform responsibility, content moderation, and the need for updated legal frameworks. The ongoing debate surrounding Character AI and similar platforms highlights the urgent need for clearer regulations that balance the protection of free speech with the prevention of harm. Because the law in this area is still developing, readers should continue to follow legal developments concerning Character AI and AI-generated content.

Featured Posts

-

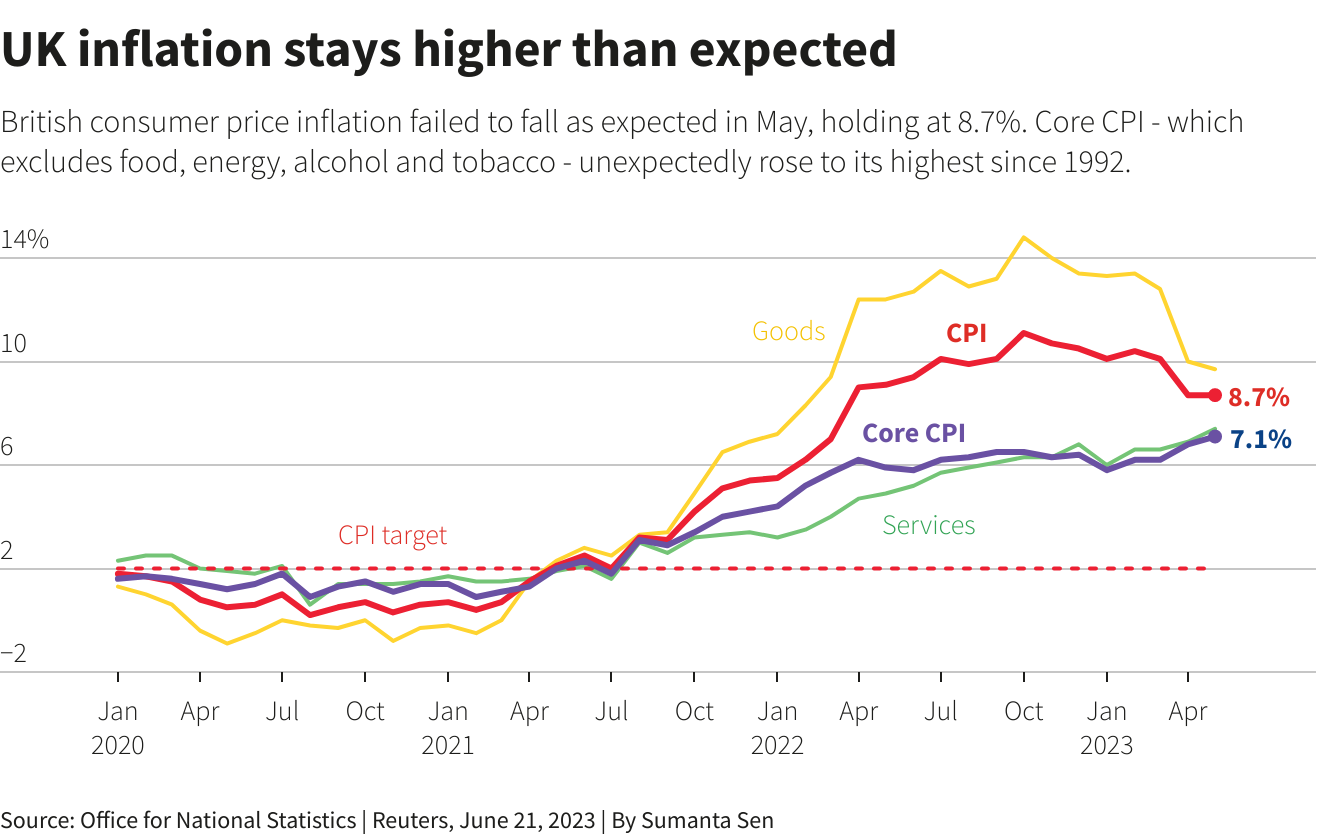

Uk Inflation Slows Impact On Boe Rate Cuts And The Pound

May 23, 2025

Uk Inflation Slows Impact On Boe Rate Cuts And The Pound

May 23, 2025 -

Is Succession Sky Atlantic Hd Worth The Hype A Critical Analysis

May 23, 2025

Is Succession Sky Atlantic Hd Worth The Hype A Critical Analysis

May 23, 2025 -

Burclar Ve Zeka Hangi Burclar Daha Zekidir

May 23, 2025

Burclar Ve Zeka Hangi Burclar Daha Zekidir

May 23, 2025 -

Canada Post Strike Averted Details On The New Offer

May 23, 2025

Canada Post Strike Averted Details On The New Offer

May 23, 2025 -

Unbeaten Half Century By Shanto Bangladeshs Advantage In Rain Shortened Game

May 23, 2025

Unbeaten Half Century By Shanto Bangladeshs Advantage In Rain Shortened Game

May 23, 2025