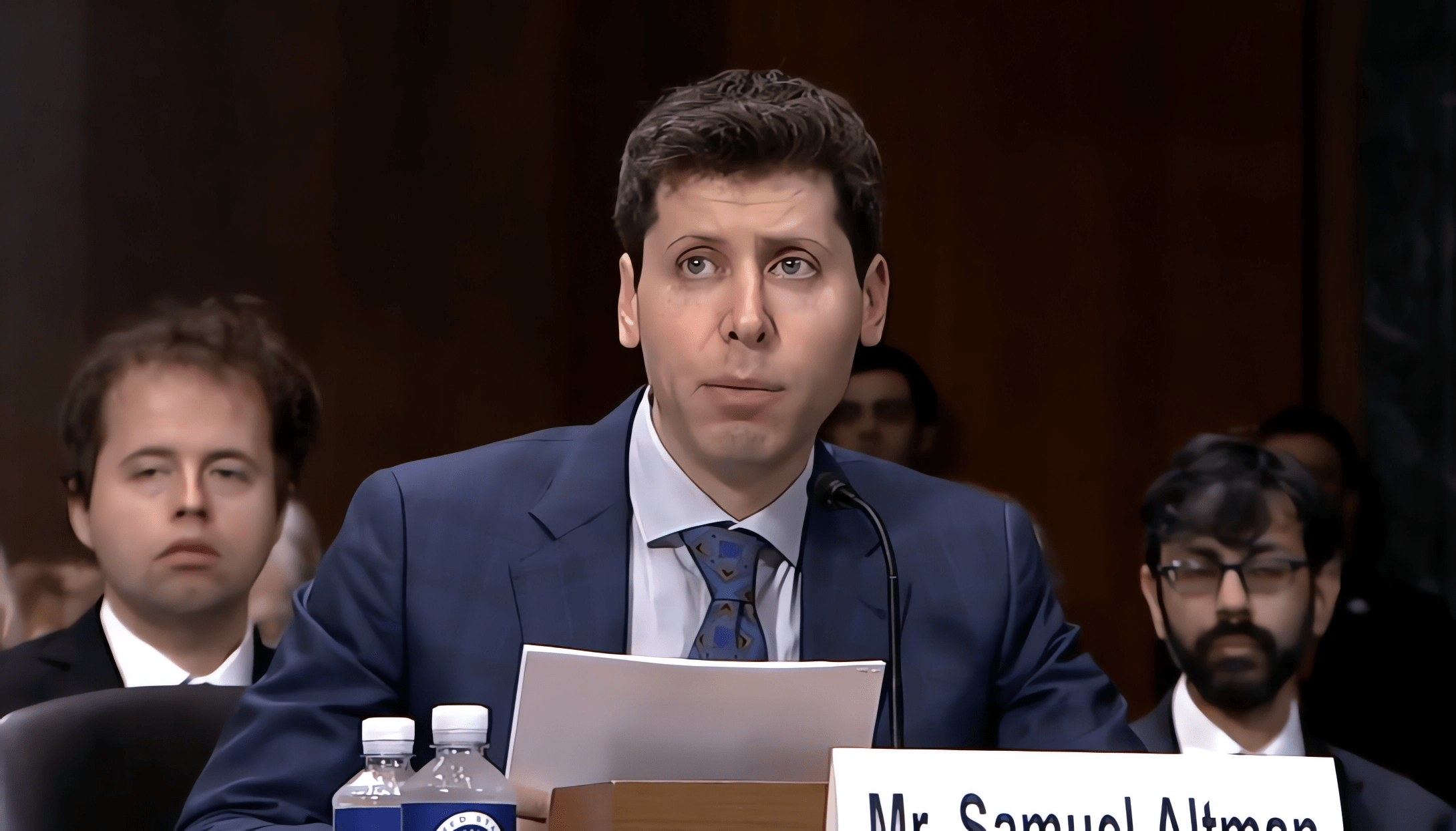

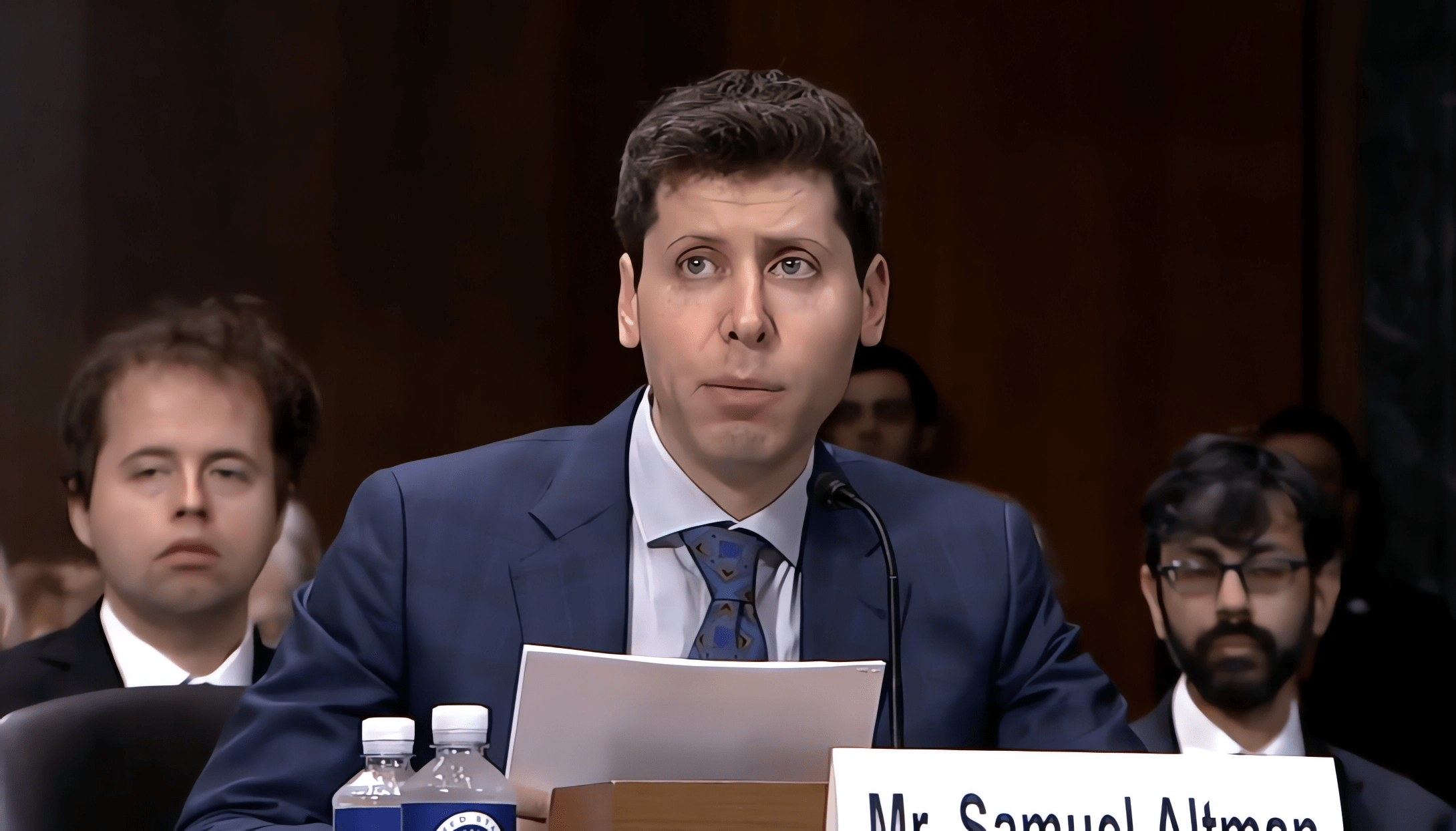

FTC Investigates OpenAI's ChatGPT: What It Means For AI Development

The FTC's Concerns Regarding ChatGPT and Data Privacy

The FTC's investigation into OpenAI centers on concerns surrounding ChatGPT's data handling practices and potential violations of consumer protection laws. The agency is scrutinizing whether OpenAI's collection, use, and storage of personal data comply with existing regulations, particularly focusing on potential unfair or deceptive trade practices.

- Unfair or deceptive trade practices related to data handling: The FTC is investigating whether OpenAI adequately informs users about how their data is collected, used, and protected. This includes examining the transparency of OpenAI's data policies and whether users have sufficient control over their data.

- Potential violations of COPPA (Children's Online Privacy Protection Act): Given ChatGPT's accessibility and potential use by children, the FTC is likely assessing whether OpenAI complies with COPPA's stringent requirements for protecting children's personal information online.

- Concerns about the accuracy and bias in the data used to train ChatGPT: The FTC may be examining the data sources used to train ChatGPT, investigating potential biases embedded within the data and the resulting impact on the chatbot's outputs. Accuracy and fairness in AI models are crucial considerations for the FTC.

- Lack of transparency regarding data usage: It is unclear how user data is used to improve ChatGPT's performance, and whether that use aligns with user expectations and consent.

The penalties OpenAI could face are substantial, ranging from financial fines to restrictions on its operations. The case may also set an important precedent for other AI companies, underscoring the need for robust data privacy measures and transparency in AI development.

Impact on AI Development and Innovation

The FTC's investigation into OpenAI's ChatGPT is likely to have a significant impact on the pace and direction of AI development. While some fear a chilling effect on innovation, others see it as a necessary step towards responsible AI practices.

- Increased regulatory scrutiny on AI models: The investigation signals a broader trend toward increased government oversight of AI models and their potential societal impacts. Expect more stringent regulations and compliance requirements across the AI industry.

- Slowdown in the release of new AI technologies: Companies may adopt a more cautious approach to releasing new AI technologies, prioritizing thorough risk assessments and compliance checks to avoid regulatory penalties.

- Higher development costs due to increased compliance requirements: The costs of ensuring data privacy, addressing bias, and complying with future regulations are likely to raise AI development expenses.

- Focus shift towards more ethical and responsible AI development: The investigation encourages a shift in priorities, emphasizing ethical considerations and responsible AI practices alongside technological advancements.

However, the investigation can also foster positive change. Increased scrutiny may lead to the development of better data privacy practices, more robust AI models, and a stronger focus on user rights.

The Future of AI Regulation in the Wake of the ChatGPT Investigation

The FTC's actions against OpenAI are likely to accelerate the development of AI regulations globally. The investigation highlights the urgent need for clear guidelines and oversight to manage the risks associated with powerful AI technologies.

- Increased government oversight of AI algorithms: Expect more government intervention in the design, development, and deployment of AI algorithms, focusing on transparency, accountability, and fairness.

- The need for standardized ethical guidelines for AI development: International collaboration is crucial to establish widely accepted ethical guidelines for AI development and deployment, ensuring consistency and preventing regulatory fragmentation.

- Establishment of independent AI auditing bodies: Such bodies may be created to audit AI systems for bias, fairness, and compliance with data privacy regulations, providing an external assessment of AI model risks.

- Potential for new legislation specifically targeting large language models (LLMs): Given the unique capabilities and potential risks of LLMs like ChatGPT, expect the emergence of new legislation specifically tailored to regulate this rapidly evolving area of AI.

International cooperation will be essential in harmonizing AI regulations and preventing a patchwork of conflicting laws that could stifle innovation and create uneven playing fields for AI developers.

Responsible AI Development: Lessons Learned from the ChatGPT Investigation

The FTC's investigation underscores the critical importance of responsible AI development practices. Prioritizing ethical considerations from the outset is no longer optional but a necessity.

- Prioritizing data privacy and security: Implementing robust data privacy and security measures should be paramount in all stages of AI development, ensuring compliance with relevant regulations and protecting user data.

- Addressing bias and ensuring fairness in AI systems: Developers must actively work to identify and mitigate bias in their AI systems, ensuring fairness and equity in their outcomes.

- Implementing robust testing and validation processes: Thorough testing and validation are critical to identify and address potential problems before AI systems are deployed, minimizing the risk of unintended consequences.

- Promoting transparency and user control over data: Transparency is crucial. Users need to understand how their data is being used and have control over their personal information.

By adopting these best practices, AI developers can help mitigate the risks associated with AI technologies and build trust with users and regulators.

Conclusion: Navigating the Future of AI Development Post-ChatGPT Investigation

The FTC's investigation into OpenAI's ChatGPT marks a pivotal moment for the AI industry. It highlights the FTC's concerns about data privacy, the potential for unfair or deceptive trade practices, and the need for greater regulatory oversight. Whatever its outcome, the case is likely to influence the future of AI development, bringing increased scrutiny, higher development costs, and a greater focus on ethical and responsible AI practices. It may well become a landmark in shaping AI regulation and in emphasizing the need for responsible innovation.

To navigate this evolving landscape, it's essential to stay informed about developments in AI regulation, advocate for responsible AI practices, and take part in the ongoing conversation about the ethical implications of AI technologies. Consulting FTC guidance on data privacy, resources on AI ethics, and best practices for responsible AI development is highly recommended. The future of AI depends on our collective commitment to building AI systems that are both innovative and ethically sound.
