Understanding AI's Learning Limitations For Ethical And Responsible Use

Data Bias and its Impact on AI Learning

AI systems, at their core, are learning machines. They learn from the data they are fed during training. This means that if the training data reflects existing societal biases, whether conscious or unconscious, the resulting AI system is likely to inherit those biases and can even amplify them. This phenomenon, known as AI bias or algorithmic bias, has far-reaching consequences: it takes many forms and can lead to unfair and discriminatory outcomes.

AI bias is a significant problem across various applications:

- Facial recognition systems: Studies have shown that these systems exhibit higher error rates for individuals with darker skin tones, highlighting the impact of biased datasets in the training process.

- Loan applications: AI-powered loan applications may disproportionately deny loans to certain demographic groups if the training data reflects historical lending practices that discriminated against those groups.

- Recruitment processes: AI-driven recruitment tools may perpetuate gender or racial biases present in historical hiring data, leading to unfair and discriminatory outcomes.
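One way to make disparities like these visible is to break a model's error rate down by group rather than reporting a single overall figure. The following is a minimal sketch under stated assumptions: the function name `error_rate_by_group`, the group labels "a" and "b", and the toy labels and predictions are all hypothetical and not taken from any real system.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: fraction of misclassified examples in that group}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy data: true labels, model predictions, and a group per example.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = error_rate_by_group(y_true, y_pred, groups)
print(rates)  # {'a': 0.0, 'b': 0.5}
```

In this toy data the overall error rate is 25%, but every mistake falls on group "b". A single aggregate accuracy number would hide that gap.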

Mitigation strategies are essential to address AI bias:

- Data augmentation: Supplementing biased datasets with more representative data can help reduce bias.

- Bias detection algorithms: Developing sophisticated algorithms to identify and quantify biases within datasets is crucial.

- Diverse and representative datasets: Ensuring that training data accurately reflects the diversity of the population is paramount. This includes considering factors like gender, race, ethnicity, age, and socioeconomic status.
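As a rough illustration of what a simple bias detection check might look like, the sketch below compares how often each group receives a positive decision and reports the ratio between the lowest and highest rate. The function names, the toy decisions, and the 0.8 cutoff are assumptions made for illustration. The cutoff echoes the "four-fifths" rule of thumb sometimes used in employment contexts and is not a universal standard.

```python
def selection_rates(decisions, groups):
    """Return {group: share of positive decisions} for each group."""
    counts = {}
    for decision, group in zip(decisions, groups):
        approved, total = counts.get(group, (0, 0))
        counts[group] = (approved + (1 if decision else 0), total + 1)
    return {group: approved / total for group, (approved, total) in counts.items()}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity
    return min(rates.values()) / highest


# Hypothetical loan decisions (1 = approved) for two groups of four applicants.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # illustrative threshold, an assumption of this sketch
```

A check like this only measures one notion of fairness (equal selection rates). Other definitions, such as equal error rates across groups, can disagree with it, so the choice of metric is itself a design decision.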

The Limitations of Current AI Architectures

Many current AI architectures, particularly deep learning models, operate as "black boxes." This means it is very difficult to understand the internal processes by which these models arrive at their conclusions. This lack of transparency is the problem that explainable AI (XAI) research sets out to address, and it is a serious hurdle to accountability. Understanding how a system reaches a particular decision is vital for identifying errors and biases, ensuring fairness, and building trust.

Key challenges related to AI architecture limitations:

- Deep learning models' opacity: The complexity of deep learning models often makes it impractical to trace the reasoning behind an individual prediction, which in turn makes it hard to pinpoint the source of errors or biases.

- Lack of transparency: The inability to understand the decision-making process of an AI system can erode trust and hinder its acceptance by users and stakeholders.

- Explainable AI (XAI): Research in XAI aims to develop more interpretable and transparent AI models, but this remains a significant challenge.

- The need for interpretable models: For ethical and responsible use of AI, especially in high-stakes applications, transparent and readily interpretable models are crucial.
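One model-agnostic way to get some insight into a black-box model is permutation importance: shuffle one input feature at a time and measure how much accuracy drops. The sketch below is a minimal illustration, not a full XAI method. The `black_box` function stands in for an opaque model, and it, the helper names, and the synthetic data are all hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced by shuffled values.
            shuffled = [row[:j] + [value] + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


def black_box(row):
    """Hypothetical opaque model: secretly uses only the first feature."""
    return 1 if row[0] > 0.5 else 0


# Synthetic data: two random features, labels produced by the model itself.
data_rng = random.Random(1)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [black_box(row) for row in rows]

importances = permutation_importance(black_box, rows, labels, n_features=2)
```

Here the second feature gets an importance of exactly zero because the model never reads it, while shuffling the first feature causes a large accuracy drop. Scores like these show which inputs a model relies on, but not why, so they complement interpretable models rather than replace them.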

The Problem of Generalization and Out-of-Distribution Data

AI models are typically trained on large datasets representing specific tasks or domains. However, real-world scenarios are rarely confined to the narrow parameters of these training datasets. When presented with out-of-distribution data, meaning data that differs significantly from the training data, AI models can struggle to generalize their knowledge and make accurate predictions. This limitation raises concerns about the robustness and reliability of these systems.

Challenges related to generalization and out-of-distribution data:

- Model overfitting: Overfitting occurs when a model fits noise or quirks of the training data, so that it performs exceptionally well on the training data but poorly on unseen data, indicating a failure to generalize.

- Poor performance on unseen data: AI models may produce unreliable or inaccurate results when faced with novel or unexpected situations.

- Robustness is crucial: For AI systems to be truly reliable and safe, they must be robust enough to handle variations and uncertainties in real-world conditions.

- Techniques for improving generalization: Strategies such as data augmentation, transfer learning, and adversarial training can help improve the generalization capabilities of AI models.
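A very simple guard against out-of-distribution inputs is to record per-feature statistics from the training data and flag any new input that falls far outside them. The sketch below uses a z-score check. The function names, the toy training rows, and the threshold of 3.0 are assumptions for illustration, and the check assumes every feature varies in the training data (a standard deviation of zero would cause a division by zero).

```python
import statistics


def fit_feature_stats(train_rows):
    """Return a (mean, standard deviation) pair for each feature column."""
    columns = list(zip(*train_rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def max_abs_z(row, stats):
    """Largest absolute z-score of any feature in the row."""
    # Assumes every std is non-zero, i.e. each feature varies in training data.
    return max(abs(x - mean) / std for x, (mean, std) in zip(row, stats))


def is_out_of_distribution(row, stats, threshold=3.0):
    """Flag rows with a feature more than `threshold` std devs from the mean."""
    return max_abs_z(row, stats) > threshold


# Hypothetical training data with two numeric features.
train_rows = [[1.0, 10.0], [2.0, 12.0], [3.0, 11.0], [2.0, 9.0], [2.0, 13.0]]
stats = fit_feature_stats(train_rows)

typical = is_out_of_distribution([2.0, 11.0], stats)   # close to training data
unusual = is_out_of_distribution([10.0, 11.0], stats)  # first feature far off
```

A per-feature check like this catches only crude shifts. It will miss inputs whose individual features look normal but whose combination is unusual, so it is best treated as a first line of defense before routing flagged inputs to a fallback or a human reviewer.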

The Ethical Implications of AI Learning Limitations

The limitations discussed above have profound ethical implications. Understanding these limitations is not merely a technical challenge; it’s a fundamental prerequisite for the responsible development and deployment of AI systems. Failure to address these limitations can lead to unfairness, discrimination, and a significant erosion of public trust.

Key ethical considerations concerning AI learning limitations:

- Unintended consequences: A lack of understanding of AI limitations can lead to unforeseen and potentially harmful consequences.

- Ethical guidelines and frameworks: The development of clear ethical guidelines and frameworks is crucial to guide the responsible use of AI.

- AI governance and regulation: Robust governance frameworks and regulations are needed to ensure accountability and mitigate risks.

- Promoting responsible AI: Education and awareness are crucial for promoting responsible AI practices among developers, users, and the public.

Conclusion: Navigating the Path to Ethical and Responsible AI

Understanding AI learning limitations – including data bias, architectural limitations, and the challenges of generalization – is not an impediment to AI innovation, but rather a crucial step towards its ethical and responsible development. By acknowledging these constraints, we can work toward creating AI systems that are fair, transparent, reliable, and ultimately beneficial to society. Further research into these limitations, coupled with a strong ethical framework, is essential to ensure the safe and beneficial integration of AI into our lives. Learn more about mitigating the risks associated with AI learning limitations and contribute to the development of ethical and responsible AI. Engage in discussions about AI ethics and advocate for responsible AI practices. The future of AI hinges on our ability to address these challenges proactively.

Featured Posts

-

Delaying Ecb Rate Cuts Economists Issue Warning

May 31, 2025

Delaying Ecb Rate Cuts Economists Issue Warning

May 31, 2025 -

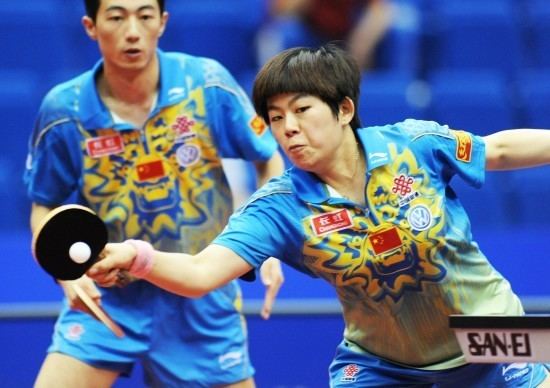

Table Tennis Worlds Wang Sun Secure Third Straight Mixed Doubles Gold

May 31, 2025

Table Tennis Worlds Wang Sun Secure Third Straight Mixed Doubles Gold

May 31, 2025 -

Major East London High Street Fire Requires Over 100 Firefighters

May 31, 2025

Major East London High Street Fire Requires Over 100 Firefighters

May 31, 2025 -

Cocaine Found At White House Secret Service Ends Probe

May 31, 2025

Cocaine Found At White House Secret Service Ends Probe

May 31, 2025 -

Wildfires Ignite Early Canada And Minnesota On High Alert

May 31, 2025

Wildfires Ignite Early Canada And Minnesota On High Alert

May 31, 2025