New CNIL Guidelines On AI: A Practical Guide For Compliance

Understanding the Scope of the New CNIL AI Guidelines

The CNIL's updated guidelines provide crucial clarity on AI regulation in France. Understanding their scope is the first step toward compliance.

Defining "AI" within the CNIL Framework:

The CNIL framework does not offer a single, rigid definition of AI. Instead, it looks at what a system does. The guidelines therefore apply to a wide range of technologies, in particular systems that make automated decisions or that process personal data in a significant way.

- Examples of AI systems included: Machine learning algorithms, deep learning networks used for image recognition, natural language processing systems (e.g., chatbots), recommendation engines, and automated decision-making systems in areas like loan applications or recruitment.

- Exclusion criteria: Simple rule-based systems without learning capabilities generally fall outside the scope. However, the line can be blurry, and it’s best to err on the side of caution.

- Specific mention of high-risk AI systems: The CNIL guidelines pay particular attention to AI systems deemed high-risk, such as those used in law enforcement, healthcare, or credit scoring. These systems face heightened scrutiny and require more stringent compliance measures.

Key Principles for AI Development and Deployment:

The CNIL guidelines are built upon several key principles, ensuring ethical and responsible AI development. These principles are not independent but rather interconnected and complementary.

- Purpose limitation: AI systems should only be used for pre-defined, legitimate purposes specified during the design and development phase. Avoid using data for purposes not explicitly stated.

- Data minimization: Collect and process only the minimum amount of personal data necessary to achieve the defined purpose. Avoid data over-collection.

- Accuracy: Ensure the data used to train and operate AI systems is accurate, complete, and up-to-date. Regularly audit data quality.

- Security: Implement robust security measures to protect AI systems and the personal data they process from unauthorized access, use, disclosure, alteration, or destruction. This includes encryption and access controls.

Data Protection and Privacy in the Age of AI: Adherence to GDPR

The CNIL AI guidelines are firmly rooted in the General Data Protection Regulation (GDPR). Understanding GDPR is essential for compliance.

Data Minimization and Purpose Limitation:

Implementing data minimization and respecting purpose limitation is critical for responsible AI.

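- Strategies for data minimization: Use pseudonymisation or anonymisation techniques wherever possible, keeping in mind that pseudonymised data remains personal data under the GDPR while properly anonymised data does not. Employ differential privacy methods to protect individual identities.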

- Techniques for defining precise purposes: Clearly articulate the specific goals of your AI system. Document these purposes and ensure all data processing activities directly support them.

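- Methods for obtaining informed consent: Where consent is the legal basis for processing (the GDPR also provides others, such as contract or legitimate interests), secure freely given, specific, informed, and unambiguous consent from individuals before collecting and processing their personal data for AI purposes.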
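The data minimization and purpose limitation strategies above can be sketched in a few lines of Python. This is a minimal illustration, not a CNIL-prescribed method: the `ALLOWED_FIELDS` mapping, the `credit_scoring` purpose, and the field names are all hypothetical, and keyed hashing with HMAC-SHA256 is just one common pseudonymisation technique.

```python
import hashlib
import hmac

# Hypothetical mapping from each documented purpose to the fields it needs.
ALLOWED_FIELDS = {
    "credit_scoring": {"customer_id", "income", "outstanding_debt"},
}


def pseudonymise(value: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise(record: dict, purpose: str, secret_key: bytes) -> dict:
    """Keep only the fields documented for the purpose and pseudonymise the ID."""
    allowed = ALLOWED_FIELDS[purpose]  # KeyError if the purpose is undocumented
    kept = {name: value for name, value in record.items() if name in allowed}
    if "customer_id" in kept:
        kept["customer_id"] = pseudonymise(str(kept["customer_id"]), secret_key)
    return kept


if __name__ == "__main__":
    raw = {
        "customer_id": "C123",
        "name": "Alice Example",
        "email": "alice@example.com",
        "income": 4200,
        "outstanding_debt": 100,
    }
    # In practice the key would come from a secrets manager, not source code.
    print(minimise(raw, "credit_scoring", b"demo-key-only"))
```

Because the digest can be recomputed by anyone holding the key, the output is pseudonymised rather than anonymised, so the key itself needs to be stored separately and protected.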

Transparency and Explainability of AI Systems:

The "black box" nature of some AI systems is a major concern. The CNIL emphasizes explainability.

- Techniques for creating transparent AI systems: Use interpretable machine learning models where possible. Document the design, training data, and decision-making process of your AI system.

- Methods for communicating AI processes to users: Provide clear and accessible explanations to individuals about how the AI system processes their data and what decisions it makes. Consider using plain language and visual aids.

- Importance of providing redress mechanisms: Establish clear procedures for individuals to challenge AI-driven decisions and seek redress if necessary.
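As one way to picture what an "interpretable model" offers, the sketch below uses a simple linear score and reports how much each input contributed to the result. The weights, bias, and feature names are invented for illustration; a real system would use its own trained parameters and might rely on a different explanation technique altogether.

```python
# Hypothetical parameters of a linear scoring model (not from any real system).
WEIGHTS = {"income_k_eur": 0.5, "late_payments": -2.0, "years_employed": 0.25}
BIAS = 1.0


def explain_score(features: dict) -> dict:
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"score": score, "contributions": ranked}


if __name__ == "__main__":
    result = explain_score({"income_k_eur": 4.0, "late_payments": 1, "years_employed": 10})
    print(f"Score: {result['score']}")
    for name, value in result["contributions"]:
        direction = "raised" if value > 0 else "lowered"
        print(f"- {name} {direction} the score by {abs(value)}")
```

The ranked contributions can then be turned into the plain-language explanation given to the individual, for example by naming the two or three factors that weighed most on the outcome.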

Automated Decision-Making and Rights of Individuals:

Automated individual decision-making (AIDM), which is addressed by Article 22 of the GDPR, requires careful consideration of individual rights.

- Strategies for mitigating risks associated with AIDM: Implement human oversight to review and potentially override automated decisions, especially in high-stakes situations. Conduct regular audits of AIDM systems.

- Methods for ensuring human oversight: Establish clear roles and responsibilities for human review of automated decisions. Ensure humans have the authority to intervene and correct errors.

- Procedures for handling challenges to automated decisions: Create a transparent and accessible process for individuals to challenge AI-driven decisions and request an explanation.
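The human oversight described above could be wired into a decision pipeline roughly as follows. This is a sketch under stated assumptions: the `Decision` structure, the `REVIEW_THRESHOLD` value, and the rule that every adverse outcome goes to a reviewer are illustrative choices, not requirements taken from the CNIL guidelines.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.9  # hypothetical confidence cut-off


@dataclass
class Decision:
    subject_id: str
    outcome: str                      # "approve" or "reject"
    confidence: float                 # model confidence in [0, 1]
    final: bool = False
    reviewed_by: Optional[str] = None


def route(decision: Decision) -> str:
    """Send adverse or low-confidence decisions to a human; finalise the rest."""
    if decision.outcome == "reject" or decision.confidence < REVIEW_THRESHOLD:
        return "human_review"
    decision.final = True
    return "auto"


def human_override(decision: Decision, reviewer: str, new_outcome: str) -> Decision:
    """Record the reviewer's outcome, which replaces the automated one."""
    decision.outcome = new_outcome
    decision.reviewed_by = reviewer
    decision.final = True
    return decision


if __name__ == "__main__":
    loan = Decision(subject_id="A-17", outcome="reject", confidence=0.97)
    if route(loan) == "human_review":
        human_override(loan, reviewer="analyst_42", new_outcome="approve")
    print(loan)
```

Keeping `reviewed_by` on the record gives an audit trail showing which decisions a person actually examined, which helps when an individual later challenges the outcome.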

Practical Steps for CNIL AI Guideline Compliance

Achieving compliance requires a proactive and multi-faceted approach.

Conducting a Data Protection Impact Assessment (DPIA):

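Under Article 35 of the GDPR, a DPIA is required whenever processing is likely to result in a high risk to individuals' rights and freedoms, which is often the case for high-risk AI systems.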

- Steps involved in a DPIA: Identify the AI system, assess the risks to individuals' rights and freedoms, implement appropriate mitigation measures, document the assessment, and monitor its effectiveness.

- Factors to consider: The type of data processed, the sensitivity of the data, the nature of the automated decision-making, and the potential impact on individuals.

- Documentation requirements: Maintain detailed records of the DPIA, including the methodology used, the identified risks, and the implemented mitigation measures.
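To make the risk assessment and documentation steps more concrete, here is a minimal sketch of a DPIA risk register. The 1 to 4 scales, the likelihood-times-severity score, and the threshold of 8 are simplifying assumptions chosen for illustration; they are not the CNIL's official methodology.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    description: str
    likelihood: int                   # 1 (remote) to 4 (very likely), assumed scale
    severity: int                     # 1 (negligible) to 4 (maximum), assumed scale
    mitigations: list = field(default_factory=list)

    @property
    def score(self) -> int:
        """Simple likelihood x severity score (an assumed convention)."""
        return self.likelihood * self.severity


def risks_needing_action(register: list, threshold: int = 8) -> list:
    """Return unmitigated risks at or above the threshold, highest score first."""
    pending = [r for r in register if r.score >= threshold and not r.mitigations]
    return sorted(pending, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Re-identification from training data", likelihood=2, severity=4),
        Risk("Biased outcomes in recruitment scoring", likelihood=3, severity=4),
        Risk("Unauthorised access to model logs", likelihood=3, severity=3,
             mitigations=["Role-based access control"]),
        Risk("Minor UI wording issue", likelihood=2, severity=1),
    ]
    for risk in risks_needing_action(register):
        print(risk.score, risk.description)
```

Storing the register as structured data rather than free text makes it easier to keep the DPIA record up to date and to show which risks still lack a mitigation measure.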

Implementing Appropriate Technical and Organizational Measures:

Robust security is crucial.

- Specific examples of technical and organizational measures: Data encryption, access control mechanisms, regular security audits, staff training, and incident response plans.

- Best practices for data security: Implement a layered security approach, regularly update software and systems, and conduct penetration testing to identify vulnerabilities.

- Compliance checklist: Create a comprehensive checklist to ensure all necessary technical and organizational measures are in place and functioning effectively.
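A compliance checklist is easier to keep current when it is stored as data and checked automatically. The sketch below flags measures that have never been verified or whose last verification is too old. The measure names and review intervals are hypothetical examples, not values prescribed by the CNIL.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Measure:
    name: str
    last_verified: Optional[date]     # None means the measure was never verified
    max_age_days: int                 # assumed review interval


def overdue(measures: list, today: date) -> list:
    """Return the names of measures that are unverified or past their interval."""
    flagged = []
    for measure in measures:
        if measure.last_verified is None:
            flagged.append(measure.name)
        elif today - measure.last_verified > timedelta(days=measure.max_age_days):
            flagged.append(measure.name)
    return flagged


if __name__ == "__main__":
    checklist = [
        Measure("Encryption at rest", date(2025, 5, 1), max_age_days=90),
        Measure("Penetration test", date(2024, 1, 1), max_age_days=365),
        Measure("Staff training", None, max_age_days=365),
    ]
    print(overdue(checklist, today=date(2025, 6, 1)))
```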

Ongoing Monitoring and Improvement:

Compliance is not a one-time event.

- Key performance indicators (KPIs) to monitor: Accuracy of AI decisions, fairness of outcomes, data breaches, and user complaints.

- Methods for identifying and addressing compliance gaps: Regularly review the AI system’s performance, conduct audits, and gather feedback from users.

- Processes for updating systems: Establish a clear process for updating the AI system and its associated documentation to reflect changes in technology, regulations, or business needs.
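Two of the KPIs listed above, accuracy and fairness of outcomes, can be computed from logged decisions. The sketch below uses the gap in positive-outcome rates between groups (often called the demographic parity difference) as the fairness measure. That choice is an assumption made for illustration: other fairness metrics exist, and the guidelines do not mandate a specific one.

```python
def accuracy(y_true: list, y_pred: list) -> float:
    """Share of predictions that match the known correct outcome."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def selection_rates(y_pred: list, groups: list) -> dict:
    """Rate of positive outcomes (prediction == 1) for each group."""
    totals, positives = {}, {}
    for prediction, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if prediction == 1 else 0)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(y_pred: list, groups: list) -> float:
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0, 1, 0]
    y_pred = [1, 1, 1, 0, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print("accuracy:", accuracy(y_true, y_pred))
    print("selection rates:", selection_rates(y_pred, groups))
    print("parity gap:", demographic_parity_gap(y_pred, groups))
```

Tracking these figures over time, alongside counts of data breaches and user complaints, gives an early signal that the system needs a review or retraining.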

Conclusion

The new CNIL AI guidelines represent a significant step towards responsible AI development and deployment. By understanding and adhering to them, organizations can mitigate risks, protect user data, and foster trust in AI technologies. This practical guide has outlined the key aspects of compliance, from understanding the scope to implementing the necessary technical and organizational measures. Because the guidelines will continue to evolve, ongoing monitoring and adaptation are essential to staying compliant.

Featured Posts

-

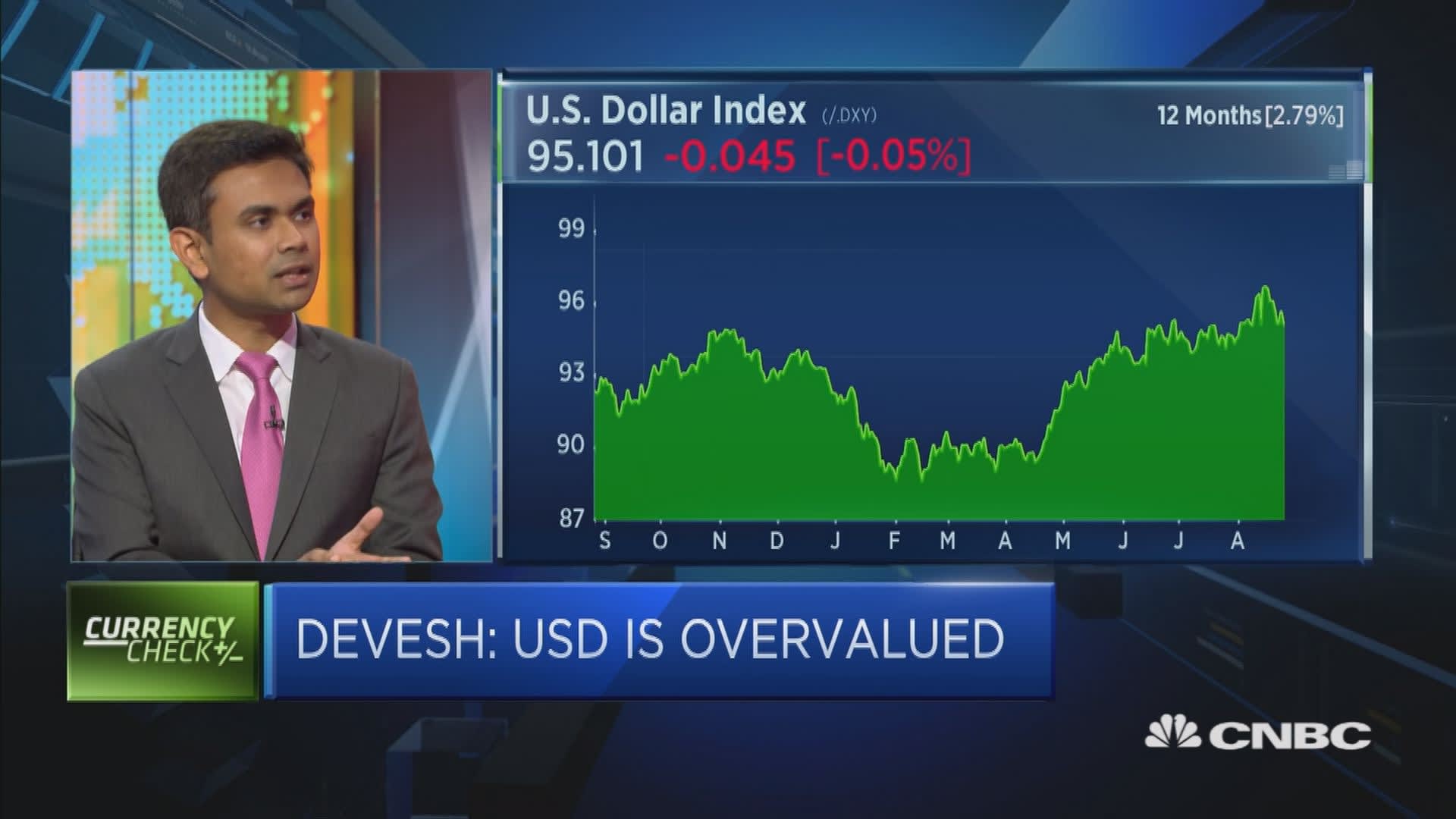

Minority Government Could Weaken Canadian Dollar Expert Analysis

Apr 30, 2025

Minority Government Could Weaken Canadian Dollar Expert Analysis

Apr 30, 2025 -

Feltri Sul Venerdi Santo Fede Dubbio E Riflessione

Apr 30, 2025

Feltri Sul Venerdi Santo Fede Dubbio E Riflessione

Apr 30, 2025 -

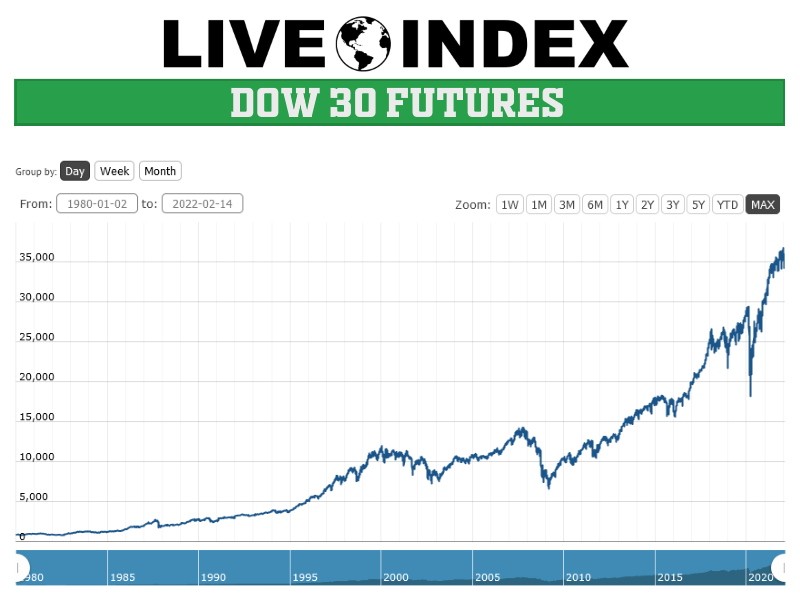

Live Stock Market Updates Dow Futures Key Earnings And Market Trends

Apr 30, 2025

Live Stock Market Updates Dow Futures Key Earnings And Market Trends

Apr 30, 2025 -

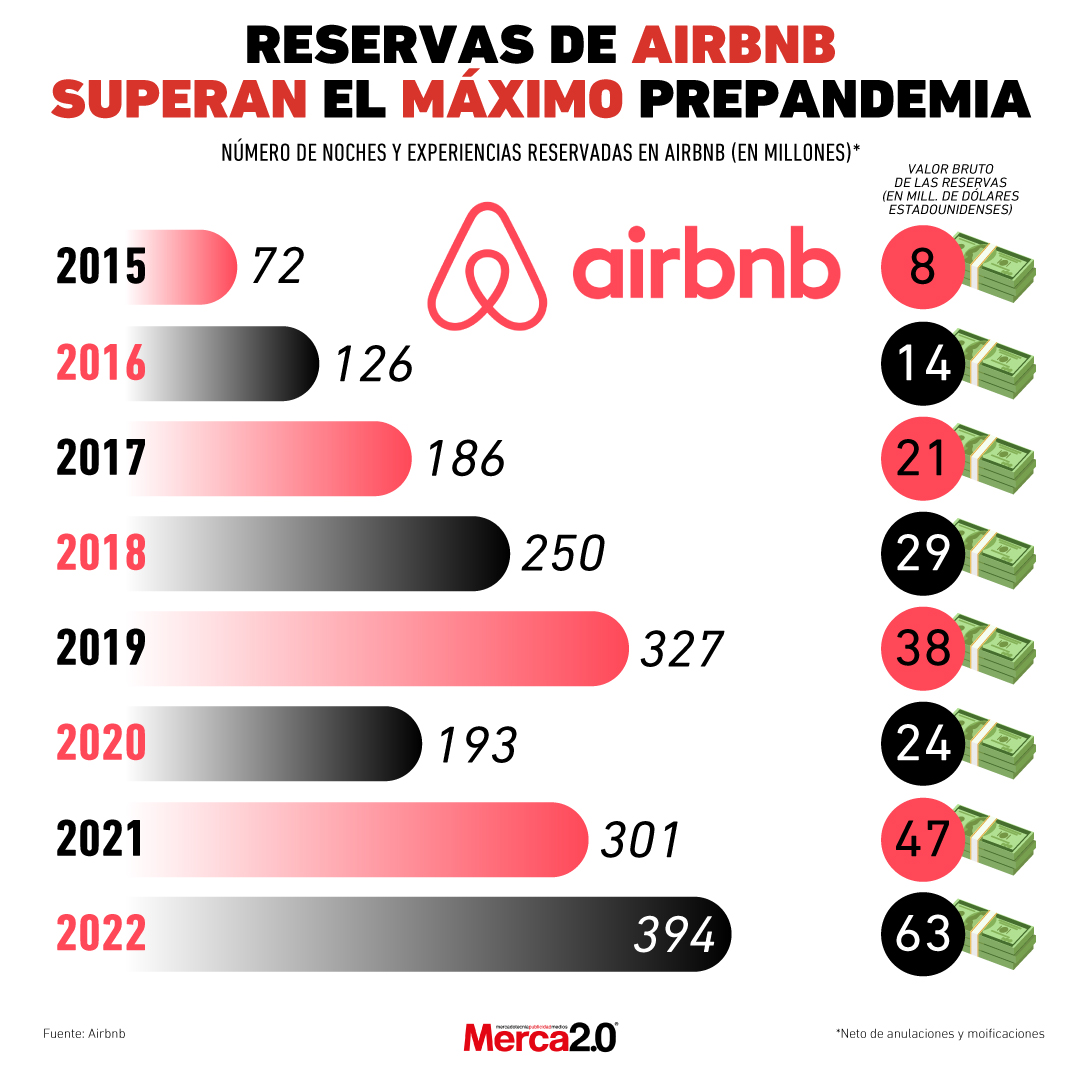

Canadian Staycations Soar Airbnb Bookings Up 20

Apr 30, 2025

Canadian Staycations Soar Airbnb Bookings Up 20

Apr 30, 2025 -

Rqm Qyasy Swysry Jdyd Shebyt Alraklyt Ttzayd

Apr 30, 2025

Rqm Qyasy Swysry Jdyd Shebyt Alraklyt Ttzayd

Apr 30, 2025