OpenAI Faces FTC Probe: Examining The Future Of AI Accountability

The FTC Investigation: What We Know So Far

The FTC's investigation into OpenAI is focused on potential violations of consumer protection laws. While details remain limited due to the ongoing nature of the probe, the agency's concerns center on several key areas critical to AI accountability.

- Potential Violations of Consumer Protection Laws: The FTC is examining whether OpenAI's practices violate consumer protection laws, in particular whether they harm consumers through unfair or deceptive practices related to its AI models.

- Data Privacy Concerns: A major concern revolves around the vast amounts of data used to train OpenAI's models. The FTC is likely scrutinizing OpenAI's data collection practices, assessing whether they comply with existing privacy regulations and whether sufficient safeguards are in place to protect user data. This includes examining the transparency of data usage and consent mechanisms.

- Biased Outputs and Potential for Harm: The potential for AI bias to perpetuate societal inequalities is another critical concern. The FTC is likely investigating whether OpenAI has taken adequate steps to mitigate bias in its models and prevent the dissemination of harmful or discriminatory content. This involves assessing the fairness and equity of the algorithms and the potential for unintended consequences.

- Impact on OpenAI's Future: The outcome of this investigation could materially affect OpenAI's future operations, potentially leading to substantial fines, required changes to its development practices, and a reassessment of its AI safety protocols. The investigation highlights the increasing pressure on AI companies to prioritize ethical considerations alongside technological advancements.

The Growing Need for AI Accountability

The OpenAI investigation underscores a broader, critical need for AI accountability within the tech industry. The ethical dilemmas posed by increasingly powerful AI models are complex and far-reaching.

- Ethical Dilemmas: AI systems, particularly large language models like those developed by OpenAI, present profound ethical challenges. These include the potential for bias amplification, the spread of misinformation, and the displacement of workers. Addressing these ethical issues is paramount for ensuring the responsible development and deployment of AI.

- The Importance of Regulatory Frameworks: The lack of clear and comprehensive regulatory frameworks for AI development creates significant risks. Establishing robust regulatory structures is crucial for promoting responsible innovation and mitigating potential harms associated with AI. This requires a balanced approach that fosters innovation while protecting consumers and society.

- AI Misuse and Safeguards: The potential for AI misuse, whether intentional or unintentional, is a significant concern. Strong safeguards, including robust security measures and ethical guidelines, are needed to prevent malicious applications and unintended negative consequences. This includes establishing clear lines of accountability for the use and misuse of AI systems.

Defining Responsible AI Development

Responsible AI development requires a fundamental shift in how AI systems are designed, developed, and deployed. This necessitates the adoption of key principles and best practices.

- Data Privacy and Security: Stringent data privacy and security measures are crucial. This involves implementing robust data protection protocols, obtaining informed consent, and ensuring the confidentiality and integrity of user data. Data minimization and anonymization techniques further reduce the amount of personal information exposed to risk.

- Fairness, Transparency, and Explainability: AI systems should be designed and developed to be fair, transparent, and explainable. This requires algorithms to be free from bias, their decision-making processes to be understandable, and their outcomes to be justifiable. This enhances trustworthiness and allows for proper oversight.

- Robust Testing and Validation: Thorough testing and validation processes are essential to identify and mitigate potential risks associated with AI systems. This includes rigorous testing for bias, safety, and security vulnerabilities, as well as ongoing monitoring and evaluation after deployment.
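As one illustration of what "rigorous testing for bias" can mean in practice, the minimal sketch below computes a demographic parity gap: the difference between the highest and lowest rate of favorable outcomes across groups. It is an assumption-laden example, not a description of any method used by OpenAI or required by the FTC. The function names, the group labels, and the sample data are all hypothetical, and demographic parity is only one of several fairness measures a real audit might use.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group_label, outcome) pairs, where
    outcome is truthy for a favorable decision. Hypothetical format.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions for two illustrative groups, "a" and "b".
sample = [
    ("a", 1), ("a", 1), ("a", 0), ("a", 1),
    ("b", 1), ("b", 0), ("b", 0), ("b", 0),
]

rates = selection_rates(sample)
gap = demographic_parity_gap(sample)
print(rates, gap)
```

A gap near zero means the groups receive favorable outcomes at similar rates; a large gap is a signal to investigate further rather than proof of unfair treatment. Running the same check on fresh data after deployment is one way to carry out the ongoing monitoring described above.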

The Future of AI Regulation and Governance

The FTC's investigation foreshadows a likely shift towards more stringent AI regulation and governance. The future landscape will likely involve a combination of approaches.

- Regulatory Models: Various regulatory models are being considered, ranging from self-regulation by industry bodies to more direct government oversight. A hybrid approach, combining elements of both, might prove most effective.

- Challenges of Regulating Evolving Technologies: Regulating rapidly evolving AI technologies presents considerable challenges. Regulations must be flexible enough to adapt to technological advancements while maintaining their effectiveness. This requires continuous monitoring and adaptation of regulatory frameworks.

- International Implications: AI regulation also has significant international implications. Collaboration between countries is crucial to establish consistent standards and prevent regulatory arbitrage. Harmonizing global standards is key to effective AI governance.

The Impact on OpenAI and the Broader AI Landscape

The FTC probe is likely to have far-reaching consequences for OpenAI and the broader AI landscape.

- Increased Scrutiny and Stricter Regulations: The investigation signals a likely increase in scrutiny for AI companies and the potential for stricter regulations. This could include more rigorous audits, enhanced transparency requirements, and stricter penalties for violations.

- Implications for Innovation and Investment: Increased regulation could stifle innovation and reduce investment in the AI sector. However, a well-designed regulatory framework could also promote responsible innovation and attract investment in ethical AI development.

- Shifts in AI Development Practices: The probe may prompt shifts in AI development practices and priorities, with a greater emphasis on ethical considerations, transparency, and accountability. Companies may invest more heavily in AI safety research and implement stricter internal guidelines.

Conclusion

The FTC's investigation into OpenAI underscores the urgent need for robust AI accountability mechanisms. The future of AI hinges on establishing clear ethical guidelines and regulatory frameworks to ensure responsible innovation and mitigate potential harms. This probe serves as a stark reminder that the development and deployment of powerful AI systems must be guided by principles of fairness, transparency, and accountability. Moving forward, we need a collaborative effort involving researchers, developers, policymakers, and the public to navigate the complexities of AI accountability and build a future where AI benefits all of humanity. Let's demand greater AI accountability and work towards a responsible AI future.

Featured Posts

-

Tesla Q1 Profits Plunge Amid Musks Political Backlash

Apr 24, 2025

Tesla Q1 Profits Plunge Amid Musks Political Backlash

Apr 24, 2025 -

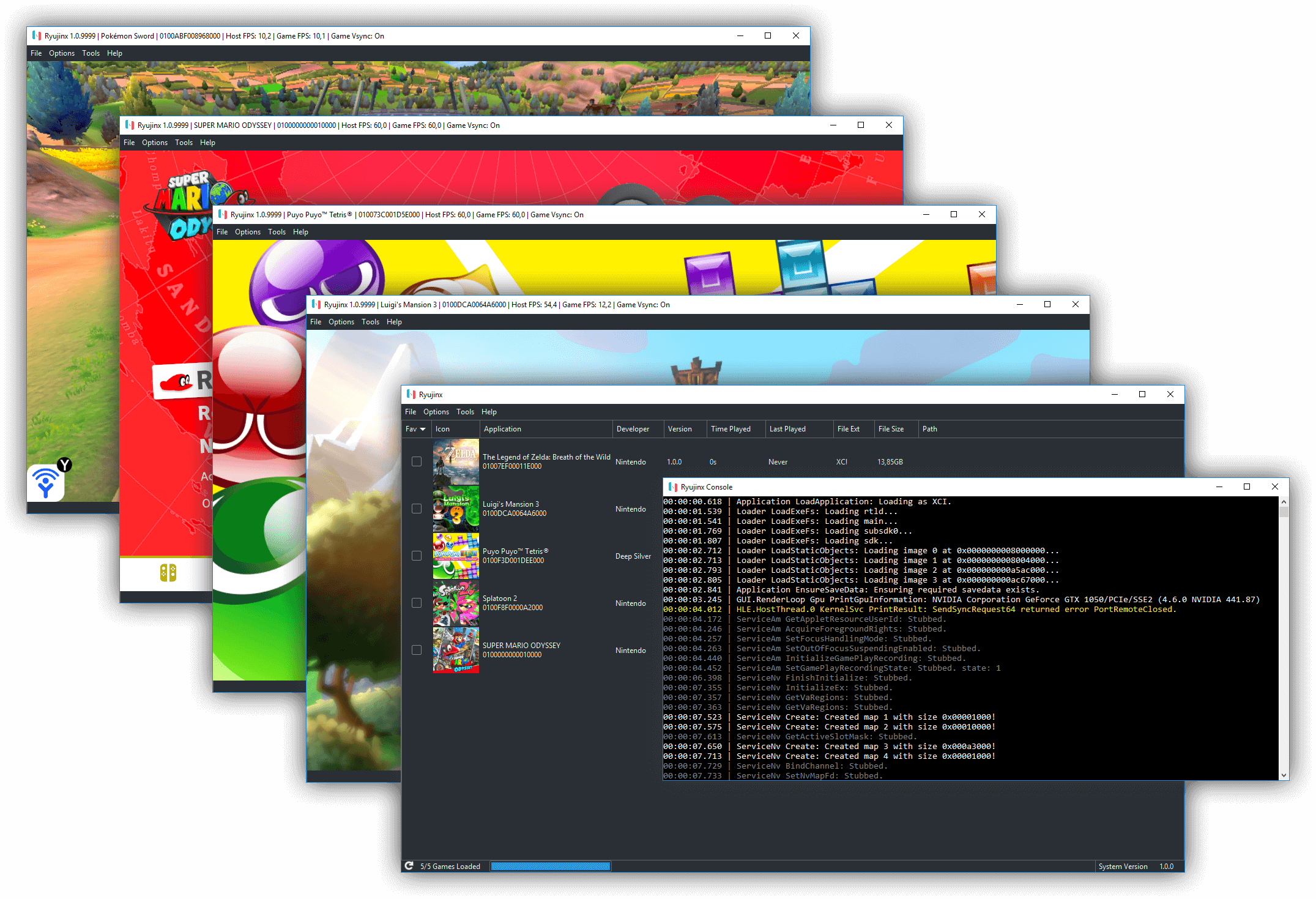

Ryujinx Emulator Development Halted Nintendo Contact Confirmed

Apr 24, 2025

Ryujinx Emulator Development Halted Nintendo Contact Confirmed

Apr 24, 2025 -

Tina Knowles Missed Mammogram Leads To Breast Cancer Diagnosis A Wake Up Call

Apr 24, 2025

Tina Knowles Missed Mammogram Leads To Breast Cancer Diagnosis A Wake Up Call

Apr 24, 2025 -

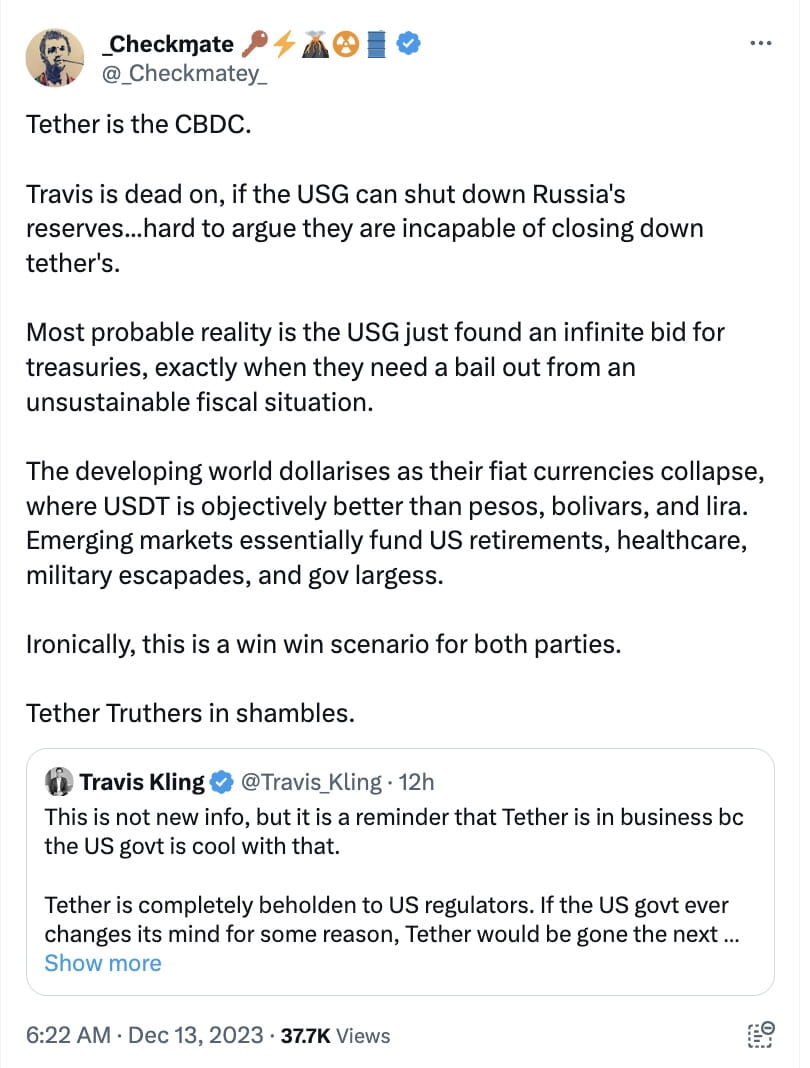

Cantor Fitzgerald In Talks For 3 Billion Crypto Spac With Tether And Soft Bank

Apr 24, 2025

Cantor Fitzgerald In Talks For 3 Billion Crypto Spac With Tether And Soft Bank

Apr 24, 2025 -

Trumps Transgender Sports Ban Faces Legal Challenge From Minnesota Ag

Apr 24, 2025

Trumps Transgender Sports Ban Faces Legal Challenge From Minnesota Ag

Apr 24, 2025